MBZUAI is changing the landscape of large language models in the region.

Wednesday, November 01, 2023

Previously published in MIT Tech Review Arabia.

Given recent advances in large language models (LLMs), particularly the introduction of OpenAI’s GPT-3.5 and GPT-4 models, you have likely been considering how these developments will shape your sector in the coming months. Generative artificial intelligence (AI) has made significant inroads across many sectors and fields, including health, education, economics, and climate. Beyond its transformative potential, generative AI promises substantial economic benefits: a recent McKinsey & Company report estimates annual economic gains of $2.6 trillion to $4.4 trillion. These developments signal a pivotal moment in the evolution of AI and its impact on a wide range of industries.

While the recent advancements in LLMs are undoubtedly promising, they are not without their share of concerns and risks, ranging from accuracy and reliability to safety and environmental impact. However, major institutions are taking proactive measures to address these issues and seek solutions. One such institution is Mohamed bin Zayed University of Artificial Intelligence (MBZUAI), recognized as the world’s first postgraduate research university dedicated to AI.

MBZUAI has led open-source AI efforts in the region since it was established in 2019, through rigorous research initiatives, collaborations with respected institutions, and work on cutting-edge language models in Arabic and English. These efforts reflect a commitment to harnessing AI’s potential while addressing its challenges.

The university has played a key role in developing AI and expanding its uses, including generative models. It took part in the development of Llama 2, Meta’s open-source LLM, providing early feedback on the model as one of the institutions, alongside 50 others, that Meta invited to evaluate it before its final release.

Professor Preslav Nakov, Department Chair and Professor of Natural Language Processing (NLP) at MBZUAI, says that Llama 2 marks a significant improvement over its predecessor, Llama 1, in terms of size and carbon footprint.

MBZUAI has also made substantial contributions to other innovative LLMs, including Jais, Vicuna, and LaMini. These efforts are driven by a mission to address critical challenges associated with language models, including their limited ability to verify the accuracy of information and their tendency to sometimes generate incorrect content. The university’s work also targets the economic and environmental costs of these models, with an emphasis on sustainability. In addition, it aims to overcome the limitations of Arabic models that stem from the scarcity of Arabic content online. In doing so, the university seeks to improve the reliability and usefulness of language models.

How do large language models avoid misinformation traps?

Misinformation and the difficulty of verifying information have long accompanied the rapid spread of information on the Internet, and the problem has grown with the emergence of LLMs, which can be used to spread fake news. However, a research paper titled “Fact-Checking Complex Claims with Program-Guided Reasoning,” co-authored by Nakov and a group of researchers, demonstrated that language models can also be harnessed to verify information.

The authors developed a system called ProgramFC that takes a complex claim, breaks it down into simpler component questions and sub-claims, and verifies each of them in order to judge whether the overall claim is true or false and to explain the reasons for that judgment. The system relies on three basic components: answering simple questions, validating simple claims, and solving logical expressions.

In the paper, the authors analyze the claim: “James Cameron and the director of Interstellar were born in the same country.” “If you search for this claim, you won’t really be able to find a direct answer on the Internet,” Nakov says. ProgramFC, however, divides the claim into simple questions. Where was James Cameron born? In Canada. Who is the director of the movie Interstellar? Christopher Nolan. Where was Christopher Nolan born? In the United Kingdom. Is the United Kingdom the same country as Canada? No. So the claim is false. “What happened here is that you’re taking a complex claim and breaking it down into components you can validate,” Nakov adds.
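The decomposition above can be written as a small program. The sketch below is a toy illustration, not ProgramFC’s actual implementation: in the paper, a language model generates the reasoning program and separate models answer the sub-questions, whereas here a hypothetical hard-coded dictionary (`KNOWLEDGE`) stands in for them, and the function names are illustrative. The three functions mirror the three components the system relies on: answering simple questions, validating simple claims, and solving the logical expression.

```python
from typing import List, Tuple

# Hypothetical toy knowledge base standing in for the retrieval and
# question-answering models that a real fact-checking system would call.
KNOWLEDGE = {
    "Where was James Cameron born?": "Canada",
    "Who is the director of Interstellar?": "Christopher Nolan",
    "Where was Christopher Nolan born?": "United Kingdom",
}


def question(q: str, trace: List[str]) -> str:
    """Component 1: answer a simple question and log the step."""
    answer = KNOWLEDGE[q]
    trace.append(f"{q} -> {answer}")
    return answer


def verify_same_country(a: str, b: str, trace: List[str]) -> bool:
    """Component 2: validate the simple claim 'a and b are the same country'."""
    result = a == b
    trace.append(f"Is {a} the same country as {b}? -> {result}")
    return result


def predict(*sub_results: bool) -> bool:
    """Component 3: solve the logical expression (here, a conjunction)."""
    return all(sub_results)


def check_claim() -> Tuple[bool, List[str]]:
    """Reasoning program for the claim: 'James Cameron and the director
    of Interstellar were born in the same country.'"""
    trace: List[str] = []
    cameron_country = question("Where was James Cameron born?", trace)
    director = question("Who is the director of Interstellar?", trace)
    director_country = question(f"Where was {director} born?", trace)
    same = verify_same_country(cameron_country, director_country, trace)
    return predict(same), trace


if __name__ == "__main__":
    verdict, steps = check_claim()
    for step in steps:
        print(step)
    print("Verdict:", "TRUE" if verdict else "FALSE")
```

Because every intermediate answer is recorded in `trace`, the verdict comes with a step-by-step explanation, which is the property the article highlights: the system says not only that the claim is false but why.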

As Nakov points out, not every statement requires verification; some are subjective opinions. Statements such as declaring white the ideal color for a car cover in Abu Dhabi, or naming a particular bank or school as the best, are opinions rather than verifiable factual claims. To handle such statements, a simple color-coding scheme can be applied: segments that are fully validated are shaded green, partially verified segments are marked red, and yellow indicates that the system could not verify the information or that it was not considered worth fact-checking. This helps separate subjective opinions from verifiable facts.

Three language models that change the rules of the game

MBZUAI aims to advance LLMs on several fronts, including cost, environmental impact, and the volume of training data they require. Here we review three LLMs that the university has developed or co-developed with other institutions.

Jais: The world’s highest-quality open-source Arabic large language model

Numerous international open-source language models have been launched, but until recently Arabic speakers struggled to find an Arabic language model that could match them, mainly because of the lack of Arabic content on the Internet. This is where Jais comes in. Jais was launched by Inception, a subsidiary of G42, in cooperation with MBZUAI and Cerebras Systems, and was trained on Condor Galaxy 1 (CG-1), an AI supercomputer with 54 million AI-optimized cores.

Nakov says that Jais is the largest and most accurate open-source Arabic LLM in the world. It was trained on 395 billion tokens, comprising 116 billion Arabic tokens and 279 billion English tokens. It outperforms existing Arabic models by a large margin and competes with English models of similar size despite being trained on much less data.
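As a quick check on the figures above, the two token counts add up to the stated total, and Arabic accounts for under a third of the training data, which illustrates the imbalance the model has to work around:

```latex
\begin{aligned}
116\,\text{B (Arabic)} + 279\,\text{B (English)} &= 395\,\text{B tokens} \\
\text{Arabic share} = \tfrac{116}{395} &\approx 0.294 \approx 29\%
\end{aligned}
```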

What is notable about Jais’s development is that knowledge flowed across languages: the model’s English component learned from the Arabic data and vice versa. Drawing on both languages in this way allowed it to overcome the scarcity of Arabic content on the Internet, opening a new era in the development and training of LLMs.

Vicuna: An open-source, sustainable language model

Despite the growing importance of LLMs, the cost of building and maintaining them has typically been financially and environmentally unsustainable, with computing costs in the tens of millions of dollars per year and a carbon footprint comparable to that of a small city.

A team of researchers from MBZUAI, UC Berkeley, Carnegie Mellon University, Stanford University, and UC San Diego set out to address these costs by creating Vicuna, an open-source LLM that cost about US$300 to train. Its carbon footprint is very low compared with that of ChatGPT, which cost more than US$4 million to train and emitted an estimated 500 tons of carbon.

Because it is small relative to its capabilities, Vicuna can easily fit on a single GPU accelerator, whereas the neural network behind ChatGPT has several hundred billion parameters. It is therefore not surprising that Vicuna’s accessibility attracted attention in the industry. On the open-source platform GitHub, it earned more than 12,000 stars in just two weeks, a respectable showing next to the Stable Diffusion model, which took a month to reach 50,000 stars.

Vicuna also challenges the commonly held belief that LLMs with more parameters automatically deliver better results. It demonstrates that low cost does not necessarily mean low quality and that lower-cost models can be financially viable and sustainable. Despite its low cost, Vicuna is highly competitive with ChatGPT and Bard, reaching around 90% of their quality in preliminary evaluations that used GPT-4 as a judge, which made it the strongest publicly available alternative.

LaMini: A miniature version with the capabilities of the larger models

The MBZUAI research team developed another LLM, LaMini-LM, by distilling knowledge from the outputs of GPT models, much as a teacher passes a condensed version of their knowledge on to students. The researchers posed questions to GPT-based chatbots, collected the answers, and used them to train the LaMini-LM models. Although training took much less time, the LaMini-LM models performed almost as well as their larger counterparts. The team suggests that instead of having their workforce rely on cloud-based models to answer questions and produce content, organizations could use a customized solution like LaMini-LM, which could help keep their data secure.
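The question-and-answer collection step described above is a form of sequence-level knowledge distillation: a large “teacher” model’s outputs become training data for a smaller “student” model. The sketch below covers only the data-collection half, under stated assumptions: `toy_teacher` is a hypothetical placeholder for a call to a GPT model, and the record fields are illustrative rather than LaMini-LM’s actual dataset format. Fine-tuning the student on the resulting file would use a standard supervised training loop, which is not shown.

```python
import json
from typing import Callable, Dict, List


def toy_teacher(prompt: str) -> str:
    """Hypothetical stand-in for querying a large teacher model (e.g. a GPT chatbot)."""
    return f"[teacher answer to: {prompt}]"


def build_distillation_set(
    instructions: List[str], teacher: Callable[[str], str]
) -> List[Dict[str, str]]:
    """Ask the teacher each question once and keep the (instruction, response) pair.

    These pairs become supervised training examples for the smaller student model.
    """
    records: List[Dict[str, str]] = []
    seen = set()
    for instruction in instructions:
        if instruction in seen:  # skip duplicate prompts
            continue
        seen.add(instruction)
        records.append({"instruction": instruction, "response": teacher(instruction)})
    return records


def to_jsonl(records: List[Dict[str, str]]) -> str:
    """Serialize records as JSON Lines, a common format for fine-tuning data."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


if __name__ == "__main__":
    prompts = ["What is machine learning?", "Name three uses of AI in health."]
    data = build_distillation_set(prompts, toy_teacher)
    print(to_jsonl(data))
```

Keeping the teacher behind a plain callable means the same collection code works whether the answers come from a hosted API or a locally run model, which matters for the data-security point the team raises.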

Nakov says that while Vicuna was the result of collaboration between the university and several other institutions, LaMini-LM was built entirely at MBZUAI without external partners, supporting the idea that it is possible to develop an LLM with limited resources.

MBZUAI aims to apply its expertise in foundation models and LLMs to support institutions and companies affiliated with the Abu Dhabi government across sectors including health, education, finance, and climate.

Related

MBZUAI to launch lab at UAE’s Ministry of Climate Change and Environment

New lab will support advancing agricultural sustainability in the UAE through research and analysis.

- ai lab ,

- partnerships ,

- sustainability ,

- agriculture ,

- MoUs ,

- environment ,

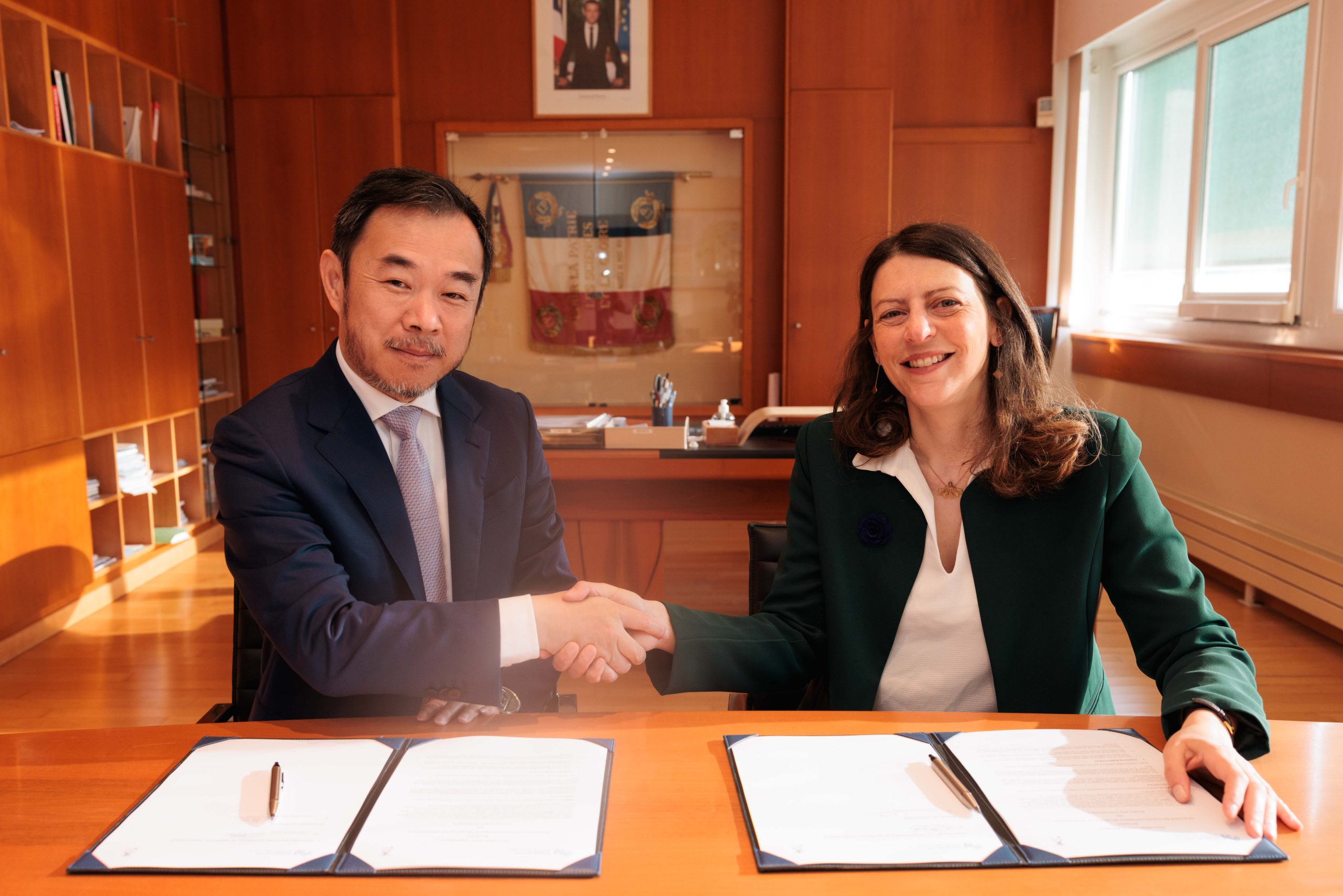

MBZUAI Strengthens Research Ties with France’s École Polytechnique

Mohamed bin Zayed University of Artificial Intelligence (MBZUAI) will deepen ties with France’s AI ecosystem in 2025.....

- MBZUAI ,

- partnership ,

MBZUAI and Berkeley explore the future of machine learning

Machine learning pioneer Michael I. Jordan was among the speakers discussing the cutting-edge ideas shaping the field.

- berkeley ,

- workshop ,

- ML ,

- collaborations ,

- innovation ,

- research ,

- machine learning ,