When medical AI meets messy reality

Tuesday, July 29, 2025

When MBZUAI Ph.D. student Raza Imam is asked to think of a real-world example that could illustrate why he won best paper at the 29th Medical Image Understanding and Analysis 2025 conference in Leeds, UK, he offers the case of a retinal scan smeared by a patient’s tiny head movement.

“Just a slight patient movement can smear the image enough to confuse an AI model into misclassifying a diseased retina as normal or vice versa,” he says.

Written in collaboration with research associate Rufael Marew and Associate Professor of Computer Vision Mohammad Yaqub, both also from MBZUAI, Imam’s paper, “On the Robustness of Medical Vision‑Language Models: Are They Truly Generalizable?”, delivers two things the field has lacked: a rigorous corruption benchmark called MediMeta‑C, and a lightweight defence dubbed RobustMedCLIP (RMC). Together they reveal, and then repair, the way today’s most celebrated medical vision‑language models (MVLMs) crumble when scans look anything less than textbook‑perfect.

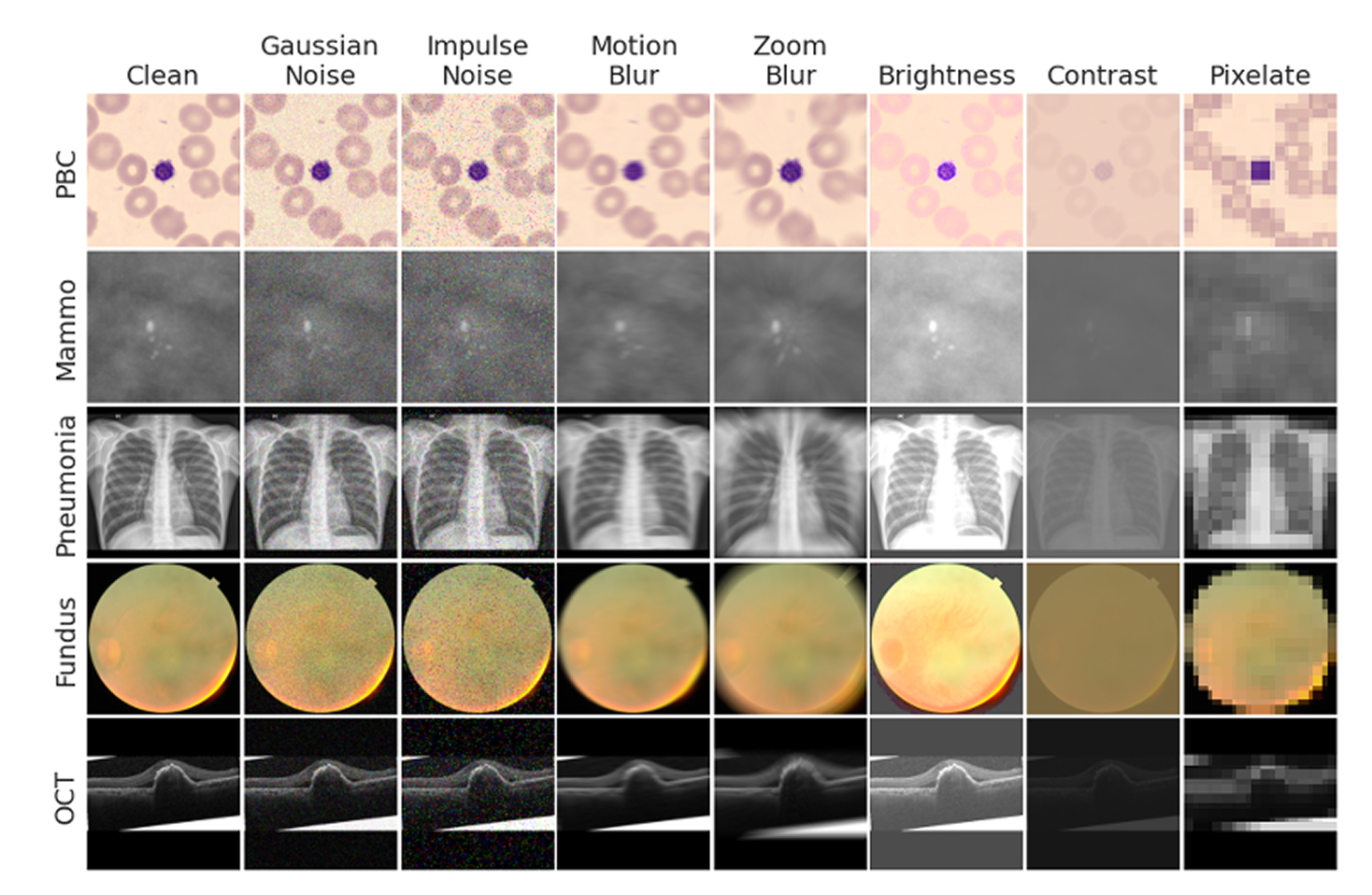

Over the last few years, large multimodal models have dazzled radiologists by matching specialists on clean chest X‑rays and pathology slides. But those benchmarks were “generally pristine,” Imam says. In real hospital wards, you get motion blur, low‑light noise, compression artefacts from ageing scanners, the visual equivalent of static on a phone line – all illustrated in the diagram below. “High accuracy on clean data didn’t guarantee robustness; some top performers broke down under mild corruption,” he found.

To quantify the gap, Imam took inspiration from ImageNet‑C, the computer‑vision benchmark that stresses consumer models with fog, frost and jitter. MediMeta‑C applies seven corruption types (Gaussian noise, impulse noise, motion blur, zoom blur, brightness shifts, contrast shifts and pixelation), each at five severity levels, across five medical modalities from cell microscopy to retinal OCT. That alone yields 175 distinct test sets.
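The arithmetic behind that figure is easy to check. The sketch below enumerates the benchmark grid and applies one toy corruption to show what a "severity level" means in practice. The corruption names come from the article; the three placeholder modality names and the severity-to-contrast mapping are assumptions made for illustration, not MediMeta‑C's actual settings.

```python
from itertools import product

# The seven corruption types named in the article.
CORRUPTIONS = [
    "gaussian_noise", "impulse_noise", "motion_blur", "zoom_blur",
    "brightness", "contrast", "pixelation",
]
SEVERITIES = [1, 2, 3, 4, 5]
# Only the two endpoints are named in the text; the middle three
# entries are placeholders, not the benchmark's real modality names.
MODALITIES = [
    "cell_microscopy", "modality_2", "modality_3", "modality_4", "retinal_oct",
]

# One test set per (modality, corruption, severity) combination.
test_sets = list(product(MODALITIES, CORRUPTIONS, SEVERITIES))
print(len(test_sets))  # 5 * 7 * 5 combinations


def adjust_contrast(image, severity):
    """Toy contrast shift: pull every pixel toward the image mean.

    `image` is a list of rows of floats in [0, 1]. The mapping from
    severity to contrast factor is hypothetical.
    """
    factor = 1.0 - 0.15 * severity  # assumed: higher severity, flatter image
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    return [[mean + (p - mean) * factor for p in row] for row in image]
```

A model would then be scored separately on each of the enumerated test sets, which is what makes it possible to say which corruption, at which severity, breaks which modality.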

He then paired MediMeta‑C with an earlier low‑resolution testbed, MedMNIST‑C, to build what he calls “a full‑spectrum crash test” for MVLMs.

Across the combined benchmark, baseline models suffered more than a two‑fold increase in error on average. Fundoscopy (eye‑disease screening) was a horror story: some models collapsed under a simple contrast tweak, a reminder that diabetic‑retinopathy programs in pharmacies and rural clinics might be skating on thin ice.

Perhaps most sobering was the third research question in the paper: does clean accuracy correlate with robustness? Imam discovered that clean accuracy does not equal real-world reliability, especially in medical AI. A model that topped the leaderboard on uncorrupted data often fared worse than simpler rivals once noise crept in.

Rather than rebuild models from scratch, Imam opted for a surgical patch: few‑shot LoRA tuning. The idea is to update ≈ 1% of an existing model’s parameters (specifically the low‑rank adapters inside its attention layers) using a tiny, highly diverse sample of clean images from each modality. Training takes under two hours on cheaply available GPUs, a “game‑changer for hospitals with thin IT budgets,” he says.
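To see why a low‑rank adapter touches so few parameters, consider a minimal sketch of the general LoRA idea in plain Python. The frozen weight matrix `W` is left alone and only two thin matrices, `A` and `B`, are trained. The hidden size of 768 and rank of 4 used below are illustrative assumptions, not the configuration reported in the paper, and the ratio shown is for a single weight matrix rather than the whole model.

```python
def lora_param_counts(d_in, d_out, rank):
    """Return (frozen, trainable) parameter counts for one adapted matrix."""
    frozen = d_in * d_out              # the original weight W (d_out x d_in)
    trainable = rank * (d_in + d_out)  # A (rank x d_in) plus B (d_out x rank)
    return frozen, trainable


def matvec(M, x):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def lora_forward(W, A, B, x, scale=1.0):
    """Compute y = W x + scale * B (A x).

    W stays frozen; only A and B would be updated during tuning.
    In the usual LoRA formulation, scale is alpha / rank.
    """
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + scale * u for b, u in zip(base, update)]


# Assumed sizes for illustration only.
frozen, trainable = lora_param_counts(d_in=768, d_out=768, rank=4)
print(f"trainable share of this matrix: {trainable / frozen:.2%}")
```

With `B` initialised to zeros, the adapted layer starts out identical to the original one, so tuning can only move the model away from its pretrained behaviour as far as the few‑shot data justifies.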

The resulting model, RobustMedCLIP, delivered the following results:

| Metric | Baseline BioMedCLIP | RobustMedCLIP |
| --- | --- | --- |
| Mean Corruption Error (mCE) ↓ | 112% (cell microscopy) | 70% |
| Average clean accuracy ↑ | 8% (cell microscopy) | 80% |
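For readers unfamiliar with mCE, the sketch below follows the convention introduced with ImageNet‑C: for each corruption, a model's error summed over severity levels is divided by a reference model's error on the same data, and the ratios are averaged. That is why the score can exceed 100%. Whether the paper normalises in exactly this way is an assumption here, and the function and argument names are hypothetical.

```python
def corruption_error(model_errs, ref_errs):
    """Error for one corruption, summed over severities, relative to a reference model."""
    return sum(model_errs) / sum(ref_errs)


def mean_corruption_error(model_errs, ref_errs):
    """Average the per-corruption ratios and express the result as a percentage.

    Both arguments map a corruption name to a list of error rates,
    one entry per severity level.
    """
    ratios = [
        corruption_error(model_errs[name], ref_errs[name])
        for name in model_errs
    ]
    return 100.0 * sum(ratios) / len(ratios)
```

Under this convention a score of 100% means the model degrades exactly as much as the reference, a score above 100% means it degrades more, and lower is better.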

Even more striking: RMC kept pace with or beat state‑of‑the‑art on pristine images while slashing errors on corrupted ones, closing the dreaded robustness–accuracy gap.

Why does it work? Imam’s hunch: diversity trumps volume. Adapting on a broad slice of modalities teaches the model what not to latch onto (artefact patterns that vary wildly across scanners) while preserving its grasp of real anatomy.

The study also reignited a simmering architecture debate. Vision Transformers (ViT) consistently beat ResNets for robustness. Transformers pick up global context, Imam argues, whereas ResNets lean on local textures that distort easily.

Imam hopes MediMeta‑C will become the seat‑belt test for clinical AI: if a model can’t survive brightness shifts, motion blur or similar distortions, it shouldn’t diagnose patients. He urges vendors to publish robustness scores alongside accuracy numbers, and regulators to demand proof that systems work on real hospital data.

For AI startups working on healthcare-related challenges, the takeaway is simple: train smart, not just big. Rural clinics sending chest X‑rays from older gear need models seasoned on diverse sources, not merely fed terabytes from a single flagship hospital.

Imam sketches a five‑year vision: every medical AI will be stress‑tested under corruptions before deployment, updated continually with minimal retraining, and judged by reliability first, accuracy second. What’s missing? Industry‑wide adoption of corruption benchmarks and a cultural shift he sums up in one line: “If clinicians adopt our benchmark tomorrow, patients will benefit because we’ll stop trusting MVLMs only on clean scans.”

Until then, the next time your eye‑doctor’s AI flags a lesion, spare a thought for the pixelated, low‑contrast world those algorithms too rarely see, and for the Ph.D. student in Abu Dhabi who’s determined to change that.

