Towards trustworthy generative AI

Friday, March 24, 2023

Generative AI is so hot right now. Unless you’ve been living under a rock, you’ve heard of it, and most likely, you’ve been using it. Right under your nose, Google has started autocompleting your emails and search queries, and many of the memes you’ve liked and shared recently might well have been created by generative AI systems like DALL-E 2.

But you’ve also most likely heard that there are some fundamental flaws in the ability of these generative systems to deliver truthful results. Ask a system trained on the entire internet (ChatGPT4, for example) a question and you’re bound to get an accurate representation of what the internet has to offer on the subject, which is not necessarily what you were looking for, nor “the truth,” insofar as it can be defined.

This is a problem, of course, in daily life, as we strive to solve problems using advanced technology. But perhaps more pressingly, it is a problem as researchers grapple with how to incorporate AI into medicine, to give just one example. In AI for health, an added layer of urgency enters the conversation, as biomedical research, the development of pharmaceuticals, personalized medicine, the future of public health, and quite a bit else all hang on the reliability and veracity of the information generated.

Prediction and truth

The main problem, according to machine learning researchers, is that with many of today’s generative AI systems, we cannot say with certainty why a particular result was arrived at. So whether you ask for a primer on Indonesian weather patterns, or a bespoke image of a Germanic castle on Mars, you can’t be certain what has caused the outcome the system has generated – it’s a black box to a degree.

“The trouble is that machine learning mainly focuses on prediction, when what we need to recover is the truth,” MBZUAI Associate Professor of Machine Learning Kun Zhang said. “So in the case of AI in health, we are no longer looking just for a prediction. The system has to be infinitely more flexible and deliver the true relationships between genes to provide meaningful and accurate information.”

Zhang, who is also the Director of the Center for Integrative Artificial Intelligence (CIAI), is working with his academic collaborators to change that. Zhang’s area of expertise is causality, and in particular, the causal relationships that underlie data. Zhang and his co-authors plan to present their findings on the subject in a paper titled “Scalable Estimation of Nonparametric Markov Networks with Mixed-Type Data,” which has been accepted at ICLR 2023.

According to Zhang, to create generative systems that are accurate and powerful enough to deal with large, complex, heterogeneous data sets, we first have to understand the causal relationships between each distinct piece of information. Next, we must scale the system up to deal with millions of measured variables in a reliable and accurate manner. Only then can we begin to entertain the notion that AI can be used to inform new areas of research, the development of new pharmaceuticals, or the treatment of an individual patient.

“If you assume, as many researchers do, that there are linear relationships between your variables, this might skew all of your results on real problems. On the other hand, if you use flexible models, the learning process will be less efficient. This is why we often say that causal analysis doesn’t scale. With this research, we think we’ve made a large contribution to allowing for the scaling of causal analysis, and therefore, the analysis of millions of complex relationships,” Zhang said.

Causal analysis at scale

Scaling up the understanding of causal relationships is a long-standing challenge in various disciplines of science and machine learning. According to Zhang, this is mostly because researchers have had to assume fairly basic associations between the variables, often linear relationships in which a change to one variable results in a direct and predictable change in another variable.
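To see why a linear assumption can mislead, consider a toy sketch in Python. It is not the method from Zhang's paper; the data, the function names (`pearson`, `demo`) and the noise level are all made up for illustration. One variable depends entirely on another, but through a squared relationship, so a purely linear measure such as Pearson correlation reports almost no association, while the same measure applied to a more flexible feature (the square of the input) recovers it.

```python
import random


def pearson(xs, ys):
    """Pearson correlation: measures only the linear association between xs and ys."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def demo(n=5000, seed=0):
    """Hypothetical data: y is driven by x, but through x squared plus a little noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [x * x + rng.gauss(0.0, 0.05) for x in xs]

    # Linear view: correlate x with y directly.
    linear = pearson(xs, ys)
    # More flexible view: correlate a nonlinear feature of x (its square) with y.
    flexible = pearson([x * x for x in xs], ys)
    return linear, flexible


linear, flexible = demo()
print(f"linear correlation of x and y:     {linear:.3f}")
print(f"correlation of x squared and y:    {flexible:.3f}")
```

In this sketch the linear correlation comes out close to zero even though y is almost fully determined by x, which is the kind of skew Zhang describes. The flexible feature here was chosen by hand; discovering such relationships automatically, across very many variables, is the harder problem the research addresses.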

But as we all know, life doesn’t conform to such simple arrangements or discrete variables. Essentially, the nuances and gene-gene interactions that factor into gene expression are less like a light switch and more akin to a Jackson Pollock masterpiece.

Zhang is careful to stress that this work is fundamental in nature. But for keen observers of the AI for health space, its implications are profound. Systems that can elucidate the complex relationships between millions of variables would be better equipped to grapple with disease, and could quite possibly play a pivotal role in the realization of personalized medicine.

At last count MBZUAI authors have 22 papers in total accepted at the Eleventh International Conference on Learning Representations (ICLR 2023).

- zhang ,

- generative AI ,

- causality ,

Related

Intelligent, sovereign, explainable energy decisions: powered by open-source AI reasoning

As energy pressures mount, MBZUAI’s K2 Think platform offers a potential breakthrough in decision-making clarity.

- case study ,

- ADIPEC ,

- K2 Think ,

- IFM ,

- reasoning ,

- llm ,

- energy ,

- innovation ,

- research ,

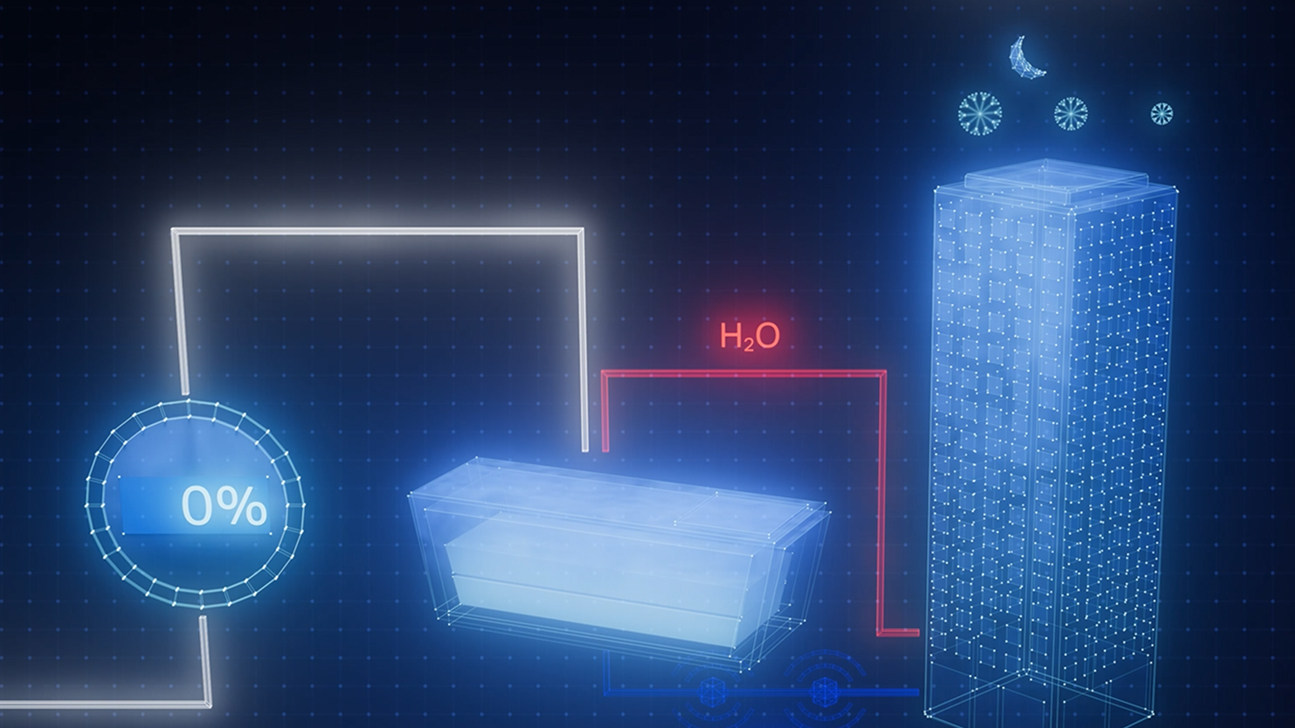

Cooling more people with fewer emissions: intelligent, efficient cooling with AI and ice batteries

MBZUAI's Martin Takáč is leading research to develop an AI-driven energy management system that optimizes the use.....

- energy ,

- cooling ,

- solar ,

- ADIPEC ,

- sustainability ,

- innovation ,

- research ,

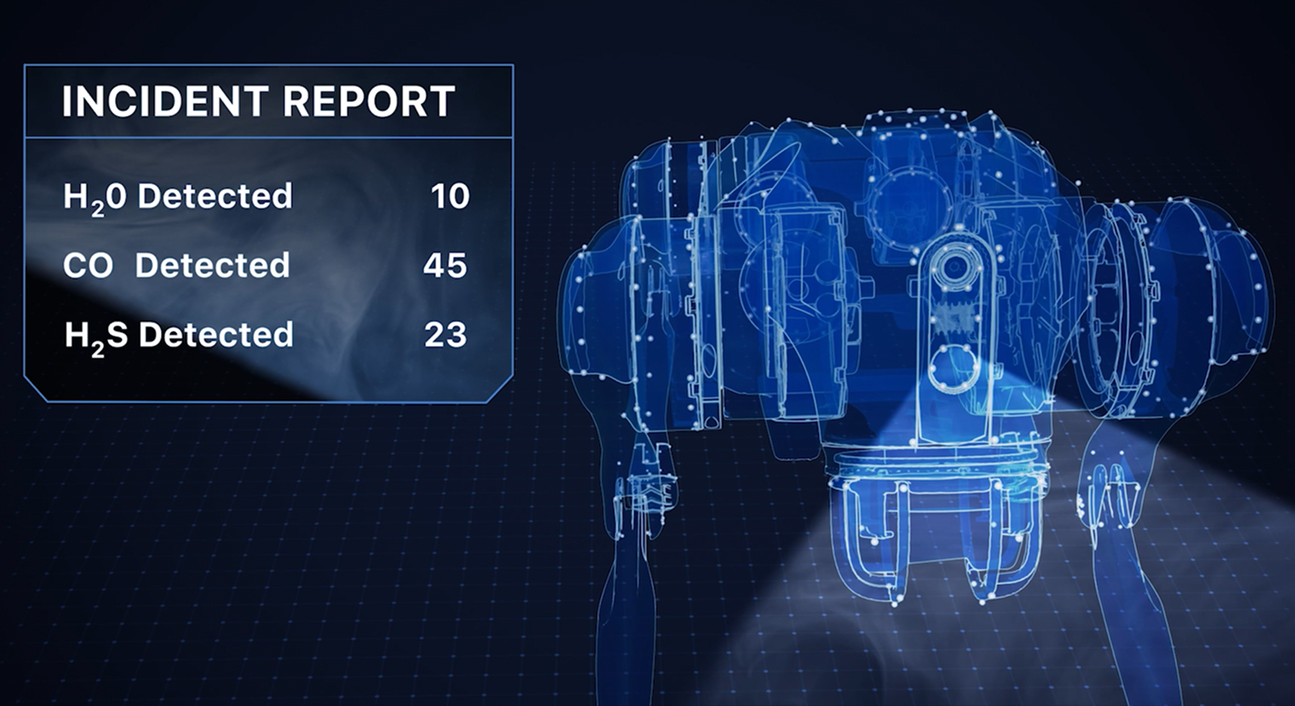

Faster, safer and smarter inspection: AI-powered robotics for industrial safety

MBZUAI's autonomous robotic system, LAIKA, is designed to enter and analyze complex industrial environments – reducing the.....

- research ,

- autonomous ,

- case study ,

- innovation ,

- infrastructure ,

- energy ,

- industry ,

- robotics ,