The diagnosis game: A simulated hospital environment to measure AI agents’ diagnostic abilities

Friday, September 26, 2025

There is a genre of social media videos found on platforms like Instagram and TikTok in which medical students challenge their peers to diagnose a theoretical patient in less than a minute. It’s a way for students to put their hard-earned knowledge and diagnostic skills on display in an engaging format.

While these videos are made for entertainment and don’t recreate the exact nature of authentic conversations between physicians and patients, they illustrate an important aspect of real-life clinical interactions. Doctors often come to their conclusions about what might be ailing a patient through a process of informed questioning that repeatedly narrows the universe of potential diagnoses to smaller sets of possibilities.

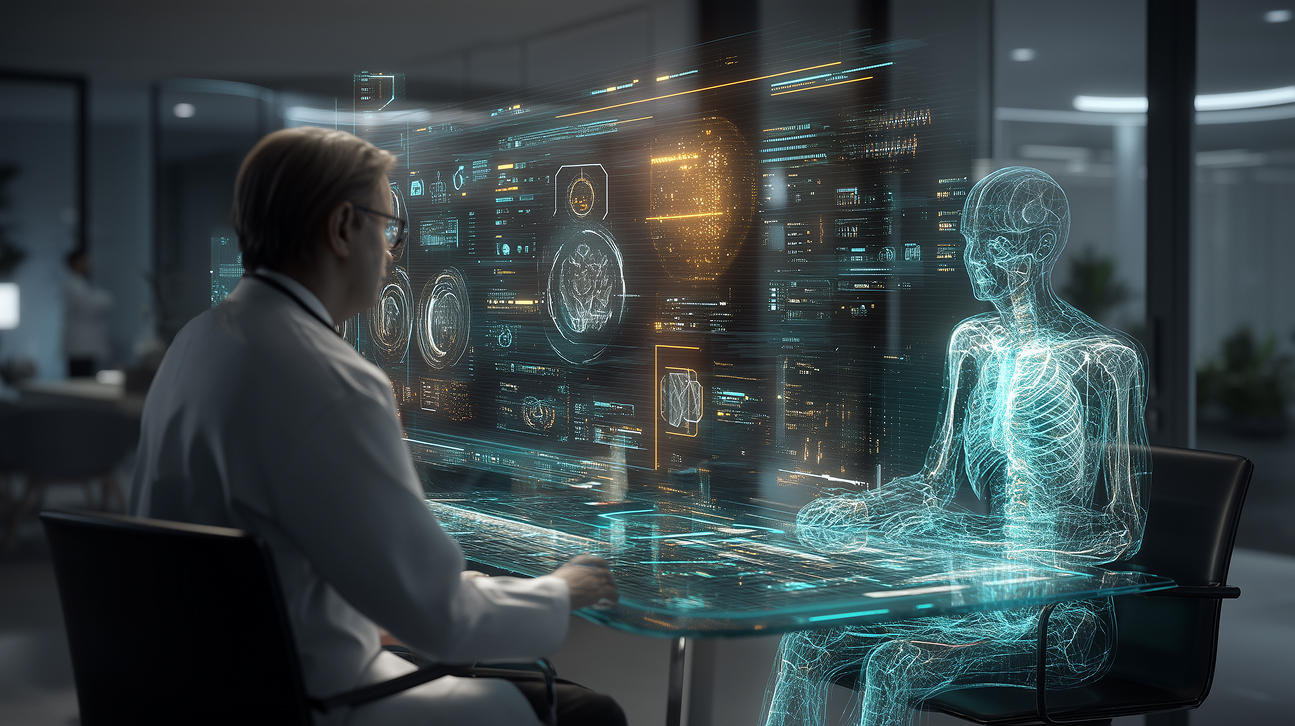

A new initiative by researchers at MBZUAI recreates this back-and-forth between doctor and patient in a simulated hospital environment, using AI agents powered by large language models (LLMs) to mimic the kinds of conversations that might be heard in a typical exam room. The team’s approach provides insights into the diagnostic abilities of language models and could also serve as a training tool to help physicians diagnose patients faster and more accurately.

The team’s simulation, MedAgentSim, will be shared in an oral presentation at the 28th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI) in Daejeon, South Korea. Out of nearly 3,700 papers submitted to the conference, fewer than 80 were accepted for oral presentations.

MedAgentSim was created by MBZUAI doctoral students Mohammad Almansoori and Komal Kumar, together with Hisham Cholakkal, Assistant Professor of Computer Vision.

What brings you in today?

There is growing interest in using AI systems in medicine, and language models have been improving on a variety of benchmarks designed to test their diagnostic abilities. These benchmarks, however, don’t recreate the dynamic nature of conversations between doctor and patient.

When tested on current benchmarks, models are typically given all the information about a patient at once, and sometimes the possible diagnoses are presented in multiple-choice format. This setup can inflate scores, making models seem more capable than they would be in a hospital. “One of our motivations was to see if these systems really are good enough to be used in a clinical setting,” Cholakkal says.

MedAgentSim is a simulated hospital environment populated with three kinds of agents: doctors, patients, and measurement agents that provide additional patient information such as test results and imaging.

The agents are what video game designers call “non-playable characters,” and each is powered by a language model or a vision-language model. They can move around the simulated hospital, start conversations with other agents, interact with medical equipment, and make real-time decisions based on what they encounter.
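The sketch below is one hypothetical way to model these agents in Python. The `Role`, `Agent`, and `backend` names are illustrative assumptions, not MedAgentSim’s actual code, and the language or vision-language model behind each agent is replaced by a plain callable so the example runs without any model.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List, Tuple


class Role(Enum):
    DOCTOR = "doctor"
    PATIENT = "patient"
    MEASUREMENT = "measurement"  # supplies test results and imaging


# Hypothetical stand-in for an LLM or vision-language model call:
# it receives the conversation so far and returns the agent's next utterance.
Backend = Callable[[List[str]], str]


@dataclass
class Agent:
    name: str
    role: Role
    backend: Backend
    location: Tuple[int, int] = (0, 0)  # grid cell in the simulated hospital
    history: List[str] = field(default_factory=list)

    def move_to(self, x: int, y: int) -> None:
        """Walk to another cell, e.g. from the entrance to an exam room."""
        self.location = (x, y)

    def respond(self, message: str) -> str:
        """Record an incoming message and produce a reply via the backend."""
        self.history.append(message)
        reply = self.backend(self.history)
        self.history.append(reply)
        return reply


# A scripted backend stands in for a real model in this example.
patient = Agent("patient-1", Role.PATIENT, lambda h: "I've had a cough for two weeks.")
patient.move_to(3, 7)
print(patient.respond("What brings you in today?"))  # I've had a cough for two weeks.
```

Keeping the model behind a single callable means any proprietary or open-source model could be swapped in without changing the agent logic.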

How MedAgentSim works

In a simulation run by the researchers, a patient agent enters the hospital, finds its way to an exam room, and reports symptoms to a doctor agent.

After receiving the initial information, the doctor asks follow-up questions. This is a key differentiator from other evaluation methods because “real patients often don’t necessarily know what information is relevant and only provide it when asked by a doctor,” Kumar says.

The doctor can ask the patient multiple follow-up questions. If the doctor needs to know more, it can refer the patient to the measurement agent for testing or imaging.
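The consultation flow described above can be sketched as a simple loop. This is a minimal illustration under stated assumptions, not the researchers’ implementation: each agent is a hypothetical callable, and the doctor’s replies are assumed to begin with `ASK:`, `TEST:`, or `DIAGNOSIS:` so the loop can tell a follow-up question from a referral or a final answer. Scripted replies stand in for real model output.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for a model-backed agent: takes the transcript so far
# and returns one line of text.
AgentFn = Callable[[List[str]], str]


def run_consultation(doctor: AgentFn, patient: AgentFn, measurement: AgentFn,
                     max_turns: int = 10) -> Dict[str, object]:
    """Simulate one visit, assuming the doctor's replies are prefixed with
    'ASK:', 'TEST:' or 'DIAGNOSIS:'."""
    transcript: List[str] = [f"PATIENT: {patient([])}"]  # initial complaint
    for _ in range(max_turns):
        action = doctor(transcript)
        transcript.append(f"DOCTOR: {action}")
        if action.startswith("DIAGNOSIS:"):
            return {"diagnosis": action[len("DIAGNOSIS:"):].strip(),
                    "transcript": transcript}
        if action.startswith("TEST:"):
            # Referral: the measurement agent returns test or imaging findings.
            transcript.append(f"MEASUREMENT: {measurement(transcript)}")
        else:
            # Follow-up question: the patient answers only what is asked.
            transcript.append(f"PATIENT: {patient(transcript)}")
    return {"diagnosis": None, "transcript": transcript}  # turn budget used up


def scripted(lines: List[str]) -> AgentFn:
    """Return an agent that replays fixed lines, ignoring the transcript."""
    it = iter(lines)
    return lambda transcript: next(it)


doctor = scripted(["ASK: Any fever?", "TEST: Chest X-ray", "DIAGNOSIS: Pneumonia"])
patient = scripted(["I have a bad cough.", "Yes, since yesterday."])
measurement = scripted(["Right lower lobe consolidation."])
result = run_consultation(doctor, patient, measurement)
print(result["diagnosis"])  # Pneumonia
```

The `max_turns` cap is a design choice for the sketch: it keeps a doctor agent that never commits to an answer from looping forever.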

Almansoori explains that MedAgentSim isn’t only an evaluation mechanism; it can also be used to improve the performance of language models in diagnostic settings. Correctly diagnosed cases are stored in a database, and the doctor agent can draw on them as examples in its prompt to increase accuracy, an approach known as in-context learning. “This allows the doctor agent to improve its capabilities over time as it interacts with more patients,” he says.
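A minimal sketch of this kind of case memory, assuming a simple in-memory store: only correctly diagnosed cases are kept, and the most similar past cases are placed in the prompt as worked examples. Similarity here is plain word-overlap (Jaccard) similarity, swapped in for illustration; the `CaseMemory` class, its methods, and the prompt format are hypothetical and may differ from how MedAgentSim actually retrieves cases.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple

PUNCT = ".,;:!?"


def _tokens(text: str) -> Set[str]:
    """Lowercased words with surrounding punctuation stripped."""
    return {w.strip(PUNCT).lower() for w in text.split() if w.strip(PUNCT)}


@dataclass
class CaseMemory:
    """Stores correctly diagnosed cases as (presentation, diagnosis) pairs."""
    cases: List[Tuple[str, str]] = field(default_factory=list)

    def add(self, presentation: str, diagnosis: str, correct: bool) -> None:
        # Only cases the doctor agent got right are kept.
        if correct:
            self.cases.append((presentation, diagnosis))

    def retrieve(self, query: str, k: int = 2) -> List[Tuple[str, str]]:
        """Return the k stored cases with the highest Jaccard similarity."""
        q = _tokens(query)

        def jaccard(case: Tuple[str, str]) -> float:
            c = _tokens(case[0])
            union = q | c
            return len(q & c) / len(union) if union else 0.0

        return sorted(self.cases, key=jaccard, reverse=True)[:k]

    def build_prompt(self, query: str, k: int = 2) -> str:
        """Prepend retrieved cases as few-shot examples for the new case."""
        shots = "\n\n".join(f"Case: {p}\nDiagnosis: {d}"
                            for p, d in self.retrieve(query, k))
        return f"{shots}\n\nCase: {query}\nDiagnosis:"


memory = CaseMemory()
memory.add("Fever, productive cough, crackles in right lung.", "Pneumonia", correct=True)
memory.add("Chest pain radiating to left arm, sweating.", "Myocardial infarction", correct=True)
memory.add("Headache and stiff neck.", "Tension headache", correct=False)  # not stored
print(memory.build_prompt("Cough and fever for three days.", k=1))
```

Because the model's weights never change, improvement comes entirely from what is placed in the prompt, which is what makes this in-context learning rather than fine-tuning.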

MedAgentSim creates scenarios that are more realistic than traditional benchmarks designed to evaluate LLM performance. “It’s not the accuracy on a standard benchmark that matters for the performance of these systems,” Cholakkal explains. What matters, he says, is “their ability to follow instructions and get the maximum amount of information from a patient through a conversation.”

The impact of MedAgentSim on performance

The MedAgentSim pipeline is flexible and can accommodate both proprietary and open-source language models. For the doctor and patient agents, the researchers used LLMs or large multimodal models (LMMs). Measurement agents were implemented as LMMs so they could interpret medical imaging, such as X-rays and magnetic resonance imaging (MRI) scans.

The researchers plugged several proprietary and open-source models into MedAgentSim to test them as doctor and patient agents. These included ChatGPT-4o, LLaMA 3.3, Mistral Small 3, and Qwen 2 and 2.5. For visual encoding of patient imaging data, they used LLaVA 1.5.

They tested the models on three benchmarks, after preprocessing the benchmark data to fit MedAgentSim’s format. Accuracy was scored by a separate language model acting as a judge, which decided whether each diagnosis was right or wrong.
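Scoring with a language-model judge comes down to asking the judge about each (prediction, reference) pair and averaging its verdicts. The sketch below assumes a hypothetical yes/no prompt and a `judge` callable that wraps a model; the `toy_judge` used here is a rule-based stand-in so the example runs offline, and MedAgentSim’s actual judge prompt is not described in this article.

```python
from typing import Callable, List, Tuple

# Hypothetical judge: any function that sends a prompt to a language model
# and returns its raw text reply.
Judge = Callable[[str], str]

JUDGE_TEMPLATE = (
    "Reference diagnosis: {reference}\n"
    "Predicted diagnosis: {prediction}\n"
    "Do these refer to the same condition? Answer 'yes' or 'no'."
)


def judged_accuracy(pairs: List[Tuple[str, str]], judge: Judge) -> float:
    """Fraction of (prediction, reference) pairs the judge marks as correct."""
    if not pairs:
        return 0.0
    correct = 0
    for prediction, reference in pairs:
        reply = judge(JUDGE_TEMPLATE.format(reference=reference, prediction=prediction))
        if reply.strip().lower().startswith("yes"):
            correct += 1
    return correct / len(pairs)


def toy_judge(prompt: str) -> str:
    """Offline stand-in for a model: exact match or a known synonym pair."""
    fields = dict(line.split(": ", 1) for line in prompt.splitlines()[:2])
    ref = fields["Reference diagnosis"].lower()
    pred = fields["Predicted diagnosis"].lower()
    synonyms = {("heart attack", "myocardial infarction")}
    return "yes" if ref == pred or (pred, ref) in synonyms else "no"


pairs = [
    ("Heart attack", "Myocardial infarction"),
    ("Pneumonia", "Pneumonia"),
    ("Asthma", "COPD"),
    ("Migraine", "Meningitis"),
]
print(judged_accuracy(pairs, toy_judge))  # 0.5
```

A model-based judge lets synonyms such as “heart attack” and “myocardial infarction” count as a match, which an exact string comparison would miss.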

The results showed that the back-and-forth conversations generated by MedAgentSim improved model performance compared with other evaluation methods. For example, on a benchmark from the New England Journal of Medicine (NEJM Extended), MedAgentSim achieved an accuracy of 28.3% using LLaMA 3.3, compared with 24.2% for the model’s best baseline on that benchmark, a gain of 4.1 percentage points. ChatGPT-4o was close behind at 27.5%.

Beyond benchmarking

While the simulation can run fully automatically, humans can also play the role of doctor or patient and interact with AI counterparts. For example, medical students or practicing physicians could take the place of the doctor agent, meeting patients, asking questions, and making a diagnosis. In this arrangement, the system becomes a valuable training tool.

Alternatively, the patient agent could be replaced with information from real patients, which could help gather public health data during a rapidly evolving situation such as a pandemic. The same data could also be used to keep an LLM up to date on emerging diseases and help it self-evolve as circumstances change.

Overall, the researchers say their findings show that MedAgentSim’s conversational approach is well suited to generating realistic clinical insights and interpreting medical images. It’s an important step toward a future where AI can help physicians in both learning and practice.
