Teaching machines what they don’t know: a new approach to open-world object detection

Tuesday, February 20, 2024

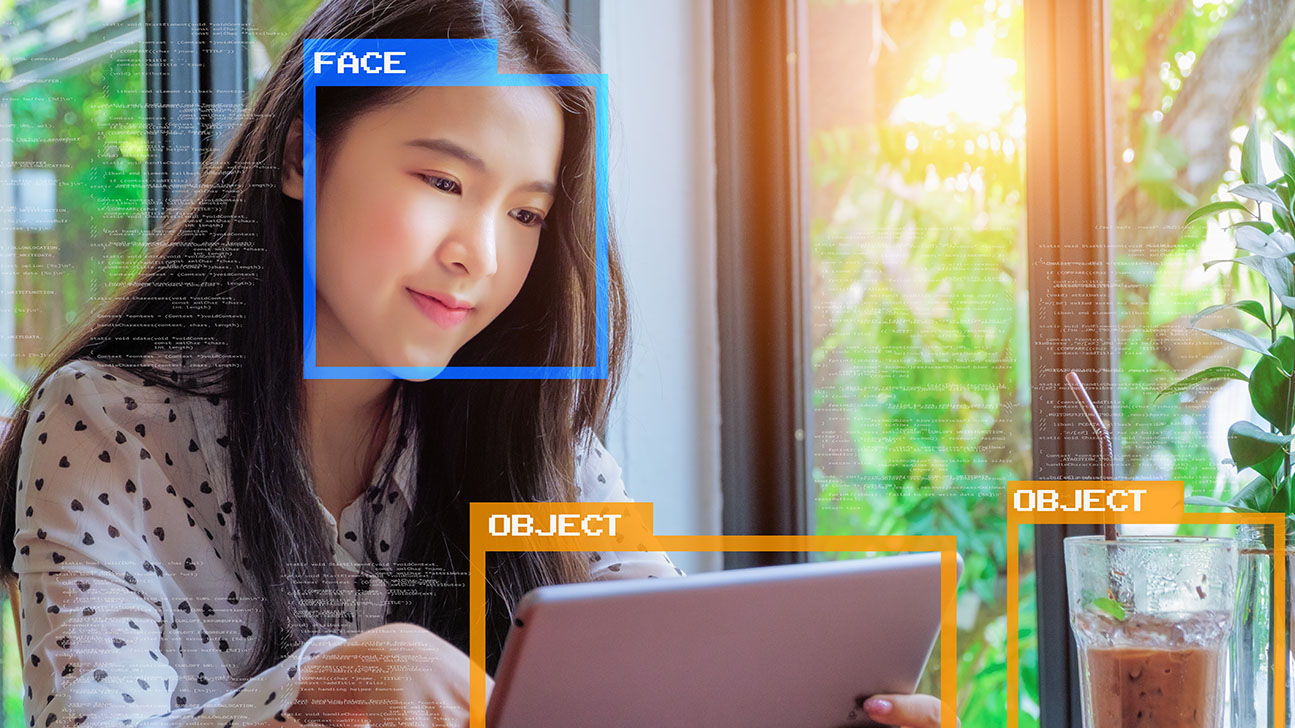

Object detection provides machines with the ability to identify objects in images. It’s a fundamental capability in many computer vision applications that are used today for activities like autonomous driving and medical image analysis.

Advances in object detection have sparked progress in a wide array of industries by enhancing the ability of machines to interact with and interpret the physical world in a way that is increasingly sophisticated and autonomous.

That said, training a machine for an object detection task typically requires a large investment of time and effort by people. Indeed, painstaking and precise work by humans is required to annotate images in the data sets that are used to train models. These annotations essentially tell the machine how it should interpret different objects in images.

In the most basic example, an annotator may locate each object in the image and classify those objects into categories, like “cat” and “dog.” Then, when the machine encounters an image of a husky in the snow, it knows to identify the creature in the image as a dog.

Distinguishing knowns and unknowns

This approach works well when the application only sees images of cats and dogs. But if a model that was trained only on the classes of “cat” and “dog” came across a different kind of animal on which it was never trained, say a bear, it might categorize the bear as a dog. In this case, the problem is that the application doesn’t have the capability to distinguish between known and unknown objects.

“These kinds of systems go forward with the closest guess and make a prediction,” said Hisham Cholakkal, assistant professor of computer vision at MBZUAI. Cholakkal is working to broaden the capabilities of an approach called open-world object detection by which a machine can distinguish between known and unknown objects and later learn to appropriately classify the unknown objects once trained to do so.
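The difference between a "closest guess" and an "unknown" answer can be illustrated with a toy classifier. The sketch below is not the method from the paper: it assumes a model that outputs one raw score per known class, and it uses a simple confidence threshold as a stand-in for a real unknown-object detector. The function names, the scores, and the `0.9` threshold are all hypothetical.

```python
import math

def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def closed_set_predict(scores, class_names):
    """Closed-set behavior: always return the best-scoring known class."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_names[best]

def open_set_predict(scores, class_names, threshold=0.9):
    """Open-set behavior (illustrative): answer "unknown" when the model
    is not confident enough in any known class."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return "unknown"
    return class_names[best]

CLASSES = ["cat", "dog"]
husky_scores = [0.5, 4.0]  # hypothetical scores: clearly dog-like
bear_scores = [1.0, 1.6]   # hypothetical scores: weakly dog-like

closed_bear = closed_set_predict(bear_scores, CLASSES)
open_bear = open_set_predict(bear_scores, CLASSES)
open_husky = open_set_predict(husky_scores, CLASSES)
print(closed_bear, open_bear, open_husky)
```

With these made-up scores, the closed-set function labels the bear a dog, while the thresholded version flags it as unknown and still recognizes the husky. In practice, a plain confidence threshold is often unreliable because models can be overconfident on objects they have never seen, which is part of why open-world detection remains a research problem.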

![Dr. Hisham Cholakkal](https://staticcdn.mbzuai.ac.ae/mbzuaiwpprd01/2022/06/hisham.jpg)

Cholakkal and his Ph.D./M.Sc. students at MBZUAI, Sahal Shaji Mullappilly and Abhishek Singh Gehlot, will share their recent innovations on the topic at the 38th Annual AAAI Conference on Artificial Intelligence, scheduled to be held later this month in Vancouver, Canada. Mullappilly is the lead author and will present the study, titled “Semi-Supervised Open-World Detection,” at the conference.

Open-world object detection applications are designed to operate in environments where the variety and types of objects they encounter change over time, requiring the model to adapt and incorporate new knowledge without needing to be completely retrained from scratch, which requires time and money.

That said, there is room for improvement when it comes to this approach. “The problem with open-world object detection is that to train a model on a new object, an annotator will still need to draw a bounding box around the object in an image,” Mullappilly said. “For example, if you want to train a model to identify roundabouts in satellite data, someone will need to annotate the data and retrain the model.”

This isn’t a great option for a business that relies on an AI object detection application for a crucial function. “In an industry deployment setting, you can’t really spend several months marking new categories and retraining the model. That’s not practical,” Cholakkal said.

Minimizing annotation

Cholakkal, Mullappilly and Abhishek Singh Gehlot co-authored the AAAI paper with Fahad Khan, professor of computer vision, and Rao Anwer, assistant professor of computer vision, both of MBZUAI. In it, they propose an approach that enables users to add new categories to an object detection application with minimal annotation requirements. The model can distinguish between known and unknown objects.

Then once a human is available, “the human can identify new categories and annotate a few examples of each new category,” Cholakkal said. “Subsequently, the object detection approach can incrementally learn the newly added categories in a semi-supervised manner, requiring only a few labeled examples while leaving the majority of the training data unlabeled.”

The team also developed a new architecture for making these predictions, which they call semi-supervised open-world object detection transformer, or SS-OWFormer. “We label a few images and leverage AI’s capability to learn from the limited annotation in this kind of semi-supervised setting,” Mullappilly said.
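To give a feel for what "semi-supervised" means here, the sketch below shows a generic self-training (pseudo-labeling) loop on toy data. It is not SS-OWFormer and does not detect objects in images: it assumes each example has already been reduced to a two-number feature vector, and it swaps in a nearest-centroid classifier for the real model. The class name "bridge", the feature values, and the `max_distance` parameter are hypothetical.

```python
import math

def centroid(points):
    """Average a list of equal-length feature tuples."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))

def fit_centroids(labeled):
    """Compute one centroid per class from (features, label) pairs."""
    by_class = {}
    for features, label in labeled:
        by_class.setdefault(label, []).append(features)
    return {label: centroid(pts) for label, pts in by_class.items()}

def predict(centroids, features):
    """Return (label, distance) for the nearest class centroid."""
    return min(
        ((label, math.dist(features, c)) for label, c in centroids.items()),
        key=lambda pair: pair[1],
    )

def self_train(labeled, unlabeled, max_distance=2.0, rounds=3):
    """Repeatedly pseudo-label unlabeled points that lie close to a class
    centroid, then refit. Points that never get close enough stay unlabeled."""
    labeled = list(labeled)
    remaining = list(unlabeled)
    for _ in range(rounds):
        centroids = fit_centroids(labeled)
        still_unlabeled = []
        for features in remaining:
            label, dist = predict(centroids, features)
            if dist <= max_distance:
                labeled.append((features, label))  # accept the pseudo-label
            else:
                still_unlabeled.append(features)
        if len(still_unlabeled) == len(remaining):
            break  # nothing new was labeled this round
        remaining = still_unlabeled
    return fit_centroids(labeled), remaining

# Two human-annotated examples and five unannotated ones (all values invented).
labeled = [((0.0, 0.0), "roundabout"), ((10.0, 10.0), "bridge")]
unlabeled = [(1.0, 0.0), (2.0, 0.5), (9.0, 10.0), (8.0, 9.5), (5.0, 5.0)]

centroids, leftover = self_train(labeled, unlabeled)
print(centroids)
print(leftover)
```

In this toy run, four of the five unlabeled points are absorbed over two rounds, and the ambiguous point halfway between the classes is left unlabeled rather than forced into a category. The distance cutoff plays the role of a confidence check: a looser cutoff labels more data but risks reinforcing early mistakes.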

The researchers showed that SS-OWFormer performed as well as the current state-of-the-art technology on both known and unknown objects. They also demonstrated that their architecture achieved performance comparable to existing approaches while using significantly less annotated training data, with only 10% of the data labeled.

The study is also the first in the field to propose an open-world object detection problem setting for satellite images.

Cholakkal and Mullappilly intend to continue their efforts by extending their insights to video and by further reducing the number of annotations a person must provide to train a model.

“We will continue to work on this problem setting and improve it,” Cholakkal said, “and hopefully we will see more innovations on this important research area from around the world.”

