Satellites are speaking a visual language that today’s AI doesn’t quite get

Wednesday, October 22, 2025

Spend a few minutes with a modern vision-language model and it feels magical. Ask for “a red car parked outside a cafe,” and it nails it. Point at a picture and ask, “what’s happening here?”, and it gives you a decent story.

Now zoom out to a satellite’s view of a city block, a flooded valley, or a container port at dusk, and suddenly the magic sputters. Counting damaged buildings, telling ships apart, noticing what changed between last week and today are things a disaster agency or a climate analyst actually needs. And they’re exactly where the AI models start guessing.

That gap is the motivation behind GEOBench-VLM, a new benchmark introduced at ICCV 2025 by a team spanning MBZUAI, IBM Research Europe, ServiceNow Research, and partners. The premise is simple enough: if we want AI to help with earth observation, we should test it on earth-observation tasks properly and comprehensively, rather than on a diet of internet photos and open-ended chit-chat.

The co-author of the GEOBench-VLM paper Akhtar Munir, a postdoctoral associate in computer vision at MBZUAI, put it even plainer: “What’s missing today is reliable, spatially grounded reasoning over time: knowing where objects are, how many there are in dense scenes, and what changed between pre/post imagery.” That isn’t an academic nit. Cities, forests, farms, and disaster zones live and die by counts, locations, and change detection.

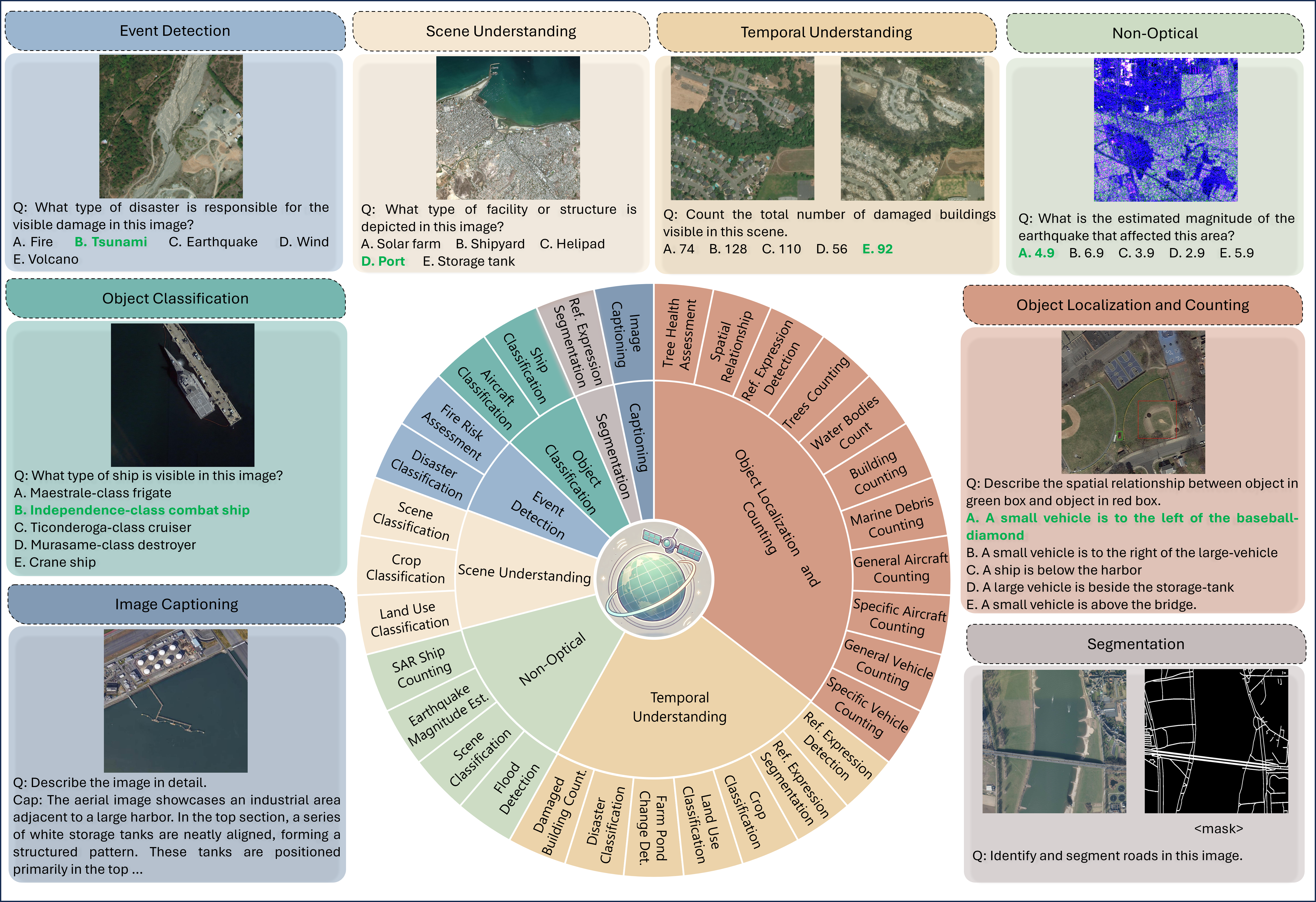

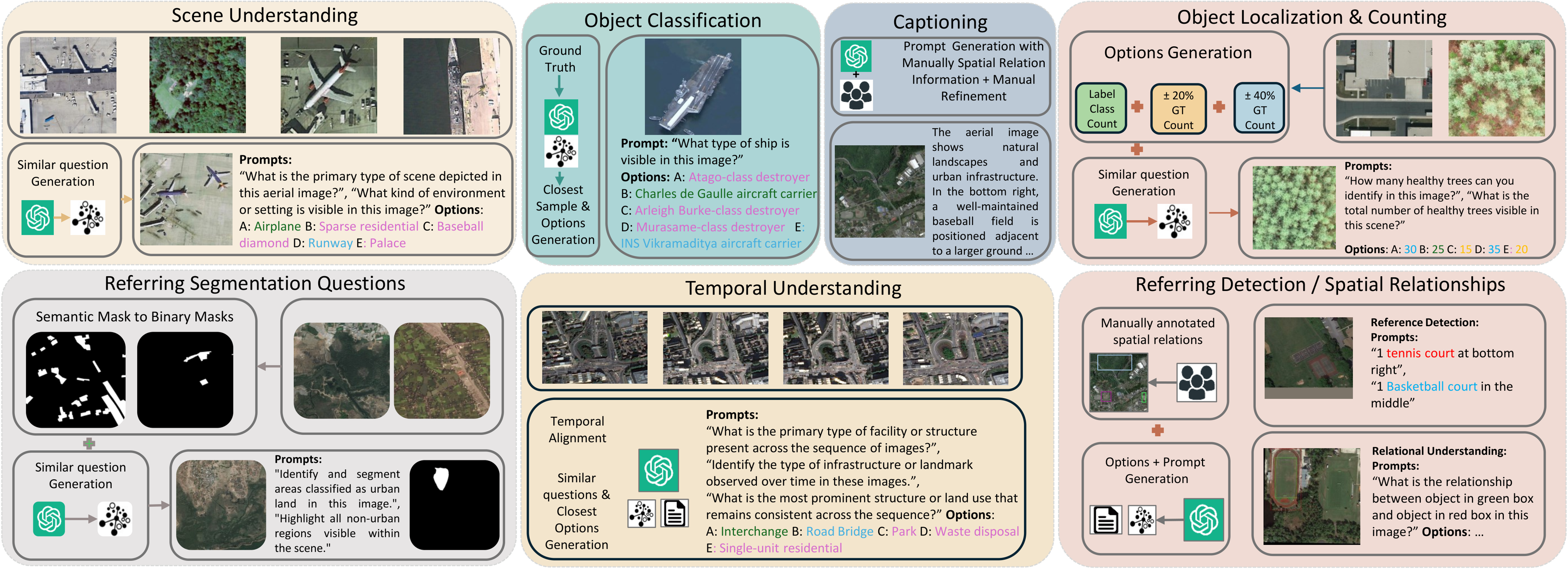

GEOBench-VLM turns that agenda into a sprawling but disciplined test suite. It spans eight task families and 31 sub-tasks, all rooted in the real questions analysts ask of satellite and aerial imagery: scene and land-use classification; object classification (down to fine-grained ship and aircraft types); object localization and dense counting; spatial relationships and referring expressions; semantic segmentation; temporal understanding for change and damage assessment; non-optical sensing (multispectral and SAR); and even captioning for narrative summaries.

Under the hood sit 10,000-plus, human-verified instructions assembled from diverse open datasets, with a careful pipeline that mixes automated generation with manual review. The authors settled on multiple-choice questions for scoring most tasks because automated grading of free-form responses in this domain is still too brittle. MCQs keep evaluation objective and scalable, and they make failure modes legible.

If you’ve followed the genre of “VLM benchmarks,” you might wonder how this one differs from SEED-Bench, MMMU, or geospatial predecessors like VLEO. The short answer is coverage and fidelity. Existing general-purpose suites rarely stress what satellites see: tiny objects in huge scenes; wildly variable resolutions and sun angles; and, critically, time. Many geospatial efforts, meanwhile, skip multi-temporal change, non-optical sensors, segmentation, or dense counting. GEOBench-VLM is explicit about all of those and adds a layer of manual verification to keep the prompts precise and the answer keys defensible. In manual passes, the curators corrected the unglamorous stuff that quietly ruins an eval (ambiguous wording, spatial-relation mismatches, and mask/box alignment errors) before anything touched a leaderboard.

Highlighting gaps and frailties

The first results are very interesting. Across 13 models, including popular open-source systems (LLaVA variants, Qwen2-VL, InternVL-2) and domain-specific ones (EarthDial, GeoChat, RS-LLaVA, SkySenseGPT, LHRS-Bot-Nova) plus a closed commercial baseline (GPT-4o), the best overall accuracy on the MCQ tasks is about 41.7%, roughly twice random guessing. Put differently: even the current front-runners are not yet operationally reliable for the geospatial tasks that matter. Strengths and weaknesses split along intuitive lines. LLaVA-OneVision leads the counting track; GPT-4o is strong on object classification; EarthDial shines on scene understanding; Qwen2-VL looks good on some event-detection and non-optical tasks. But no model is uniformly strong, and the categories that throttle real deployments (dense counting, precise grounding, and pre/post change) remain the most brittle.

Diving deeper into the breakdown of the results, we can see that counting accuracy collapses as object density rises—cars in a megamall lot, containers in a shipyard, small buildings stacked along a hillside. The culprit is basic but stubborn: models miss small instances, confuse near neighbors, and drift numerically when distractor options sit close to the truth. An object-density analysis makes that pain visible: performance drops stepwise from sparse to very dense scenes, with different models failing at different thresholds. In other words, “count to 50” is not the same difficulty as “count to 5,” and geospatial systems must be engineered for that reality.

Grounding is another fault line. GEOBench-VLM evaluates referring expression detection (find “the gray large plane near the bottom-right,” not any plane) at IoU 0.25 and 0.5. Precision is modest across the board; notably, one model trained with strong visual grounding (Sphinx) tops the referring task at both IoU thresholds, while generic models that excel at classification falter when asked to put a tight box on a small target. It’s a useful reminder: “knowing what” is not “knowing where,” and earth observation often cares more about the latter.

Time, the other axis of geospatial reality, is a mixed story. On temporal tasks such as crop evolution, farm-pond change, land-use stability, disaster classification, and damaged-building counts, the benchmark shows small, task-specific gains when multiple snapshots help, and equally frequent regressions when extra frames act more like noise than context. GPT-4o and Qwen2-VL do well on some disaster and damage tasks; EarthDial leads in land-use classification; but nobody is consistently extracting long-range temporal signal. If you’ve tried to align pre- and post-earthquake imagery over uneven terrain with differing sun angles, that finding will not surprise you. Today’s “multi-image” inputs are often concatenation, not temporal reasoning.

Two design choices in the benchmark deserve a closer look if you build models for a living. First, the answer-set engineering for counting: choices are generated around the ground truth with controlled ±20% and ±40% perturbations, so models can’t win by eliminating silly distractors. That makes numeric drift painfully clear and exposes brittleness to prompt paraphrases; indeed, GEOBench-VLM quantifies how accuracy varies across equivalent prompts, and some marquee models swing more than you might expect. Second, the suite goes beyond RGB. It includes non-optical tasks where generalist models stumble despite their scale. For earthquake magnitude estimation from non-optical cues, Qwen2-VL climbs to the top while GPT-4o hits the floor, underscoring the cost of training exclusively on web photos.

Raising the bar

So what does “good enough” look like? Munir sketches a target that sounds ambitious but concrete: think >85% MCQ accuracy across categories (including dense counting), Prec@0.5 > 0.70 on small/medium referring targets, stable performance across paraphrased prompts, and consistently low numeric error. The near-term deployment he’d back is a human-in-the-loop damage-mapping pipeline: the model proposes building-level counts and flood/damage polygons; analysts verify and adjust; authoritative products ship in minutes instead of hours.

How do we get there? The authors suggest three engineering levers. First, resolution-aware tiling and multiscale features to keep tiny objects visible without losing global context. Second, count-aware heads and losses so the model treats numbers as first-class citizens rather than a by-product of classification. Third, temporal modules that actually align and reason over time rather than hoping a bigger backbone will learn it implicitly. Layer in domain adaptation for non-optical sensors, and you have a plausible roadmap.

Benchmarks are not an end state; they’re a contract. GEOBench-VLM’s contract is refreshingly grounded: if you claim your model is ready for cities, climate, or disasters, show that it can count dense objects, localize small ones, reason over time, and read beyond RGB under objective scoring and human-verified prompts.

Today’s results say we’re not there yet, even as specific components look promising. Progress in geospatial AI has always come in bursts when someone puts the right yardstick in front of the field. With this release, the yardstick just got a lot closer to the work people actually do.

- research ,

- ICCV ,

- vision-language models ,

- benchmark ,

- geospatial ,

- imaging ,

Related

MBZUAI and Minerva Humanoids announce strategic research partnership to advance humanoid robotics for applications in the energy sector

The partnership will facilitate the development of next-generation humanoid robotics tailored for safety-critical industrial operations.

Read MoreAI and the silver screen: how cinema has imagined intelligent machines

Movies have given audiences countless visions of how artificial intelligence might affect our lives. Here are some.....

- AI ,

- cinema ,

- art ,

- fiction ,

- science fiction ,

- artificial intelligence ,

Special delivery: a new, realistic measure of vehicle routing algorithms

A new benchmark by researchers at MBZUAI simulates the unpredictable nature of delivery in cities, helping logistics.....

- delivery ,

- logistics ,

- machine learning ,

- research ,

- computer vision ,

- conference ,

- neurips ,

- benchmark ,