Safeguarding AI-for-health systems

Monday, June 05, 2023

Around the world, people benefit not only from the personalized care they receive but also from the work of public health systems, which provide population-level information relevant to personalized treatment. AI researchers support this work with tools such as federated learning, which enable information to be shared safely while preserving privacy.

Sharing anonymized health information lets all of us benefit from the experiences, successes, and failures of millions of our fellow humans, and helps doctors spot proven treatments and emerging threats to our health faster and more easily. But not everyone feels a responsibility to engage with such systems for the betterment of humanity.

AI system administrators must protect against hacking, an ongoing threat, but they must also contend with participants inside the system who skew its results. Whether malicious or not, skewed data undermines the very work researchers set out to do.

Research by a collaborative team from MBZUAI, KAUST, and Mila aims to help administrators identify bad actors and minimize their impact on results. The team's findings shed light on the state of information-sharing systems and on how administrators can protect the critical insights AI promises to deliver for human health.

Sharing safely using federated learning

In federated learning systems, a central server coordinates training without collecting the raw data: it sends a model to participating devices, each device runs computations on its own local data, and only the resulting model updates, not the data itself, are sent back and combined into a shared model. This type of system is how we can analyze vast troves of health information while making safe, ethical use of it in modern, AI-driven health systems. It is how, for example, scientists can distill hundreds of millions of data points about cancer treatment into a handful of insights and recommendations that could save lives.
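To make that loop concrete, here is a minimal sketch of one common federated scheme, federated averaging (FedAvg), on a toy one-parameter regression problem. It is an illustration under stated assumptions, not how any particular health system is built: the model (y ≈ w·x), the learning rate, the equal-weight averaging, and the helper names `local_update`, `fedavg_round`, and `make_client` are all hypothetical, and the "device" data is synthetic.

```python
import random

def local_update(w, data, lr=0.1, steps=5):
    """Run a few gradient-descent steps on one device's private data.

    Toy model: y ≈ w * x with mean-squared-error loss. Only the updated
    weight w leaves the device; the (x, y) pairs never do.
    """
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def fedavg_round(w_global, clients):
    """One round: broadcast the global model, train locally, average the results."""
    local_weights = [local_update(w_global, data) for data in clients]
    return sum(local_weights) / len(local_weights)

def make_client(n, rng):
    """Hypothetical device holding n noisy samples of y = 3x."""
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 3 * x + rng.gauss(0, 0.1)) for x in xs]

rng = random.Random(0)
clients = [make_client(20, rng) for _ in range(5)]

w = 0.0
for _ in range(30):
    w = fedavg_round(w, clients)
print(f"learned w = {w:.2f}")  # should be close to the true value 3.0
```

The server only ever sees the five locally updated weights per round, never the individual (x, y) records, which is the privacy property the paragraph above describes.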

MBZUAI Assistant Professor of Machine Learning Samuel Horváth and postdoctoral fellow Eduard Gorbunov work to better understand these systems, optimize them, and protect them from bad actors. Routinely, administrators of such a system update the model and send the latest version to the network of devices (your phone included), which train it on their local data to turn that data into insights and, ultimately, lives saved. Unfortunately, not everyone is as honest as you are.

“Some users will send back bad information, or even attacks,” Horváth said. “They’re not even necessarily trying to destroy the model, but the manner in which they skew data can be very disruptive to the findings they are based on.”

Gorbunov and Horváth, along with co-authors Peter Richtárik, Professor of Computer Science at KAUST, and Gauthier Gidel, Assistant Professor at Université de Montréal and core faculty member at Mila, have a paper accepted at ICLR 2023 titled “Variance Reduction is an Antidote to Byzantine Workers: Better Rates, Weaker Assumptions, and Communication Compression as a Cherry on the Top.”

The team found that existing defenses against Byzantine workers (participants that send arbitrary, possibly malicious updates) can often be more disruptive to training than no defense at all. In response, Gorbunov et al. propose a new Byzantine-tolerant method, built on variance reduction, that stabilizes and speeds up training while outperforming baseline methods.
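Why do Byzantine workers matter so much? When a server simply averages updates, a single attacker can drag the result arbitrarily far. The sketch below contrasts plain averaging with coordinate-wise median, a standard robust aggregator from the Byzantine-robust learning literature. It is a generic illustration only: it is not the authors' variance-reduced method, and the update vectors and function names (`mean_aggregate`, `coordinate_median_aggregate`) are made up for the example.

```python
import statistics

def mean_aggregate(updates):
    """Plain averaging: what a non-robust server would do."""
    dim = len(updates[0])
    return [sum(u[i] for u in updates) / len(updates) for i in range(dim)]

def coordinate_median_aggregate(updates):
    """Coordinate-wise median: take the median of each coordinate separately.

    If fewer than half of the workers are Byzantine, every output coordinate
    lies within the range of the honest workers' values for that coordinate.
    """
    dim = len(updates[0])
    return [statistics.median(u[i] for u in updates) for i in range(dim)]

# Hypothetical updates: four honest workers roughly agree...
honest = [[1.0, -0.5], [1.1, -0.4], [0.9, -0.6], [1.0, -0.5]]
# ...and one Byzantine worker sends a huge, arbitrary vector.
byzantine = [[1000.0, 1000.0]]
updates = honest + byzantine

print(mean_aggregate(updates))               # first coordinate ≈ 200.8, dominated by the attacker
print(coordinate_median_aggregate(updates))  # [1.0, -0.5]
```

As the paper's title indicates, the authors pair Byzantine tolerance with variance reduction; this sketch shows only the aggregation side of the problem, where a robust rule keeps one bad update from overwhelming the honest majority.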

Ultimately, the team proposes a solution that is worth implementing both because it improves outcomes and because it is moderately effective against various forms of attack. Essentially, there is no “cost” to using their Byzantine-tolerant strategy: no optimization “price” and no loss of speed.

Related

Intelligent, sovereign, explainable energy decisions: powered by open-source AI reasoning

As energy pressures mount, MBZUAI’s K2 Think platform offers a potential breakthrough in decision-making clarity.

- case study ,

- ADIPEC ,

- K2 Think ,

- IFM ,

- reasoning ,

- llm ,

- energy ,

- innovation ,

- research ,

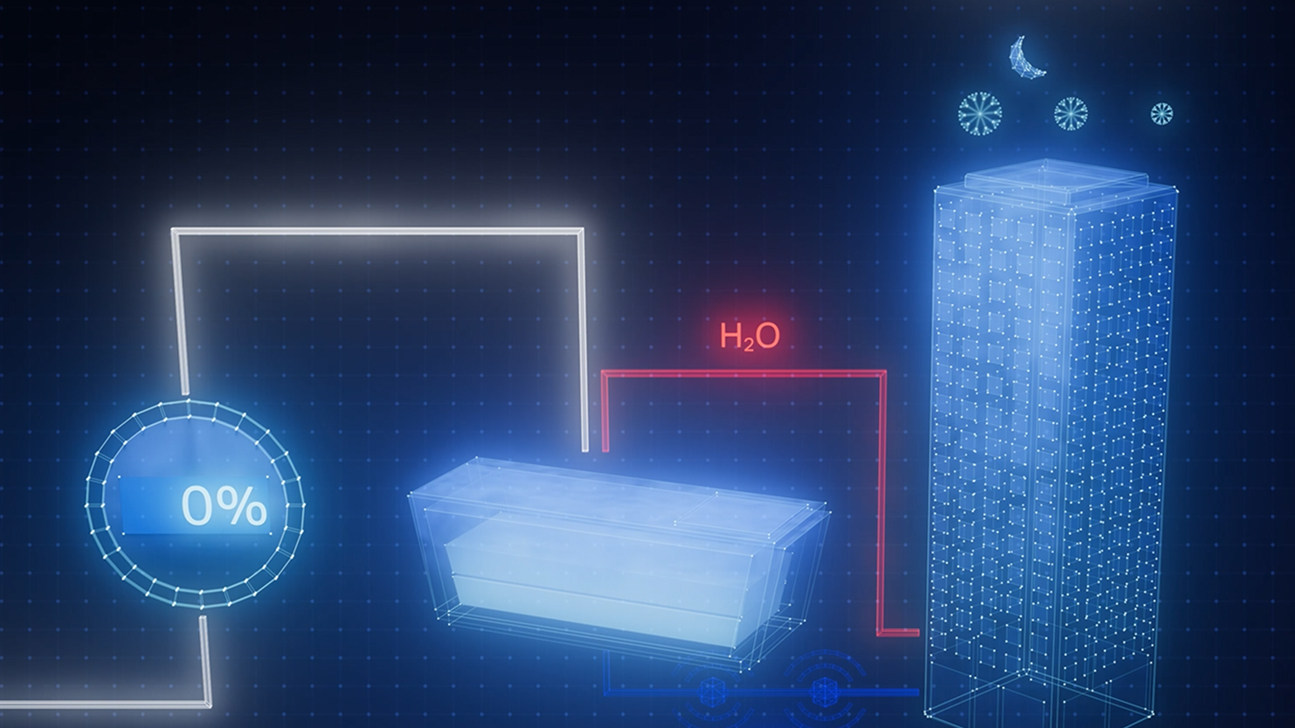

Cooling more people with fewer emissions: intelligent, efficient cooling with AI and ice batteries

MBZUAI's Martin Takáč is leading research to develop an AI-driven energy management system that optimizes the use.....

- energy ,

- cooling ,

- solar ,

- ADIPEC ,

- sustainability ,

- innovation ,

- research ,

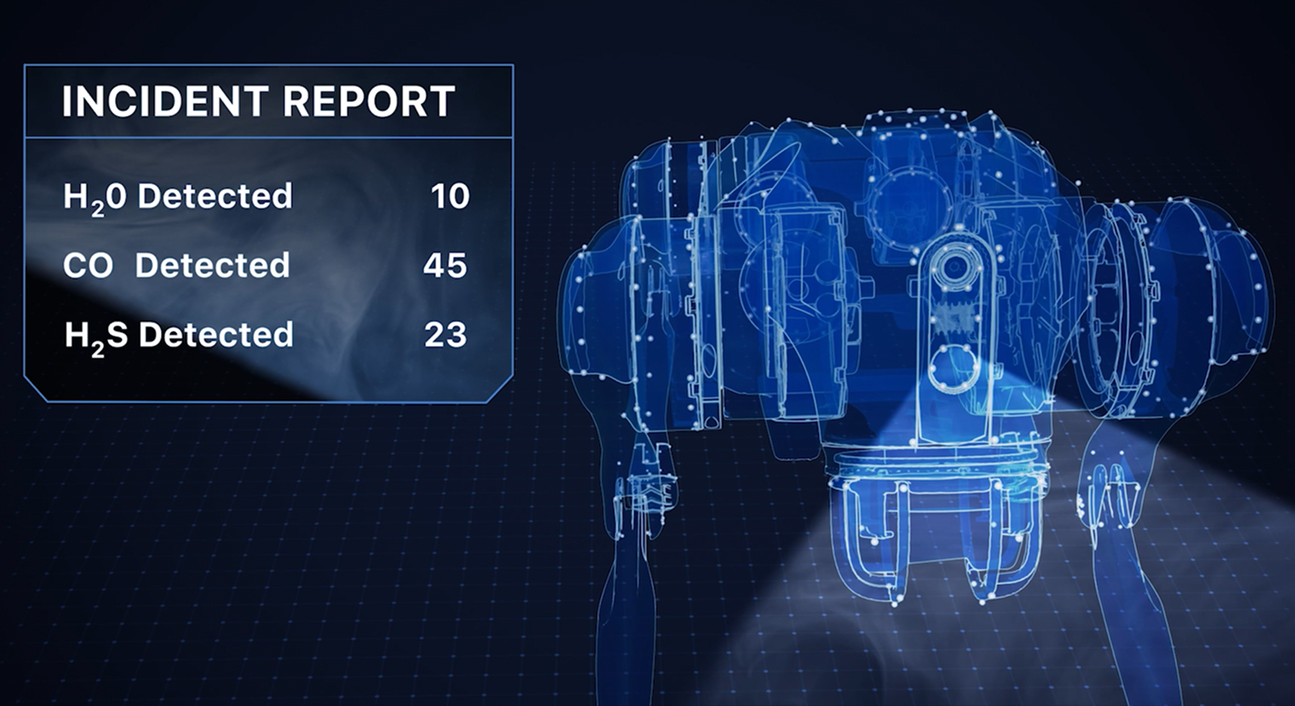

Faster, safer and smarter inspection: AI-powered robotics for industrial safety

MBZUAI's autonomous robotic system, LAIKA, is designed to enter and analyze complex industrial environments – reducing the.....

- research ,

- autonomous ,

- case study ,

- innovation ,

- infrastructure ,

- energy ,

- industry ,

- robotics ,