MBZUAI research at ICLR 2023

Monday, May 01, 2023

At last count, MBZUAI scientists have 22 papers accepted at the Eleventh International Conference on Learning Representations (ICLR 2023). In total, 14 MBZUAI faculty, researchers, postdocs, and students have academic research accepted at ICLR 2023 alongside their national and international co-authors. ICLR 2023 commenced today in Kigali, Rwanda, and will run until Friday.

Kun Zhang, MBZUAI Deputy Department Chair of Machine Learning, Associate Professor of Machine Learning, and Director of the Center for Integrative Artificial Intelligence (CIAI), tops the list of MBZUAI authors at ICLR 2023 as co-author of seven papers.

MBZUAI Affiliated Assistant Professor of Machine Learning Yuanzhi Li was also honored with an outstanding paper honorable mention at ICLR 2023 for “Towards Understanding Ensemble, Knowledge Distillation and Self-Distillation in Deep Learning” – work he produced with his collaborator Zeyuan Allen-Zhu of Meta.

Also of note, a paper titled “Betty: An Automatic Differentiation Library for Multilevel Optimization,” co-authored by MBZUAI President and University Professor Eric Xing, Adjunct Assistant Professor of Machine Learning Pengtao Xie, and others, has been recognized as an ICLR 2023 notable top 5% paper.

One of the seven papers that Zhang and his co-authors will publish at ICLR 2023 is titled “Scalable Estimation of Nonparametric Markov Networks with Mixed-Type Data.”

According to Zhang, to create generative systems that are accurate and powerful enough to deal with large, complex, heterogeneous data sets, researchers must first understand the causal relationships among the variables in the data. Next, they must scale the system up to handle millions of measured variables reliably and accurately.

“If you assume, as many researchers do, that there are linear relationships between your variables, this might skew all of your results on real problems,” Zhang said. “On the other hand, if you use flexible models, the learning process will be less efficient. This is why we often say that causal analysis doesn’t scale. With this research, we think we’ve made a large contribution to allowing for the scaling of causal analysis, and, therefore, the analysis of millions of complex relationships.”

MBZUAI Affiliated Assistant Professor of Machine Learning Yuanzhi Li was honored with an outstanding paper honorable mention at ICLR 2023 for “Towards Understanding Ensemble, Knowledge Distillation and Self-Distillation in Deep Learning.”

In the paper, Li and his co-author try to understand distillation in a new way. Distillation helps computer scientists improve accuracy, increase efficiency in training, or both. The authors’ insight is that “under a natural multi-view structure, without distillation, a neural network can be trained to depend only on part of the features, but with distillation, this problem can be alleviated.”

The awarding committee believed this to be a “very interesting theoretical explanation, leading to a better understanding of the effectiveness of distillation,” according to the ICLR website.


Related

Intelligent, sovereign, explainable energy decisions: powered by open-source AI reasoning

As energy pressures mount, MBZUAI’s K2 Think platform offers a potential breakthrough in decision-making clarity.

- case study ,

- ADIPEC ,

- K2 Think ,

- IFM ,

- reasoning ,

- llm ,

- energy ,

- innovation ,

- research ,

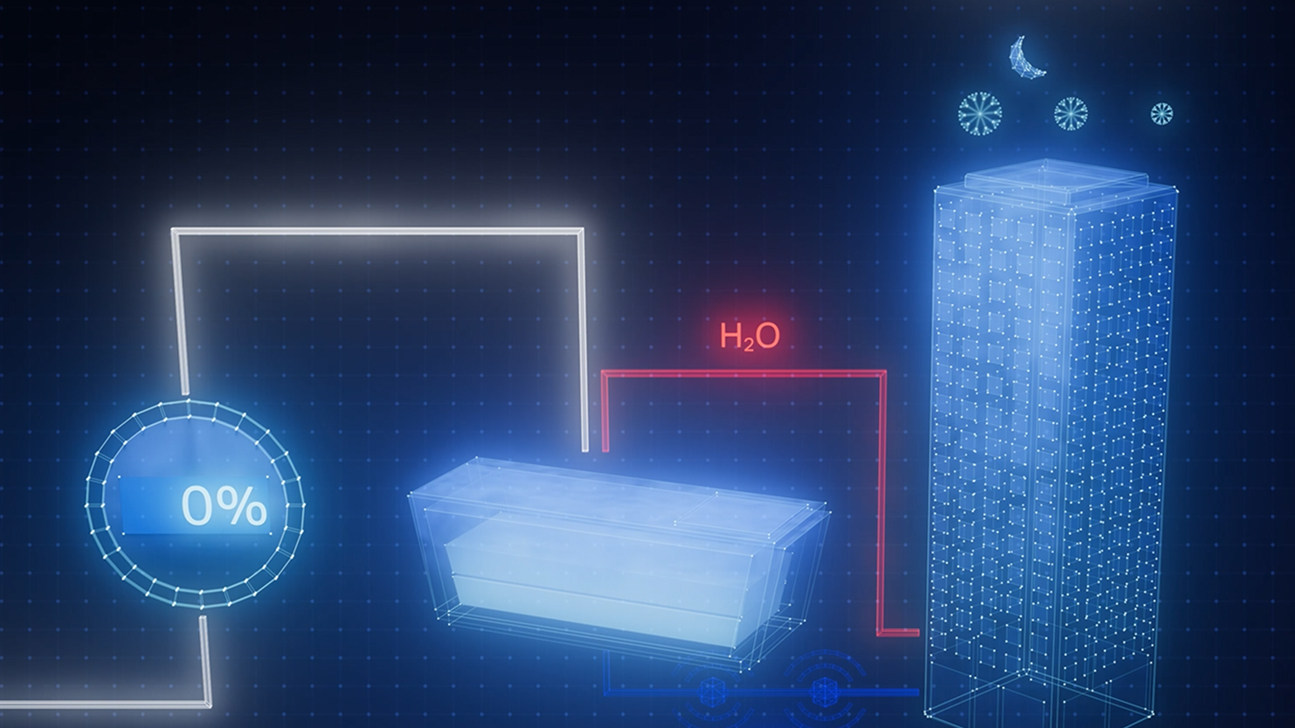

Cooling more people with fewer emissions: intelligent, efficient cooling with AI and ice batteries

MBZUAI's Martin Takáč is leading research to develop an AI-driven energy management system that optimizes the use.....

- energy ,

- cooling ,

- solar ,

- ADIPEC ,

- sustainability ,

- innovation ,

- research ,

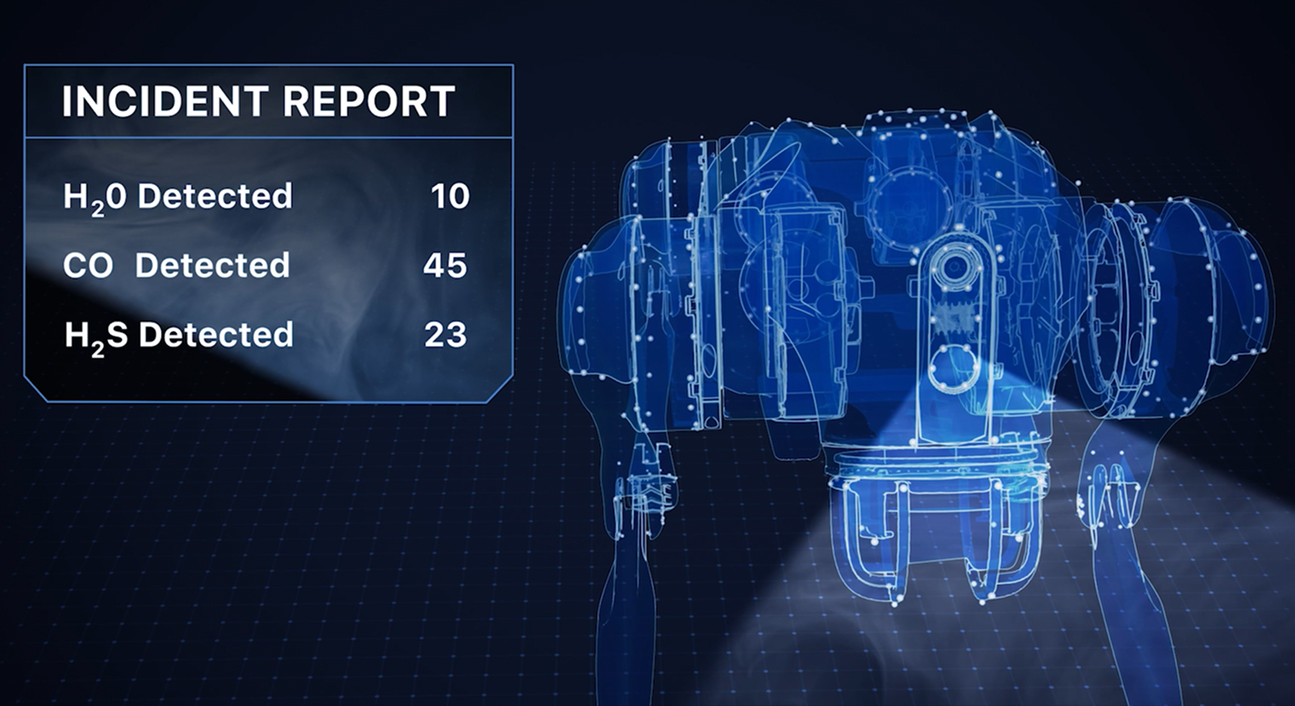

Faster, safer and smarter inspection: AI-powered robotics for industrial safety

MBZUAI's autonomous robotic system, LAIKA, is designed to enter and analyze complex industrial environments – reducing the.....

- research ,

- autonomous ,

- case study ,

- innovation ,

- infrastructure ,

- energy ,

- industry ,

- robotics ,