LLMs tackle math word problems

Monday, July 08, 2024

Ekaterina Kochmar, assistant professor of natural language processing at Mohamed Bin Zayed University of Artificial Intelligence, is interested in educational applications of natural language processing, or NLP. She has built systems that help people learn English as a second language and developed applications that provide guidance about how texts can be adapted for different reading levels.

One current area of exploration for Kochmar relates to what are known as math word problems, which are used in education because they test a broad set of skills and knowledge, including reasoning, basic math and general knowledge. Because they’re written in everyday language, math word problems present an opportunity for NLP tools like large language models, or LLMs, to offer insights about how they could be taught more effectively to students.

Building an AI application for education is a difficult endeavor, however. Even though LLMs, like OpenAI’s ChatGPT, can quickly generate fluent text based on a simple prompt, that doesn’t mean they are appropriate for the classroom. “ChatGPT wasn’t designed to be a tutor,” Kochmar said. “There is a lot that needs to be built around large language models to give them capabilities” that are needed to help students learn.

In a recent study, Kochmar and a coauthor investigated errors that open-source LLMs make when solving math word problems. The research was presented at the 2024 Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL), which was held earlier this month in Mexico City.

With this research, Kochmar and her coauthor, KV Aditya Srivatsa, a research assistant at MBZUAI, are trying to determine what characteristics make math word problems difficult for a machine to answer and predict if an LLM will be able to solve a problem based on the problem’s characteristics. These insights might help to develop better approaches for teaching math word problems to students.

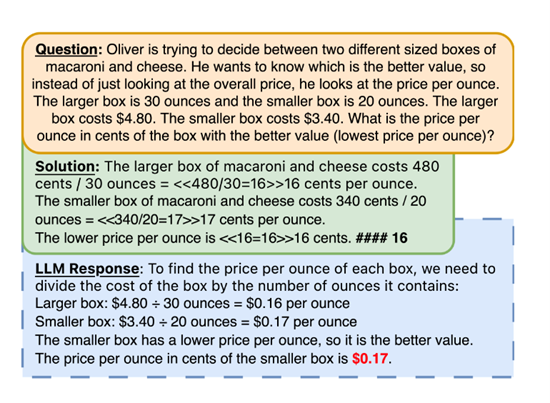

A math word problem, the “Question” in the figure left, was posed to Llama2-70B, a large language model developed by Meta, as part of a study by MBZUAI researchers on LLMs’ ability to solve these kinds of questions. Though the LLM performed the math operations correctly, it still gave the wrong answer to the question.

A multi-faceted task

Though people answer math word problems rather easily, they are challenging for machines. “There are all sorts of things a model needs to do to solve these problems, such as extracting information, assigning the right variables with the right values, understanding that certain words need to be translated into math operations, like addition, subtraction and division,” Kochmar said.

And while LLMs don’t always solve math word problems, they do in many cases. Their performance is surprising because they weren’t made for the task. In the simplest terms, LLMs are designed to predict a likely next word in a sequence of words. Yet, “along the way, LLMs have also developed emergent capabilities,” including a type of reasoning, Kochmar said. “Through the objective of predicting the next word, they have also acquired these other skills.”

The researchers used a dataset of more than 8,000 math word problems that have been collected for the purpose of testing the performance of LLMs. The math in these problems is not in itself difficult, but the questions are phrased in ways that can be confusing. That said, middle school students can typically determine the correct answer.

Kochmar and her coauthor developed a program that analyzes the problems and identifies specific characteristics — called features in machine learning terms — contained in them. In total, the researchers used 23 types of features, which fall into three broad categories: linguistic, mathematical and world-knowledge related.

Linguistic features relate to the length of a question and the complexity of the phrasing. Mathematical features relate to the kind of math operations and math-related reasoning that are required. Real-world knowledge and natural language understanding relates to information not included in the problem. Some examples of real-world knowledge are the number of cents that make up a dollar, or the process for turning a fraction into a percentage. “If a model lacks the ability to translate between different units that are required by a problem, it won’t be able to solve the task,” Kochmar noted.

By identifying the features that are contained in a problem, the researchers were able to associate specific features with the LLMs’ ability to solve problems, providing a sense of what kind of characteristics make math word problems difficult.

“If a large-language model makes certain mistakes on these problems, it’s likely that some students will also be making these mistakes,” Kochmar explained. “If we understand the various ways in which a problem can be misunderstood or approached incorrectly, we can identify those misunderstandings and provide students with guidance to help them avoid those mistakes.”

The researchers found that questions that were long and difficult-to-read and those that required several math operations and real-world knowledge were more frequently answered incorrectly by the LLMs compared to other types of questions. “What is interesting to me is that even the most powerful models still made mistakes,” Kochmar said. “It’s a small subset of the total number of questions, but there were 96 tasks that no model was able to solve.”

The researchers analyzed the performance of four LLMs in the study: Llama2-13B, Llama2-70B, Mistral-7B and MetaMath-13B. MetaMath-13B and Llama2-70B showed similar performance, but MetaMath-13B had the lowest number of wrong and the highest number of correct answers.

Next question

Kochmar is continuing this line of inquiry and wants to see if it’s possible to predict for any given question what the best model would be to solve it.

She is also interested to explore models’ ability to reason. This kind of analysis, however, is difficult because researchers don’t know if a model is simply repeating information that it was exposed to during training or if it is engaging in a kind of reasoning.

“We don’t assume that they reason like humans,” Kochmar said. “But we want to understand if given a premise they can derive a correct conclusion.”

- natural language processing ,

- research ,

- mathematics ,

- nlp ,

- llm ,

- NAACL ,