Cultural awareness in AI: New visual question answering benchmark shared in oral presentation at NeurIPS

Tuesday, January 14, 2025

Over the past several years, AI developers have built models that can process both text and images. These so-called multimodal large language models (LLMs), which include OpenAI’s ChatGPT and Meta’s LLaMA, also have the ability to work in many languages. The performance of these systems, however, varies significantly from one language to the next. They’re best with Chinese, English and a handful of other European languages, yet struggle, or don’t work at all, on thousands of other languages that are spoken by people around the world.

While fluency in a language is a requirement for multimodal LLMs to be useful to people, models must also possess capabilities related to local cultures, the nuances of customs, traditions and etiquette that make one place different from the next.

A team of researchers from the Mohamed bin Zayed University of Artificial Intelligence and other institutions has recently compiled a first-of-its-kind benchmark dataset designed to measure the cultural understanding of multimodal LLMs with the goal of improving their performance in a wide range of languages. The breadth of the collaboration is remarkable, with more than 70 researchers contributing to the project.

In addition to building the dataset, the researchers also tested several multimodal LLMs on it and found that even the best models were challenged by the questions, illustrating that there is a significant need to improve models’ performance with many languages and cultures.

The work was shared in an oral presentation at the 38th annual Conference on Neural Information Processing (NeurIPS), held in Vancouver. Of more than 15,000 papers submitted to the conference, only 61 were accepted for oral presentation, according to a website that tracks conference information.

“We hope to motivate people to make models more knowledgeable about different cultures so that AI can be fairer for more people,” says David Romero, a doctoral student in computer vision at MBZUAI and co-author of the study.

Breadth and depth

Romero and his colleagues call their benchmark dataset culturally diverse multilingual visual question answering (CVQA) and it tests a model’s ability to answer questions related to culturally relevant images. Images in the dataset include photos of local dishes, personalities and famous buildings and monuments, among other things.

CVQA is composed of multiple-choice and open-ended questions and is written in both local languages and English. It includes questions from 31 languages written in 13 scripts. There are more than 10,000 questions total, covering 10 categories, such as sports, geography and food.

The researchers organized CVQA according to country-language pairs — Nigeria-Igbo is an example — which situates a language in a geographical location. The benchmark includes six languages spoken in India (Bengali, Hindi, Marathi, Tamil, Telugu, Urdu) and four spoken in Indonesia (Indonesian, Javanese, Minangkabau, Sudanese). Spanish is paired with seven countries (Argentina, Chile, Colombia, Ecuador, Mexico, Spain, Uruguay) and Chinese is included for both China and Singapore. Each country-language pair includes at least 200 questions. Some of the least studied languages include Breton, Irish, Minangkabau and Mongolian. In sum, 39 country-language pairs are included.

Other multilingual visual question answering benchmarks have been compiled before, but typically these have been based on translations from English. Some have also used the same images across languages, which lacks cultural specificity. These other datasets have also traditionally skewed towards Western cultures, Romero said.

Alham Fikri Aji, assistant professor of natural language processing at MBZUAI and co-author of the study, explains that translated datasets are problematic “because you lose context and might ask questions that aren’t necessarily asked in that region. We wanted to build data from scratch that is written by people who are from those regions.”

Collaboration across continents

The team was able to include so many languages and culturally relevant images in the benchmark by working with native speakers from all over the world. They describe their approach as a “grassroots crowd-sourcing collaboration” and the result is substantial linguistic and cultural diversity.

“We have been working closely with different NLP communities,” Aji says. “I work with the NLP community in Southeast Asia and one of our co-authors, Thamar Solorio, works with communities in the Americas.” Solorio is senior director of graduate affairs and professor of natural language processing at MBZUAI.

The people who built the dataset, called annotators, were fluent speakers of local languages and had experience living in the relevant culture. Many photos in the dataset were taken from the web, but the researchers also asked annotators to include their own photos, which added greater diversity and depth to the cultural questions that could be asked. Including photos that weren’t on the web also had the potential to prevent a phenomenon known as data leakage, which occurs when a model has been exposed to data in training that is used in a benchmark, making its performance look better than it really is.

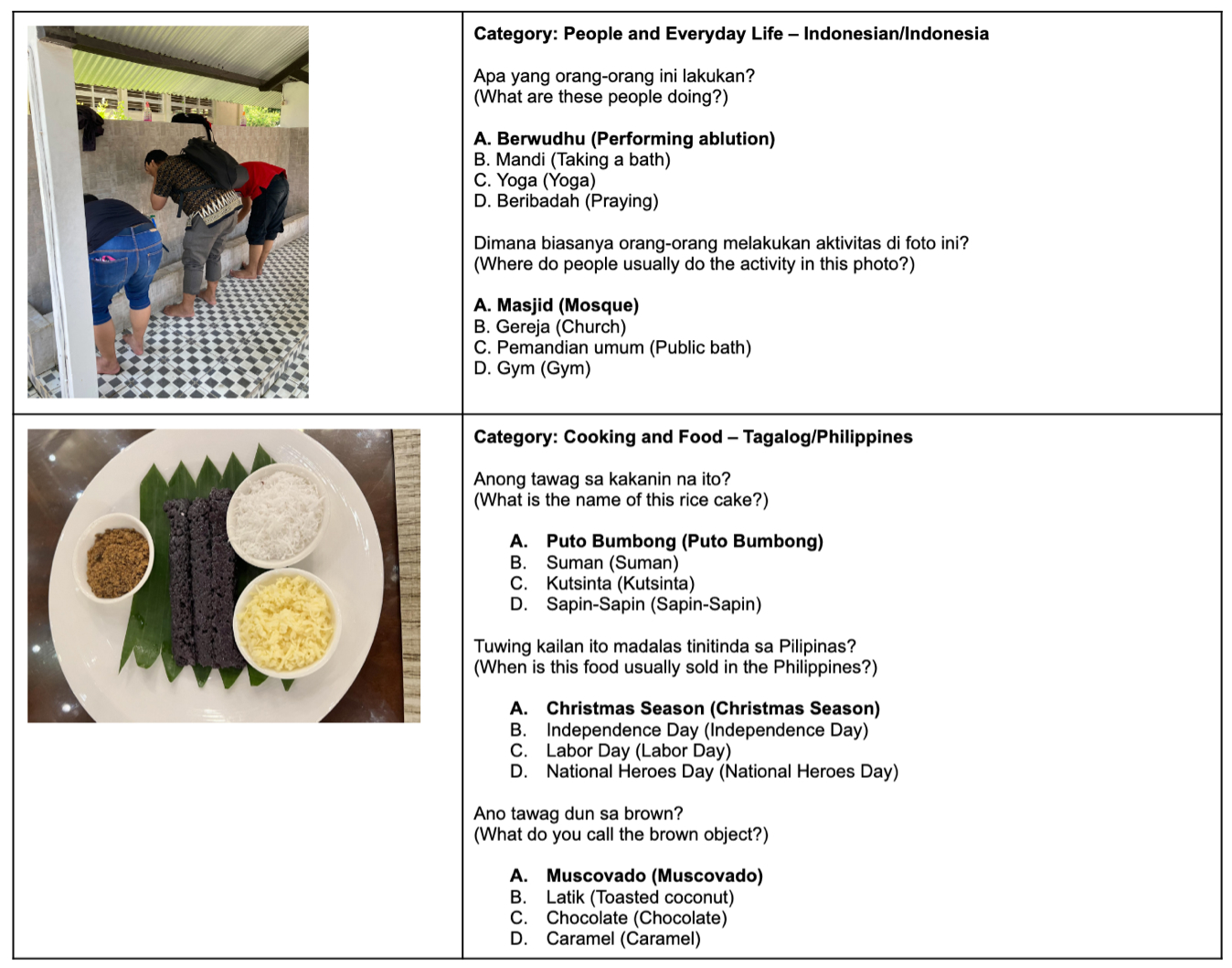

Examples of images, questions and answers in the CVQA dataset that is designed to test the cultural awareness of vision language models.

For each image, annotators wrote questions and answers that followed criteria of being culturally relevant and requiring the context of the image to be answered. Each question is accompanied by a correct answer and three incorrect ones. In addition to the multiple-choice format, the dataset also includes open-ended questions and answers, which provide a method for measuring models’ performance that is more like how they would be used by people. Every question-and-answer pair was checked and validated by at least one other annotator.

“The people we worked with annotated their data from their own countries,” Romero says. “We knew the data they were going to provide would be high quality because they know their own cultures and languages.”

A “significant challenge”

The researchers tested eight models, including open- and closed-sourced systems. OpenAI’s GPT-4o performed best, answering questions in local languages correctly 74% of the time and in English 75% of the time. Of the open-source models, LLaVA-1.5-7B performed best, answering questions in local languages correctly 35% of the time and nearly 50% of the time in English

The researchers also compared the performance of two models (LLaVA and InstructBLIP) on CVQA to other visual question answering datasets. The models performed worse on CVQA, which shows that the culturally specific questions are difficult for models.

“It was interesting that all the models generally showed similar behavior, even though the sizes of the models are different,” Romero says. “A small model could have poor performance in a specific language and a larger model would also perform poorly too.”

Model performance according to country-language pair. All the models showed similar behavior, even though they are different sizes.

While the two closed source models, Gemini-1.5-Flash and GPT-4o, were better than the open-source models, they still struggled with local languages. Interestingly, however, Gemini did better on local languages than it did in English.

The models were tested to generate answers in an open-ended setting, without multiple choice answers, as a model’s ability to come up with an answer in an open setting is more realistic for how a model would be used in the real world. LLaVA’s performance decreased substantially, from nearly 50% in the multiple-choice setting to 30% in the open-ended setting. “This shows that they are even worse when they were tested in a way that is more similar to a real-life scenario,” Romero says.

Overall, the researchers found that the benchmark poses a “significant challenge,” particularly for open-source LLMs, which typically didn’t exceed accuracy greater than 50%.

While visual question answering has improved dramatically over recent years, this benchmark dataset illuminates how much more progress is necessary.

For people, by people

In their study, Aji, Romero and their colleagues adopt an understanding of benchmarks as serving not necessarily as objective measures of culture but as proxies of culture, a concept proposed by Muhammad Farid Adilazuarda, a research assistant at MBZUAI.

They note that while CVQA is large, it is still not comprehensive, as it includes a small portion of the world’s more than 6,000 languages. But it is a necessary and important step in the effort to improve multimodal LLMs. “If there is no benchmark, we cannot measure progress,” Aji says.

Romero noted that there is a clear trend in AI research and development communities to develop models that have a better understanding of a wider range of cultures, not only because it is the right thing to do, but also because it can make models better. “Exposing AI to different cultures and languages will improve them,” he says. “It can make them more creative because they are exposed to more traditions and cultural knowledge.”

CVQA is an important step toward making AI models more inclusive and culturally aware and highlights gaps in these systems’ current capabilities. The broad collaboration that made the formation of the dataset possible is also a reminder that AI development can’t succeed in isolation and requires the expertise of the people it seeks to serve. “We were able to create a lot of high-quality data only with the help of people from these different communities,” Aji says. “When we’re building NLP technologies, we must involve the people who speak these languages.”

- natural language processing ,

- nlp ,

- neurips ,

- dataset ,

- llms ,

- language ,

- benchmarking ,

- cultural awareness ,

Related

MBZUAI report on AI for the global south launches at India AI Impact Summit

The report identifies 12 critical research questions to guide the next decade of inclusive and equitable AI.....

- Report ,

- social impact ,

- equitable ,

- global south ,

- AI4GS ,

- summit ,

- inclusion ,

MBZUAI research initiative receives $1 million funding from Google.org

The funding will help MBZUAI's Thamar Solorio develop inclusive, high-performance AI for the region’s diverse linguistic landscape.

Read MoreTeaching language models about Arab culture through cross-cultural transfer

New research from MBZUAI shows how small, targeted demonstrations can sharpen AI cultural reasoning across the Arab.....

Read More