Changing the landscape: A vision language model to revolutionize remote sensing

Tuesday, September 24, 2024

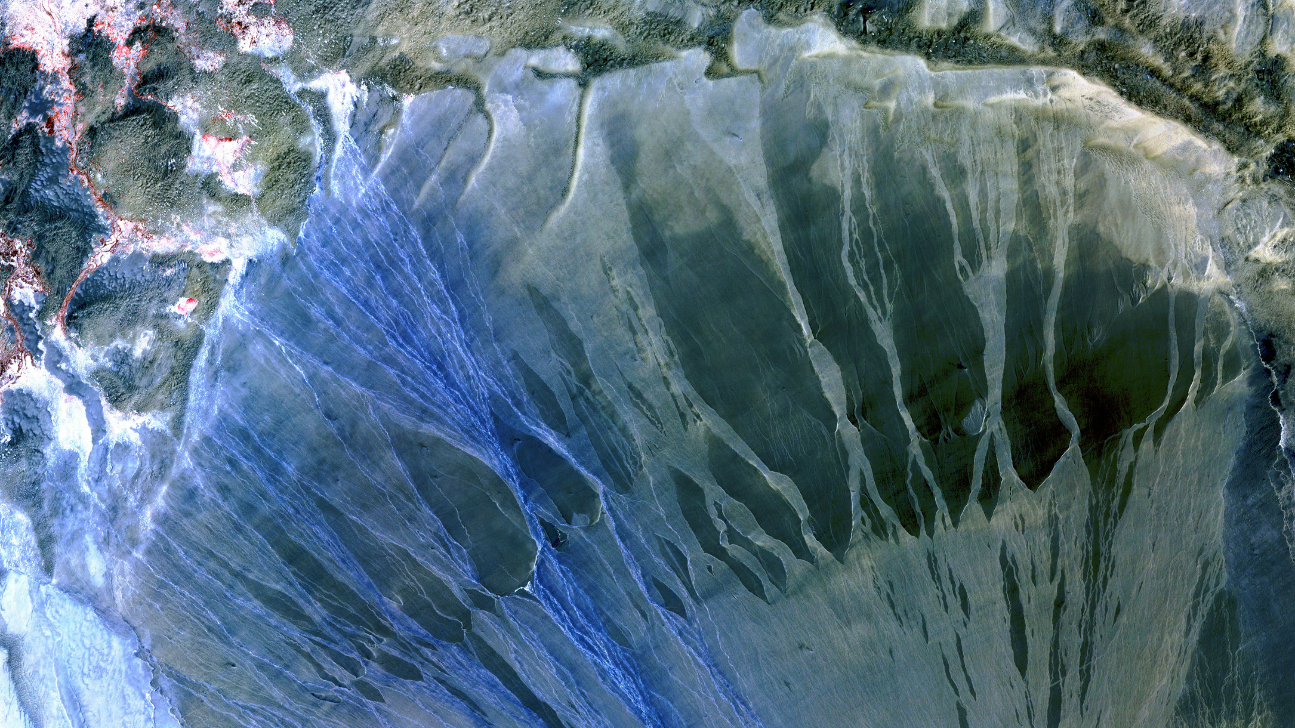

Over the last 10,000 years, one third of Earth’s forests have disappeared, yet half of this loss happened in the last century alone. By monitoring deforestation, researchers aim to curb the loss of biodiversity, protect endangered species and limit disruption to both ecosystems and the climate. A single satellite image, however, doesn’t offer the detailed, multi-faceted perspective needed to assess what has changed and how to mitigate the impact.

This is where GeoChat+, a vision-language model (VLM) for multi-modal, temporal remote sensing (RS) image analysis, comes into play. A model like GeoChat+ could monitor an area prone to deforestation and assess the impact on soil quality, air quality or erosion. It could then produce a detailed report that outlines high-risk regions and recommends where reforestation efforts would be most effective. Ultimately, it would help researchers analyze the past and predict the future with greater accuracy.

RS EXPERTISE

Salman Khan and his team at Mohamed bin Zayed University of Artificial Intelligence (MBZUAI) are developing GeoChat+ in partnership with IBM Research and will present their ongoing work at GITEX in October 2024. The previous model, GeoChat, was the first grounded large VLM specifically tailored to RS scenarios.

GeoChat combines satellite images with advanced language technology to help users understand and describe what the images contain. It excels at handling high-resolution RS imagery, which it uses to answer questions about specific locations, for example to monitor environmental changes or plan a disaster response. It offers a way to make sense of complex visual data using both images and text.

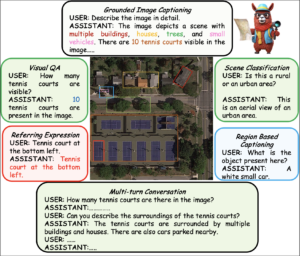

GeoChat already performed well at tasks such as image and region captioning, visual question answering, scene classification and object detection. The team aims to develop these capabilities further with GeoChat+ and to expand into 11 new tasks, including:

- Tree species classification: to monitor biodiversity, detect invasive species and protect endangered species

- Methane plume detection: to quantify emissions from industrial sites, landfills and agriculture to mitigate climate change, and monitor gas leaks to prevent environmental damage

- Land cover classification: to guide sustainable development and environmental conservation by identifying land uses (e.g., residential, commercial, agricultural) and land covers (e.g., forests, water bodies)

- Earthquake prediction: to monitor precursors to earthquakes like ground deformation and fault line activity to improve early warning systems, and assess the stability of critical infrastructure in seismically active regions

- Local climate zone classification: to mitigate the effects of urban heat islands, identify areas that are more vulnerable to the impacts of climate change and guide urban design for climate resilience

- Change in canopy height: to monitor deforestation and reforestation and assess habitat quality to maintain biodiversity

MULTIMODAL, TEMPORAL

The current GeoChat model can only offer users answers based on a single RGB image. GeoChat+ will use different modalities, captured by different satellites at different times to answer a greater range of queries. “With multi-modal images, GeoChat+ will be able to compare the same scene over a number of years,” says MBZUAI research scientist Akshay Dudhane, “or analyze the same environment to detect what has changed.”

GeoChat+ is set to revolutionize geographic data analysis by integrating a wide array of satellite and imaging sources. The platform will use data from established Earth observation missions such as Sentinel-1, Sentinel-2 and Landsat, along with high-resolution imagery from WorldView and Google Earth. In addition, the PlanetScope constellation, the Gaofen-2 (GF-2) optical and Gaofen-3 radar satellites, and the SPOT 6 and 7 satellites will provide detailed, multi-modal perspectives.

To further extend its capabilities, GeoChat+ will incorporate LiDAR data for detailed topographic analysis, NAIP aerial imagery, near-infrared (NIR) image data and SAR (synthetic aperture radar) imagery. This suite of data sources will include bi-temporal image pairs for tracking changes over time, enabling users to analyze landscapes, urban growth, environmental shifts and more in greater detail.

With such a diverse data set, GeoChat+ promises to provide comprehensive, geospatial insights for a range of applications, from environmental monitoring to disaster response and urban planning.

TRAINING DATA

The greatest obstacle in developing GeoChat+ is its high demand for data. “The major challenge we face is preparing millions of instruction pairs. A huge amount of time and manual and automated effort is required,” says Dudhane. “There is no clear-cut, large-scale dataset available for fine-tuning this kind of model, so, supported by IBM Research, we have to prepare it from scratch.” The team has prepared approximately 20 million question-answer pairs about the satellite data to train GeoChat+. After training, they will evaluate the model on approximately 30 datasets, benchmarking its performance against existing methods.
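To make the idea of an instruction pair more concrete, here is a minimal Python sketch of what one multi-temporal training record might look like. The schema, the field names (`task`, `images`, `modalities`, `acquisition_dates`), the file names and the sample question and answer are all hypothetical illustrations. The article does not describe the actual GeoChat+ data format. The sketch checks that every image has a matching modality and acquisition date, then serializes the records as JSON Lines, a format commonly used for fine-tuning data.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class InstructionPair:
    """Hypothetical schema for one training example (NOT the GeoChat+ format)."""

    task: str                     # illustrative task label, e.g. "change_detection"
    images: list[str]             # paths to co-registered scenes, oldest first
    modalities: list[str]         # one entry per image, e.g. "Sentinel-2 RGB"
    acquisition_dates: list[str]  # ISO dates, one per image
    question: str
    answer: str

    def validate(self) -> None:
        # Every image needs a matching modality and acquisition date.
        if not (len(self.images) == len(self.modalities) == len(self.acquisition_dates)):
            raise ValueError("images, modalities and dates must have equal length")
        # An instruction pair is useless without both halves.
        if not self.question.strip() or not self.answer.strip():
            raise ValueError("question and answer must be non-empty")


def to_jsonl(pairs: list[InstructionPair]) -> str:
    """Validate each pair and emit one JSON object per line."""
    lines = []
    for pair in pairs:
        pair.validate()
        lines.append(json.dumps(asdict(pair)))
    return "\n".join(lines)


# Hypothetical bi-temporal example; file names and answer text are placeholders.
example = InstructionPair(
    task="change_detection",
    images=["scene_2016.tif", "scene_2023.tif"],
    modalities=["Sentinel-2 RGB", "Sentinel-2 RGB"],
    acquisition_dates=["2016-06-01", "2023-06-01"],
    question="Has forest cover in this area decreased between the two images?",
    answer="Placeholder answer written by an annotator.",
)

if __name__ == "__main__":
    print(to_jsonl([example]))
```

Keeping images, modalities and dates as parallel lists lets the same record shape hold a single image, a bi-temporal pair or a longer time series.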

Because the project is an academic collaboration, the team will eventually make GeoChat+ available to researchers so they can work with RS images more effectively. As Khan says, “it has the potential to revolutionize the way RS data is analyzed, making it more accessible and actionable for applications in environmental monitoring, disaster management, agriculture, and urban planning.”