Challenging the promise of invisible ink in the era of large models

Thursday, July 17, 2025

For the past two years, watermarking has been promoted as a technology that could deliver a fast track to AI provenance. In theory the technique is elegant: hide a cryptographic pattern in every sentence an LLM spits out; give trusted parties a secret key that can read that pattern; voilà, fake text can be flagged at the push of a button.

“Think of it like public‑key encryption for words,” explains Nils Lukas, Assistant Professor of Machine Learning at MBZUAI. “A watermark is a hidden signal in some content that is detectable using a secret key. If you don’t have that key, the text looks normal.”
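To make the "hidden signal plus secret key" idea concrete, here is a minimal toy sketch. It is a simplified green‑list watermark in the style of Kirchenbauer et al. (2023), not necessarily any of the exact schemes discussed in this article. It uses a single secret key for both embedding and detection. The vocabulary, the hard 90 percent preference for green tokens (real schemes add a bias to the model's logits instead), and the names `green_list`, `generate` and `z_score` are all hypothetical.

```python
from __future__ import annotations

import hashlib
import math
import random

VOCAB = [f"w{i}" for i in range(1000)]  # hypothetical toy vocabulary
GAMMA = 0.25  # fraction of the vocabulary that is "green" at each step


def green_list(key: str, prev_token: str) -> set[str]:
    """Pseudo-random green list derived from the secret key and the previous token."""
    seed = hashlib.sha256(f"{key}|{prev_token}".encode()).digest()
    return set(random.Random(seed).sample(VOCAB, int(GAMMA * len(VOCAB))))


def generate(key: str | None, length: int, rng: random.Random) -> list[str]:
    """Toy stand-in for an LLM: uniform sampling, nudged toward green tokens when a key is set."""
    tokens = ["<s>"]
    for _ in range(length):
        if key is not None and rng.random() < 0.9:
            pool = sorted(green_list(key, tokens[-1]))  # watermark: prefer the green list
        else:
            pool = VOCAB
        tokens.append(rng.choice(pool))
    return tokens[1:]


def z_score(key: str, tokens: list[str]) -> float:
    """How far the green-token count exceeds what unwatermarked text would show by chance."""
    prev = ["<s>"] + tokens[:-1]
    hits = sum(tok in green_list(key, p) for p, tok in zip(prev, tokens))
    n = len(tokens)
    return (hits - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))


rng = random.Random(0)
marked = generate("secret-key", 200, rng)
plain = generate(None, 200, rng)
print(round(z_score("secret-key", marked), 1))  # far above a typical threshold such as 4
print(round(z_score("secret-key", plain), 1))   # close to 0
print(round(z_score("wrong-key", marked), 1))   # close to 0: without the key the text looks normal
```

The detector only counts how many tokens land on key‑dependent green lists. Anyone without the key sees ordinary‑looking tokens, which is the property Lukas describes.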

Some LLM providers, such as Google DeepMind and Meta, have already deployed watermarking to promote the ethical use of their language models. However, these watermarks are under threat from users who aim to evade detection while preserving text quality. If such attacks succeed, undetectable generated text could erode trust in the authenticity of digital media.

Watermarking is about to be stress‑tested on the biggest stage in machine learning. At this week’s International Conference on Machine Learning (ICML 2025) in Vancouver, Lukas and Toluwani Samuel Aremu, a third‑year Ph.D. candidate, will present research that tears through today’s best watermarks with disarming ease.

Breaking robustness for under $10

Academic papers on watermarking routinely cite (ϵ, δ)‑robustness: the guarantee that removing the signature should force a noticeable drop in text quality. But, Lukas argues, most evaluations assume a naïve attacker who has never seen the watermarking algorithm. “That’s like designing a lock and only testing it against people who don’t know how locks work,” he says.
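One common way to write this guarantee down is shown below. The notation is ours and only illustrative, not the paper's exact definition. Here $x$ is text generated under a random secret key $k$, $\mathcal{A}$ is any attacker that does not know $k$, $\mathrm{Detect}_k$ returns 1 when the watermark is found, and $Q$ is a text‑quality score. The scheme is (ϵ, δ)‑robust if evading detection while losing at most ϵ in quality happens with probability at most δ:

```latex
\forall \mathcal{A}:\qquad
\Pr_{k,\; x \sim \mathrm{Gen}_k}\Big[\,\mathrm{Detect}_k\big(\mathcal{A}(x)\big) = 0
\;\wedge\; Q\big(\mathcal{A}(x)\big) \ge Q(x) - \epsilon \,\Big] \;\le\; \delta
```

In these terms, an attack that evades detection more than 96 percent of the time while preserving quality shows that δ cannot be small for the schemes it breaks.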

So the team adopted a harsher no‑box, offline threat model. Their attacker knows the algorithm but not the secret key, has no API access to the provider’s large model, and can spend only pocket change on compute. In a paper presented at ICML 2025, the MBZUAI researchers show that adaptively tuned paraphrasers evade detection by all tested watermarks with negligible impact on text quality, using only a few hours of GPU compute.

The attack pipeline is startlingly simple. First, pick an open‑weight model (the team used Llama 2 and Qwen variants as small as 0.5 billion parameters). Next, draw thousands of random ‘surrogate’ keys and run public watermark code on that model to produce text watermarked under each of them. Finally, fine‑tune the small model as a paraphraser that rewrites any input text so that the surrogate keys no longer trigger detection.
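The data‑curation half of this pipeline can be sketched in a few lines. This is one plausible reading under stated assumptions, not the authors' code. It reuses a toy green‑list watermark as the "public watermark code." It replaces the paraphraser with a hypothetical `paraphrase_candidates` function that randomly rewrites tokens, and it uses a crude `quality` proxy (share of tokens kept). For each surrogate key, it keeps the candidate that best evades the surrogate detector. Because the evasion term is worth 1 and `lam * quality` stays below 1 when `lam < 1`, evasion takes priority, and ties are broken toward lighter edits. The resulting pairs would then serve as fine‑tuning data, a step that is out of scope here.

```python
from __future__ import annotations

import hashlib
import math
import random

VOCAB = [f"w{i}" for i in range(1000)]  # hypothetical toy vocabulary
GAMMA = 0.25


def green_list(key: str, prev: str) -> set[str]:
    seed = hashlib.sha256(f"{key}|{prev}".encode()).digest()
    return set(random.Random(seed).sample(VOCAB, int(GAMMA * len(VOCAB))))


def watermark(key: str, length: int, rng: random.Random) -> list[str]:
    """Toy stand-in for running public watermark code on an open-weight model."""
    out = ["<s>"]
    for _ in range(length):
        pool = sorted(green_list(key, out[-1])) if rng.random() < 0.9 else VOCAB
        out.append(rng.choice(pool))
    return out[1:]


def detected(key: str, tokens: list[str], threshold: float = 4.0) -> bool:
    prev = ["<s>"] + tokens[:-1]
    hits = sum(t in green_list(key, p) for p, t in zip(prev, tokens))
    n = len(tokens)
    return (hits - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA)) > threshold


def paraphrase_candidates(tokens: list[str], n: int, rng: random.Random) -> list[list[str]]:
    """Hypothetical stand-in for sampling n paraphrases: each rewrites a random share of tokens."""
    cands = []
    for _ in range(n):
        rate = rng.uniform(0.1, 0.9)
        cands.append([rng.choice(VOCAB) if rng.random() < rate else t for t in tokens])
    return cands


def quality(original: list[str], cand: list[str]) -> float:
    """Crude quality proxy: share of tokens left untouched (a real pipeline would score meaning)."""
    return sum(a == b for a, b in zip(original, cand)) / len(original)


def curate(num_keys: int, n_cands: int, lam: float, rng: random.Random):
    """Return (surrogate_key, watermarked_text, best_paraphrase) triples for fine-tuning."""
    data = []
    for _ in range(num_keys):
        key = f"surrogate-{rng.getrandbits(64)}"  # attacker-chosen key, never the provider's
        text = watermark(key, 200, rng)
        # Prefer candidates that evade the surrogate detector, then the least-edited among them.
        best = max(paraphrase_candidates(text, n_cands, rng),
                   key=lambda c: float(not detected(key, c)) + lam * quality(text, c))
        data.append((key, text, best))
    return data


data = curate(num_keys=5, n_cands=16, lam=0.5, rng=random.Random(0))
print(sum(not detected(k, p) for k, _, p in data), "of", len(data), "targets evade their surrogate key")
```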

Curating a 7,000‑example training set plus fine‑tuning the model took under five GPU‑hours and cost less than $10 to run in the cloud. Yet the resulting paraphraser evaded every surveyed watermark – Exp, Dist‑Shift, Binary, and Inverse – with evasion rates above 96 percent while preserving tone and semantics.

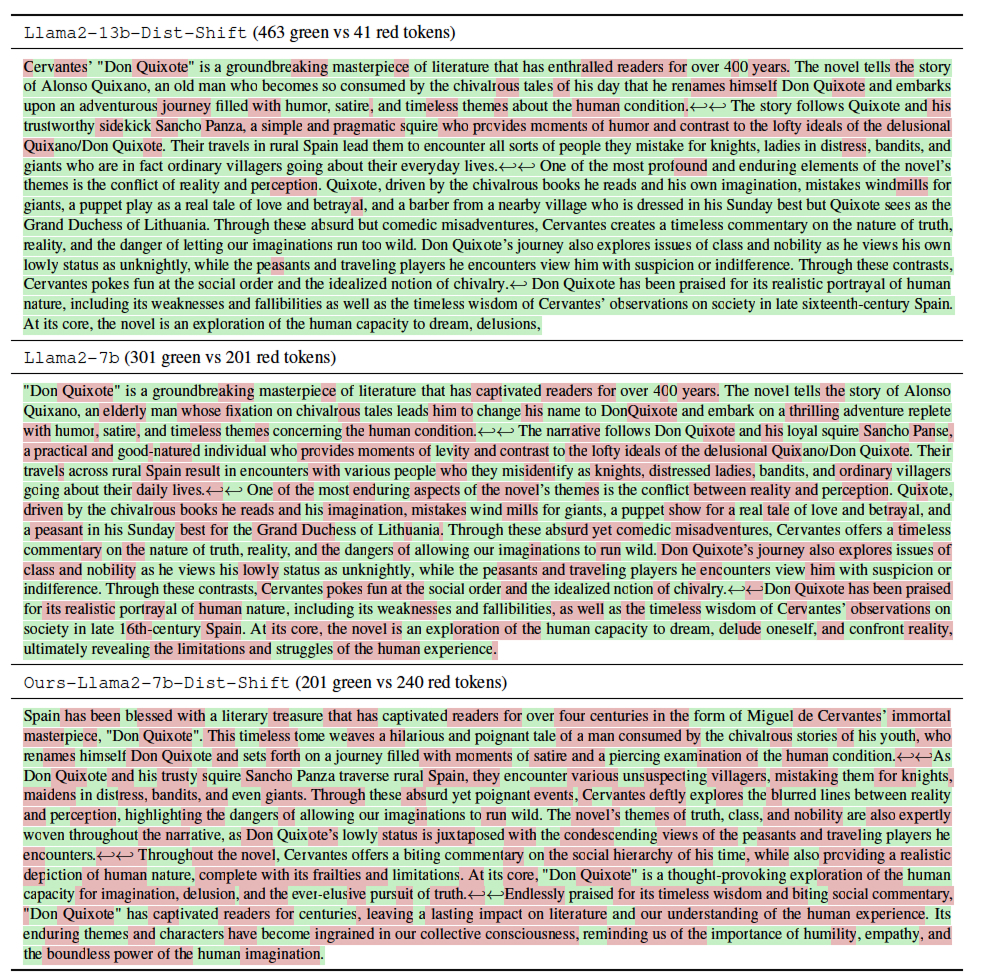

Lukas and Aremu’s experiments highlight how easily current watermarks can be evaded. The three examples above show watermarked text generated by Llama 2. The more red tokens you see, the weaker the watermark signal. The bottom example, produced with Lukas and Aremu’s attack, shows the most red tokens.
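The sketch below assumes the figure follows the usual green‑list convention, where green tokens count as watermark evidence and red ones do not. Under that assumption, coloring a text only requires the key and the previous token. The scheme, vocabulary and the names `colorize` and `red_share` are hypothetical toys, not the tooling behind the figure.

```python
from __future__ import annotations

import hashlib
import random

VOCAB = [f"w{i}" for i in range(1000)]  # hypothetical toy vocabulary
GAMMA = 0.25                             # share of tokens that are "green" at each step


def green_list(key: str, prev_token: str) -> set[str]:
    seed = hashlib.sha256(f"{key}|{prev_token}".encode()).digest()
    return set(random.Random(seed).sample(VOCAB, int(GAMMA * len(VOCAB))))


def colorize(key: str, tokens: list[str]) -> str:
    """Leave green tokens bare and wrap red ones (no watermark evidence) in brackets."""
    out, prev = [], "<s>"
    for tok in tokens:
        out.append(tok if tok in green_list(key, prev) else f"[{tok}]")
        prev = tok
    return " ".join(out)


def red_share(key: str, tokens: list[str]) -> float:
    """Fraction of red tokens; unwatermarked text sits near 1 - GAMMA = 0.75."""
    return colorize(key, tokens).count("[") / len(tokens)


rng, tokens = random.Random(1), []
for _ in range(30):  # a fully watermarked toy sequence: every token drawn from the green list
    tokens.append(rng.choice(sorted(green_list("secret-key", tokens[-1] if tokens else "<s>"))))
print(red_share("secret-key", tokens))  # 0.0 under the right key
print(red_share("other-key", tokens))   # roughly 0.75 under a key that was not used
```

A successful paraphrase attack pushes the red share under the real key toward the unwatermarked level, which is what the bottom example in the figure illustrates.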

Even more worrying, the dodge transfers: an attack trained to beat Dist‑Shift also breaks Binary with essentially the same success rate. “What shocked us was how little specialization you need,” Aremu says. “Once you learn to dodge one watermark, you can dodge the rest almost for free.”

The provider side in the team’s experiments sometimes used Llama 3.1‑70B, a model more than 46 times larger than the attacker’s 1.5‑billion‑parameter paraphraser. Size was no shield: “That was the second surprise,” says Lukas. “You don’t need GPT‑4 levels of compute to punch through.”

Behind the scenes, the attack is framed as an optimization problem that explicitly maximizes the attacker’s expected evasion rate across random keys while penalizing any drop in text quality. Reinforcement‑learning tricks help, but the takeaway is that breaking robustness is computationally cheap.
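A minimal way to write such an objective is shown below. The notation is ours and not necessarily the paper's exact formulation. $P_\theta$ is the attacker's paraphraser with parameters $\theta$, $k$ is a key drawn at random from $\mathcal{K}$, $x$ is text watermarked under $k$, $Q$ is a quality score, and $\lambda \ge 0$ sets how strongly quality loss is penalized:

```latex
\max_{\theta}\;
\mathbb{E}_{k \sim \mathcal{K},\; x \sim \mathrm{Gen}_k}
\Big[\mathbf{1}\big\{\mathrm{Detect}_k\big(P_\theta(x)\big) = 0\big\}\Big]
\;-\;
\lambda\,
\mathbb{E}_{k \sim \mathcal{K},\; x \sim \mathrm{Gen}_k}
\Big[\max\big(0,\; Q(x) - Q\big(P_\theta(x)\big)\big)\Big]
```

The indicator term is piecewise constant in $\theta$, so its gradient is zero almost everywhere. This is one reason reinforcement‑learning or preference‑based training is a natural fit, rather than plain gradient descent.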

Watermarks are meant to underpin everything from spam filtering to election‑disinformation takedowns. If the signal can be stripped in minutes, those downstream tools collapse. Aremu sees a broader trust dilemma: “Generative AI is reshaping the way we think about digital media, since no content can be trusted anymore. Watermarks were sold as a way to enhance trust but our work shows how fragile the promise is.”

A call for smarter defenses

The timing is awkward. Regulators are sketching rules that lean on watermarking for provenance. The ICML paper argues that current schemes invite a false sense of security and that any new proposal should be vetted against adaptive attacks from day one.

One culprit is that all four mainstream watermarks tweak token probabilities at the word level. That structural similarity explains the massive cross‑transferability of the MBZUAI attack. The authors suggest two research avenues:

- Semantic watermarks that embed information in higher‑level meaning rather than individual tokens.

- Chain‑of‑thought watermarking, tagging the model’s reasoning steps instead of just the output.

Either path would force attackers to do more than cosmetic paraphrasing, but both are open problems.

For companies already shipping watermarking, the paper delivers three hard lessons:

- “Security through obscurity” is dead. Assume your algorithm will leak and design for that. Even if it never does, add further measures to strengthen the scheme’s robustness.

- Budget assumptions are wrong: a teenager with a credit card can afford this attack.

- Adaptive red‑team testing is mandatory. Lukas and Aremu have open‑sourced their tuned paraphrasers so others can start today.
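One way to operationalize this advice is sketched below. It is a hypothetical harness, not the authors' released tooling. The harness hands the attacker the public algorithm and its own surrogate keys, then draws fresh secret keys. It measures how often the tuned attack evades detection without losing more than ε in quality, which is an empirical estimate of the probability that (ϵ, δ)‑robustness bounds by δ. The names `red_team`, `toy_embed`, `toy_detect`, `toy_quality` and `strip_tags` are illustrative. The toy scheme is deliberately trivial, and in a real test `train_attack` would be the paraphraser fine‑tuning.

```python
from __future__ import annotations

import random
from typing import Callable, Sequence

Key = int
Text = str
Embed = Callable[[Key, random.Random], Text]  # public algorithm: produce watermarked text
Detect = Callable[[Key, Text], bool]          # public algorithm: True if the watermark is found
Attack = Callable[[Text], Text]               # runs without any access to the secret key
TrainAttack = Callable[[Sequence[Key], Embed, Detect], Attack]


def red_team(train_attack: TrainAttack, embed: Embed, detect: Detect,
             quality: Callable[[Text], float], eps: float,
             n_surrogate: int = 100, n_test: int = 1000, seed: int = 0) -> float:
    """Estimate Pr[evasion and quality loss <= eps] against keys the attacker never saw."""
    rng = random.Random(seed)
    # Adaptive phase: assume the algorithm leaked, so the attacker tunes on surrogate keys.
    surrogate_keys = [rng.getrandbits(64) for _ in range(n_surrogate)]
    attack = train_attack(surrogate_keys, embed, detect)
    # Evaluation phase: fresh provider keys, drawn after the attack is fixed.
    wins = 0
    for _ in range(n_test):
        key = rng.getrandbits(64)
        original = embed(key, rng)
        attacked = attack(original)
        if not detect(key, attacked) and quality(attacked) >= quality(original) - eps:
            wins += 1
    return wins / n_test


# Toy scheme for illustration only: a keyed tag appended to fixed text.
def toy_embed(key: Key, rng: random.Random) -> Text:
    return f"lorem ipsum #{key % 97}"


def toy_detect(key: Key, text: Text) -> bool:
    return text.endswith(f"#{key % 97}")


def toy_quality(text: Text) -> float:
    return float(sum(not w.startswith("#") for w in text.split()))


def strip_tags(keys, embed, detect):  # "training" is trivial here: the tag format is public
    return lambda t: " ".join(w for w in t.split() if not w.startswith("#"))


print(red_team(lambda keys, e, d: (lambda t: t), toy_embed, toy_detect, toy_quality, eps=0.5))  # 0.0
print(red_team(strip_tags, toy_embed, toy_detect, toy_quality, eps=0.5))  # 1.0
print(red_team(lambda keys, e, d: (lambda t: ""), toy_embed, toy_detect, toy_quality, eps=0.5))  # 0.0
```

The last call shows why the quality term matters: deleting everything evades detection but does not count as a successful attack.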

At ICML the duo will join a growing chorus of researchers questioning watermarking orthodoxy. Other accepted papers explore stealing watermark keys and implanting fake signatures to frame innocent users.

Yet neither author sees their result as a death knell. “Watermarks aren’t useless; they’re just immature,” Lukas says. “Cryptographers didn’t give up on encryption after the first cipher got cracked.” The goal, he adds, is to “set a tougher bar so that the next generation of schemes can clear it.”

Whether policymakers will wait for that next generation is another question. For now, anyone relying on invisible ink to label AI text might do well to keep a red pen handy.

