A “divide-and-conquer” approach to learning from demonstration

Friday, October 24, 2025

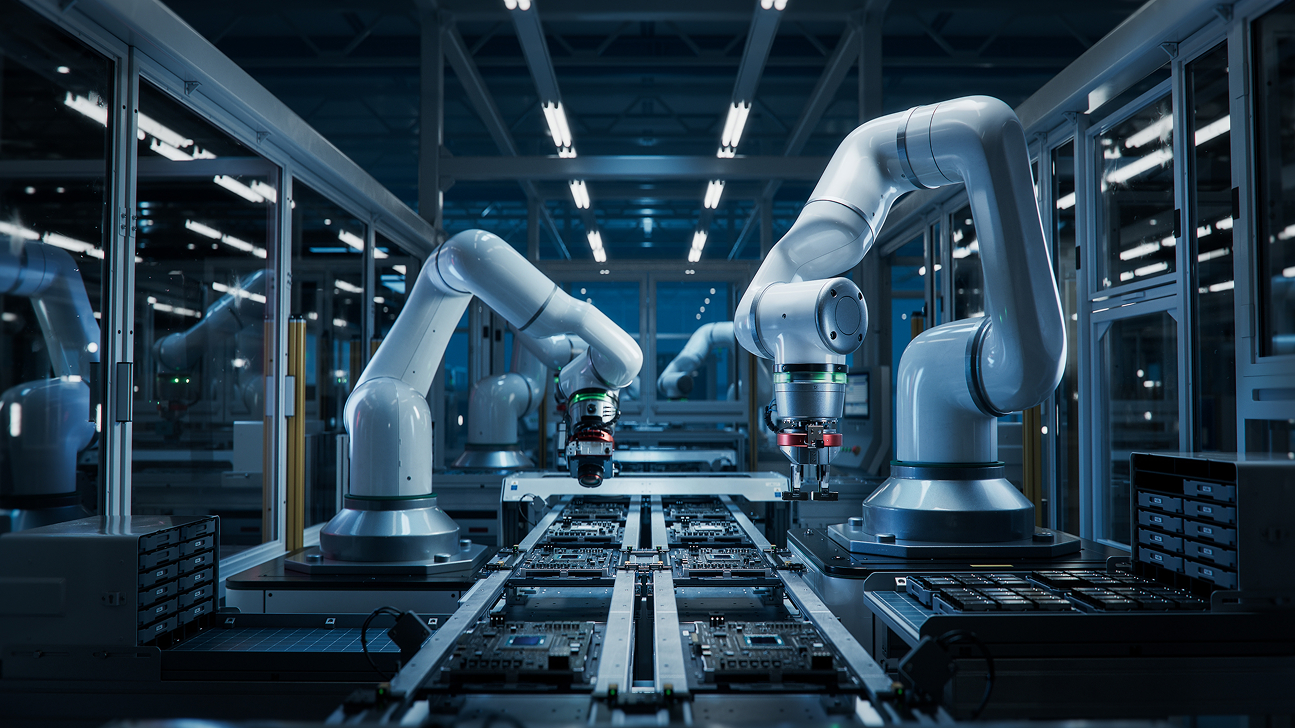

Imagine a future in which robots work alongside us at home and in the workplace. In this world, when you want to teach a robot a new task, you wouldn’t program it directly; you would simply demonstrate the task so that it can learn from your actions. The concept is simple, but making it work is a difficult problem that researchers are still trying to solve.

In learning from demonstration, the robot observes the instructor’s movements and encodes them in what’s known as a dynamical system. The challenge is that the calculations required to turn this encoding into robot movement are highly complex, and they can become computationally infeasible as the robot’s number of joints, or degrees of freedom, increases. Researchers have developed approaches that simplify the math, but these shortcuts can lead to unexpected and potentially dangerous movements.
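
To make the idea of “encoding” a motion in a dynamical system concrete, here is a minimal sketch. It assumes the simplest possible case, a hypothetical linear system x_dot = -gain * (x - x_star) that pulls the robot’s state x (for example, its joint angles) toward a target x_star; the names `f`, `rollout`, and `gain` are illustrative and are not the learned model from the study. Integrating the velocity field step by step is what turns the encoded model into an actual motion.

```python
# Minimal sketch, assuming a hypothetical linear dynamical system
# x_dot = -gain * (x - x_star). The study learns a far richer model;
# this only shows how a velocity field is rolled out into a motion.

def f(x, x_star, gain=2.0):
    """Velocity command for state x: pulls every coordinate toward x_star."""
    return [-gain * (xi - si) for xi, si in zip(x, x_star)]

def rollout(x0, x_star, dt=0.01, steps=500):
    """Integrate x_dot = f(x) with forward Euler and return the visited states."""
    x = list(x0)
    path = [tuple(x)]
    for _ in range(steps):
        v = f(x, x_star)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
        path.append(tuple(x))
    return path

path = rollout(x0=[0.0, 1.0, -0.5], x_star=[0.3, 0.2, 0.0])
print(path[-1])  # each coordinate ends within about 1e-4 of the target
```

Because every state has a well-defined velocity, the robot can start anywhere and still be driven toward the target, which is why dynamical systems are a popular way to encode demonstrations.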

A study by researchers at MBZUAI and other institutions proposes a technique that could help address an important problem in learning from demonstration. The team’s approach uses what they call a “divide-and-conquer” strategy to break a dynamical system into subsystems. Each subsystem is modeled as a linear parameter-varying dynamical system and solved independently, and the individual solutions are then combined into an overall solution.
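
A linear parameter-varying (LPV) dynamical system is commonly written as a state-dependent blend of linear systems, x_dot = sum_k gamma_k(x) * A_k (x - x_star), where the weights gamma_k(x) are non-negative and sum to 1. The sketch below shows that form and a divide-and-conquer assembly in which each subsystem owns a subset of joints and its own LPV model. The softmax weighting, the 4-joint arm, the 2-joint split, and every matrix value are hypothetical choices for illustration; the study’s actual partitioning and weighting are not described here.

```python
import math

def scheduling_weights(x, centers, width=0.5):
    """Hypothetical gamma_k(x): softmax of negative squared distances to
    per-mode centers, so the weights are non-negative and sum to 1."""
    logits = [-sum((xi - ci) ** 2 for xi, ci in zip(x, c)) / (2 * width ** 2)
              for c in centers]
    m = max(logits)  # subtract the max before exponentiating, for numerical stability
    scores = [math.exp(z - m) for z in logits]
    total = sum(scores)
    return [s / total for s in scores]

def lpv_velocity(x, x_star, matrices, centers):
    """One subsystem: x_dot = sum_k gamma_k(x) * A_k (x - x_star)."""
    gammas = scheduling_weights(x, centers)
    e = [xi - si for xi, si in zip(x, x_star)]
    v = [0.0] * len(x)
    for g, A in zip(gammas, matrices):
        for r, row in enumerate(A):
            v[r] += g * sum(a * ej for a, ej in zip(row, e))
    return v

def combined_velocity(x, subsystems):
    """Divide and conquer: each subsystem owns some joint indices and its own
    LPV model; the full velocity vector is assembled from the pieces."""
    v = [0.0] * len(x)
    for sub in subsystems:
        idx = sub["joints"]
        local = lpv_velocity([x[j] for j in idx], sub["x_star"], sub["A"], sub["centers"])
        for j, vj in zip(idx, local):
            v[j] = vj
    return v

# Hypothetical 4-joint arm split into two 2-joint subsystems with 2 modes each.
subsystems = [
    {"joints": [0, 1], "x_star": [0.0, 0.5],
     "A": [[[-1.0, 0.5], [-0.5, -2.0]], [[-3.0, 0.0], [0.0, -1.0]]],
     "centers": [[0.5, 0.5], [-0.5, 0.0]]},
    {"joints": [2, 3], "x_star": [1.0, -0.2],
     "A": [[[-2.0, 0.0], [0.0, -2.0]], [[-1.0, 1.0], [0.0, -1.0]]],
     "centers": [[1.0, 0.0], [0.0, -0.5]]},
]
print(combined_velocity([0.2, 0.1, 0.4, 0.3], subsystems))
```

Each subsystem only ever sees its own joints, so its matrices A_k can be fitted in a small, separate problem.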

“We solve the optimization problem constrained by the stability conditions of each subsystem alone,” explains Abdalla Swikir, Assistant Professor of Robotics and co-author of the study. “If each subsystem’s optimization problem is solved and proven stable on its own, then the entire system will also be stable, as long as certain coupling or consistency conditions between the subsystems are mathematically satisfied.”
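
The logic of Swikir’s statement can be illustrated in the simplest setting, where the subsystems are completely decoupled. In the sketch below, a 2-joint subsystem with modes A_k counts as stable if there is a symmetric positive definite matrix P with A_k^T P + P A_k negative definite for every mode; then V = e^T P e decreases for any blend of the modes. With no coupling, the sum of the subsystem functions certifies the whole system. The matrices are hypothetical, the 2x2 size is chosen so Sylvester’s criterion can be written by hand, and the study’s actual coupling and consistency conditions are not modeled.

```python
def matmul(A, B):
    """Product of two small matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(M):
    return [list(row) for row in zip(*M)]

def is_pos_def_2x2(S):
    """Sylvester's criterion for a symmetric 2x2 matrix."""
    return S[0][0] > 0 and S[0][0] * S[1][1] - S[0][1] * S[1][0] > 0

def subsystem_is_stable(P, modes):
    """True if V(e) = e^T P e certifies x_dot = sum_k gamma_k A_k e for any
    weights gamma_k >= 0 summing to 1: P symmetric positive definite and
    A_k^T P + P A_k negative definite for every mode k."""
    if P[0][1] != P[1][0] or not is_pos_def_2x2(P):
        return False
    for A in modes:
        AtP, PA = matmul(transpose(A), P), matmul(P, A)
        # A^T P + P A is negative definite iff its negation is positive definite
        neg_S = [[-(AtP[i][j] + PA[i][j]) for j in range(2)] for i in range(2)]
        if not is_pos_def_2x2(neg_S):
            return False
    return True

def decoupled_system_is_stable(subsystems):
    """With no coupling terms, V = sum_i V_i certifies the whole system
    whenever every subsystem passes its own check."""
    return all(subsystem_is_stable(P, modes) for P, modes in subsystems)

# Hypothetical subsystems: (P, [A_1, A_2, ...])
sub_a = ([[1.0, 0.0], [0.0, 1.0]], [[[-1.0, 0.5], [-0.5, -2.0]], [[-3.0, 0.0], [0.0, -1.0]]])
sub_b = ([[2.0, 0.0], [0.0, 1.0]], [[[-1.0, 1.0], [0.0, -1.0]]])
sub_bad = ([[1.0, 0.0], [0.0, 1.0]], [[[0.1, 0.0], [0.0, -1.0]]])

print(decoupled_system_is_stable([sub_a, sub_b]))    # True
print(decoupled_system_is_stable([sub_a, sub_bad]))  # False
```

Each check involves only one subsystem’s matrices, which is what lets the stability-constrained problems be solved separately.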

The researchers will present their findings at the 2025 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2025) this month in Hangzhou, China.

Shreenabh Agrawal, Hugo T.M. Kussaba, Lingyun Chen, Allen Emmanuel Binny, Pushpak Jagtap, and Sami Haddadin are co-authors of the study.

How learning from demonstration works

Demonstrations can be encoded in either task space, which corresponds to the movement of the robot in the world, or in joint space, which corresponds to the movement of the robot’s joints.

Task-space learning is useful in some situations, but it can lead to unexpected movements: the robot can learn to reach a point in space by paths that differ from the ones that were demonstrated.
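
Why can a task-space model lead to unexpected motion? Even a simple arm can usually reach the same point in more than one way. The sketch below uses a hypothetical planar two-link arm (link lengths 0.4 m and 0.3 m, chosen for illustration) and standard two-link inverse kinematics to find two different joint configurations that put the tip at the same position.

```python
import math

L1, L2 = 0.4, 0.3  # hypothetical link lengths (metres)

def forward_kinematics(q1, q2):
    """Tip position (x, y) and tip orientation phi of a planar two-link arm."""
    x = L1 * math.cos(q1) + L2 * math.cos(q1 + q2)
    y = L1 * math.sin(q1) + L2 * math.sin(q1 + q2)
    return x, y, q1 + q2

def inverse_kinematics(x, y, elbow_sign):
    """One of the two joint solutions placing the tip at (x, y);
    elbow_sign = +1 or -1 picks which way the elbow bends."""
    c2 = (x * x + y * y - L1 ** 2 - L2 ** 2) / (2 * L1 * L2)
    q2 = elbow_sign * math.acos(max(-1.0, min(1.0, c2)))  # clamp against rounding
    q1 = math.atan2(y, x) - math.atan2(L2 * math.sin(q2), L1 + L2 * math.cos(q2))
    return q1, q2

for sign in (+1, -1):
    q = inverse_kinematics(0.5, 0.2, sign)
    print(sign, [round(v, 3) for v in q], [round(v, 3) for v in forward_kinematics(*q)])
```

Both solutions place the tip at (0.5, 0.2), but the elbow bends the opposite way and the tip orientation phi differs. A model that records only tip position cannot tell these apart, while a joint-space demonstration pins down which configuration, and which orientation, was actually shown.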

Joint-space learning accurately captures how the robot’s joints interact, which helps it navigate complex environments. Another benefit is that joint-space learning, unlike task-space learning, captures variations in orientation, meaning the way the robot’s end effector is rotated in space.

But joint-space learning also has costs. The data is high-dimensional, which leads to complex optimization problems that sometimes can’t be solved in practice.
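
A rough way to see how dimensionality inflates the problem is to count unknowns. The sketch below assumes an LPV model with K modes, each with a full n x n matrix A_k, plus one symmetric n x n Lyapunov matrix P. It compares one coupled 7-joint problem against a split into blocks of 2, 2, 2, and 1 joints. K = 5, the 7-joint arm, and the block sizes are all hypothetical, and counting unknowns is only a proxy for difficulty, not the study’s own analysis.

```python
def lpv_unknowns(n, K):
    """Unknowns in one LPV model of dimension n with K modes, assuming K full
    n x n matrices A_k plus one symmetric n x n Lyapunov matrix P."""
    return K * n * n + n * (n + 1) // 2

def split_unknowns(block_sizes, K):
    """Unknowns per subproblem when the joints are split into independent blocks."""
    return [lpv_unknowns(b, K) for b in block_sizes]

K = 5                                   # hypothetical number of modes
print(lpv_unknowns(7, K))               # 273 unknowns in one coupled 7-D problem
print(split_unknowns([2, 2, 2, 1], K))  # [23, 23, 23, 6]
```

Under these assumptions the largest subproblem has 23 unknowns instead of 273, and its K matrix-inequality constraints are 2 x 2 instead of 7 x 7.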

Swikir and his colleagues’ approach uses joint-space learning, but instead of solving the whole joint-space learning problem at once, it breaks the problem into smaller units, solves them, and, under some mild conditions, combines them into one solution. The stability of the learned dynamical system that encodes the demonstrated trajectories is certified using what’s known as a Lyapunov function: an energy-like quantity that is guaranteed to decrease as the robot moves, which ensures the motion settles at its intended target.
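
The defining property of a Lyapunov function can be checked numerically. The sketch below assumes a hypothetical two-mode LPV system in 2-D, a sigmoid blend of the modes, and the candidate V(x) = (x - x_star)^T P (x - x_star) with P the identity. It confirms at random sample points that V is positive and that its rate of change along the motion, dV/dt = 2 (x - x_star)^T P f(x), is negative. Sampling is only a sanity check; a real certificate comes from proving the condition everywhere, for example through matrix inequalities on the A_k.

```python
import math
import random

X_STAR = (0.0, 0.0)                      # hypothetical target joint configuration
P = ((1.0, 0.0), (0.0, 1.0))             # candidate Lyapunov matrix
MODES = (((-1.0, 0.5), (-0.5, -2.0)),    # hypothetical learned A_1
         ((-3.0, 0.0), (0.0, -1.0)))     # hypothetical learned A_2

def error(x):
    return (x[0] - X_STAR[0], x[1] - X_STAR[1])

def gammas(x):
    """Hypothetical scheduling: a sigmoid blend of the two modes."""
    g = 1.0 / (1.0 + math.exp(-x[0]))
    return (g, 1.0 - g)

def f(x):
    """LPV dynamics x_dot = sum_k gamma_k(x) A_k (x - x_star)."""
    e = error(x)
    v = [0.0, 0.0]
    for g, A in zip(gammas(x), MODES):
        for r in range(2):
            v[r] += g * (A[r][0] * e[0] + A[r][1] * e[1])
    return v

def V(x):
    """Lyapunov candidate V(x) = (x - x_star)^T P (x - x_star)."""
    e = error(x)
    return sum(e[i] * P[i][j] * e[j] for i in range(2) for j in range(2))

def V_dot(x):
    """Rate of change of V along the flow, 2 (x - x_star)^T P f(x) for symmetric P."""
    e, v = error(x), f(x)
    return 2.0 * sum(e[i] * P[i][j] * v[j] for i in range(2) for j in range(2))

rng = random.Random(0)
samples = [(rng.uniform(-2, 2), rng.uniform(-2, 2)) for _ in range(1000)]
print(all(V(x) > 0 and V_dot(x) < 0 for x in samples))  # True
```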

Breaking the optimization into smaller problems with fewer variables and constraints lets the system learn in a way that wasn’t previously possible.

“We went from non-convergence to being able to solve these problems in seconds,” Swikir says. “It’s fast and you can use it on machines with limited computational abilities.”

Learning from you

The researchers tested their approach on a robotic arm in two learning-from-demonstration tasks.

In the first experiment, the robot had to follow a motion that involved changes in both position and orientation along a nonlinear path. The researchers found that the robot’s movements closely matched the demonstration.

In a second experiment, they tested the robot’s ability to learn from a demonstration of removing an object from a box without colliding with the box’s walls. Again, the robot learned the demonstrated movements in joint space in a way that hadn’t been achieved before.

Safety in a robotic future

Swikir is interested in using formal control methods to make robots safe and functional in real-world environments. While statistical methods have driven dramatic advances over the past decade in fields like natural language processing and computer vision, he says formal techniques will always be important in robotics because they can guarantee safety in ways that statistical methods can’t.

“Formal guarantees must play a role in robotic applications, especially when these systems interact with people and the environment,” he says.

After all, the consequences of a language model’s hallucination are quite different from those of a robot’s mistake.
