Llama 2: a global release of local importance

Monday, August 14, 2023

Since its inception, MBZUAI has championed open-source AI solutions. Its participation in Meta’s recent release of Llama 2 is only the latest example of the university’s commitment to actively developing and testing open-source, composable, and scalable AI resources for industry, academia, and beyond.

The university recently joined around 50 organizations including IBM, AWS, Microsoft, and NVIDIA as global partners for Meta’s release of Llama 2. Prior to releasing the model, Meta called on its partners to provide early feedback and commit to helping build the software as a global community. The university’s involvement in the initiative promises to bring about a new generation of UAE-born AI advancements built around the Llama 2 ecosystem.

MBZUAI is actively working alongside local and international organizations on large language models – from developing a sustainable LLM named Vicuna, which was trained on Meta’s LLaMA 1, to strengthening infrastructure and establishing LLM-chat evaluation platforms.

In mid-July, MBZUAI President and University Professor Eric Xing joined dozens of top experts from industry and academia to publicly support the launch of Llama 2 alongside the UAE’s H.E. Omar Sultan Al Olama, Minister of State for Artificial Intelligence, and Saeed Aldhaheri, Director of the Center for Futures Studies at the University of Dubai. The signatories backed the following statement, which also included a responsible use guide:

“We support an open innovation approach to AI. Responsible and open innovation gives us all a stake in the AI development process, bringing visibility, scrutiny and trust to these technologies. Opening today’s Llama models will let everyone benefit from this technology.”

All of the buzz and luminary support around Llama 2, and generative AI more generally, is a result of the rapid rise of globally known systems such as ChatGPT, Bard, Stable Diffusion, and more. The technical innovations in Llama 2 are expected to greatly improve performance over LLaMA 1 without increasing the size of the model, which will help to reduce the model’s carbon footprint.

MBZUAI Deputy Department Chair and Professor of Natural Language Processing Preslav Nakov and colleagues from UC Santa Barbara, Nanyang Technological University, and the National University of Singapore demonstrated in a recent paper that ChatGPT – the very engine by which bad actors churn out fake news at an astronomical rate – can be harnessed to fact-check published information just as quickly.

“I am keen to see what Llama 2 can do to help fact-checking and fight fake news, both human and machine-generated,” Nakov said. “As AI methods for fake news detection are data-hungry and given the lack of sufficient training data, it has been proposed to generate synthetic fake news, and then to use it for training. However, such approaches fail to realize an important characteristic of human-written fake news, namely that the purpose is not just to lie, but also to persuade. This is achieved through the use of specific rhetorical devices: propaganda techniques. In this work, we generate fake news that make intentional use of such propaganda techniques, thus better mimicking human-written fake news. As a result, training on our data yields an AI system that is better at detecting human-written fake news.”

Vicuna

In early 2023, Professor Eric Xing was part of a global collaboration with researchers at UC Berkeley, CMU, Stanford, and UC San Diego to develop a sustainable large language model named Vicuna. The energy-efficient Vicuna was trained on Meta’s LLaMA 1, the precursor to the recently released, open-source Llama 2.

Compact relative to its capability, Vicuna fits on a single GPU accelerator, compared with the dozens of GPUs required by ChatGPT. The model delivers responses reaching 90% of the subjective quality of ChatGPT, at a fraction of the energy budget and memory footprint. Best of all, it’s open source, so anyone can use the model to help advance greener modes of content generation.

Vicuna is publicly available at https://vicuna.lmsys.org/


Related

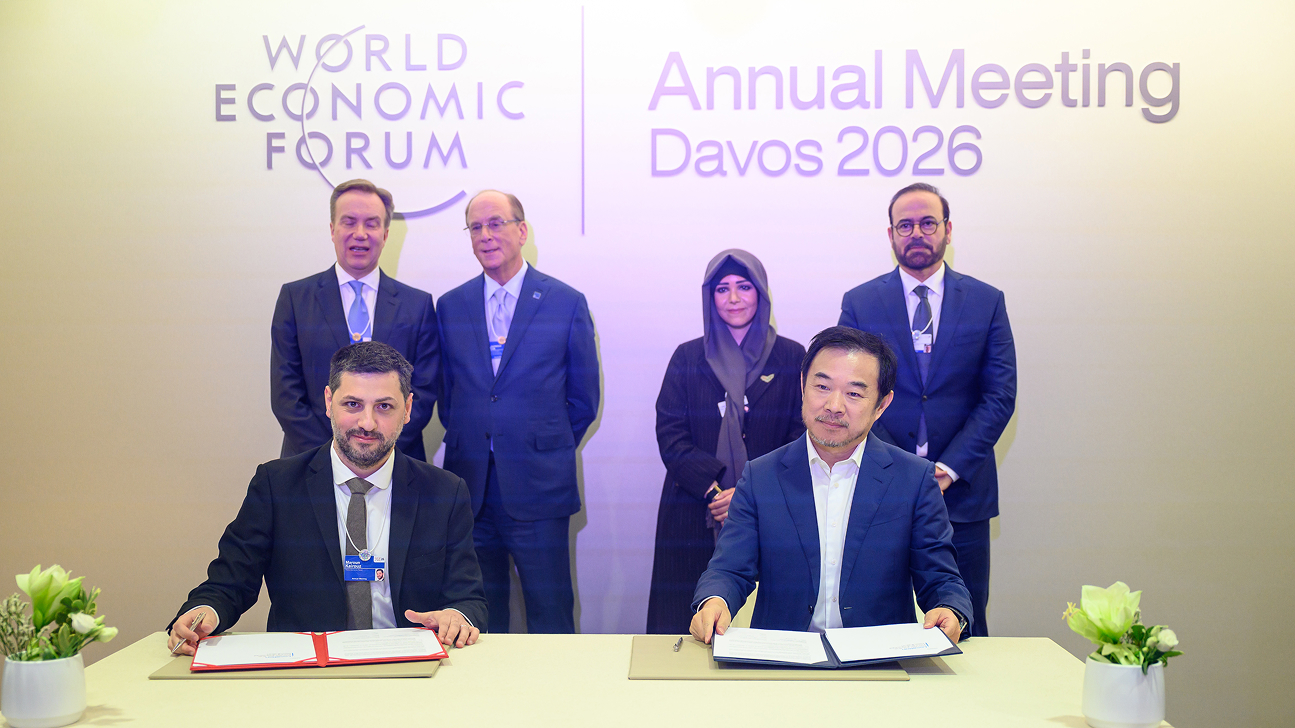

MBZUAI signs agreement with World Economic Forum as Centre for the Fourth Industrial Revolution (C4IR)

MBZUAI will launch the Centre for Intelligent Future as a global platform – connecting AI research with.....

- humanity ,

- economic ,

- social ,

- WEF ,

- World Economic Forum ,

- partnership ,

MBZUAI and AWS collaborate to drive research, skills, and innovation in AI

MBZUAI has signed a multi-year collaboration agreement with Amazon Web Services to advance AI research, enhance technical skills,.....

Read MoreMBZUAI launches Institute for Agriculture and AI to advance digital advisory solutions for smallholder farmers

The IAAI has been established in collaboration with the International Affairs Office at the UAE Presidential Court.....

- advisory ,

- ecosystem ,

- support ,

- farming ,

- farmers ,

- UAE Presidential Court ,

- Institute for Agriculture and Artificial Intelligence ,

- IAAI ,

- Gates Foundation ,

- partnerships ,

- environment ,

- training ,

- agriculture ,

- data ,

- llms ,

- large language models ,