Sustainable AI at scale

Thursday, February 23, 2023

AI at scale is powering new breakthroughs in science for good, including drug design, clean energy, climate change, pandemic mitigation, creative content generation, and intelligent automation.

![MBZUAI Assistant Professor Qirong Ho](https://staticcdn.mbzuai.ac.ae/mbzuaiwpprd01/2022/06/Qirong-Ho-secondary.jpg)

The 2nd CASL Workshop was developed to bring together visionaries in these spaces across industry, academia, non-profits, and government. The aim is to share their visions for AI in science, and to highlight some of the technology that is making the pursuit of AI at scale a responsible endeavor, with lower energy and carbon costs and open-source software that is accessible to all.

Below, organizing committee member and MBZUAI Assistant Professor of Machine Learning Qirong Ho breaks down the scale of the challenges and opportunities in the development of sustainable AI computing.

Leading the movement to reduce the carbon footprint of AI computing

AI holds untapped potential for informing and generating powerful climate solutions, but it has a downside: today’s AI computing is far from environmentally friendly or sustainable. The energy cost of creating an AI application such as ChatGPT, for example, is more than the energy used by 100,000 homes in one year.

We believe that, using the AI Operating System (AIOS) technology that we’ve developed at MBZUAI, we can substantially reduce the three big costs of AI computing — energy, time, and talent.

Reducing the energy cost of AI computing

AIOS has the power to reduce AI computing energy costs in two main ways. First, it makes models smaller, faster, more efficient, and less reliant on expensive hardware for AI creation. Smaller models require less computing power, and hence less time, to train than larger ones. AIOS also directly speeds up the compute operations involved in training and serving AI models, which further reduces the time needed for training. Less time spent training translates directly to lower energy and carbon costs for AI, and makes it realistic to use inexpensive hardware on which training would normally take years.

Second, AIOS will make AI models perform better, behave more reliably, adapt better to ever-changing user needs, and deliver real-time insights using consumer-grade computing resources. AIOS is capable of cost-aware model tuning: unlike traditional tuning methods, which multiply the cost of AI models, this novel tuning method reduces the time, compute, and energy cost of “tuning up” the models, a critical and necessary step each time an AI model is customized for new user needs.

By standardizing common AI modules as Lego-like building blocks, AIOS ensures that models are assembled and behave in a predictable way, like mass-produced cars rolling off an assembly line. By having a standard way of producing AI models, we can confidently certify them for reliable and safe operation, and outfit them with software for real-time monitoring.

Reducing the time-to-market costs of AI computing

AIOS could well become the foundation of next-generation AI software production, via automatic coding, collaborative and privacy-respecting machine learning, and standardized and certifiable software engineering. AIOS will be used to create AI software for sustainable industries such as energy, agriculture, healthcare, water, and transportation of essential goods and services.

Such software will be poised to solve the most challenging problems in sustainability: logistics optimization, clean energy and smart grids, predictive maintenance, drug design, adaptive operation, autonomous vehicle safety, climate change reversal, precision management, and pandemic prevention.

Reducing the cost of developing talented AI computing professionals

Toward the AIOS vision, MBZUAI is building the world’s strongest research team in Machine Learning Systems — a team purpose-built to both innovate and re-design the very fabric that underlies AI computation. This fabric is made up of essential AI infrastructure, such as distributed systems, communication protocols, memory management, universal AI program compilers, secure and private learning, collaborative mechanism design, and machine learning operations.

AIOS, we believe, could well become the engine for AI and the Fourth Industrial Revolution. Realizing the potential of AIOS could well support the UAE in its aspiration to become a major provider of high-end AI software. The impact will be as transformative as the Third Industrial Revolution in computer chip manufacturing, which helped strategically position countries such as Taiwan and South Korea in the global economy.

About Qirong Ho

Ho is co-founder and CTO at Petuum Inc., a unicorn AI startup that has been recognized as a World Economic Forum Tech Pioneer for creating standardized building blocks that enable assembly-line production of AI in a manner that is affordable, sustainable, and scalable, and that requires less training of AI workers.

Ho is a member of the Technical Committee for the Composable, Automatic and Scalable ML (CASL) open-source consortium. His doctoral thesis received the 2015 SIGKDD Dissertation Award (runner-up).

Ho’s primary area of research interest is in software systems for the industrialization of machine learning (ML) programs. These ML software systems must enable, automate, and optimize over multiple tasks: composition of elementary ML program and systems “building blocks” to create sophisticated applications, scaling to very large data and model sizes, resource allocation and scheduling, hyper-parameter tuning, and code-to-hardware placement.

Learn more about the 2nd CASL Workshop.

Related

Intelligent, sovereign, explainable energy decisions: powered by open-source AI reasoning

As energy pressures mount, MBZUAI’s K2 Think platform offers a potential breakthrough in decision-making clarity.

- case study ,

- ADIPEC ,

- K2 Think ,

- IFM ,

- reasoning ,

- llm ,

- energy ,

- innovation ,

- research ,

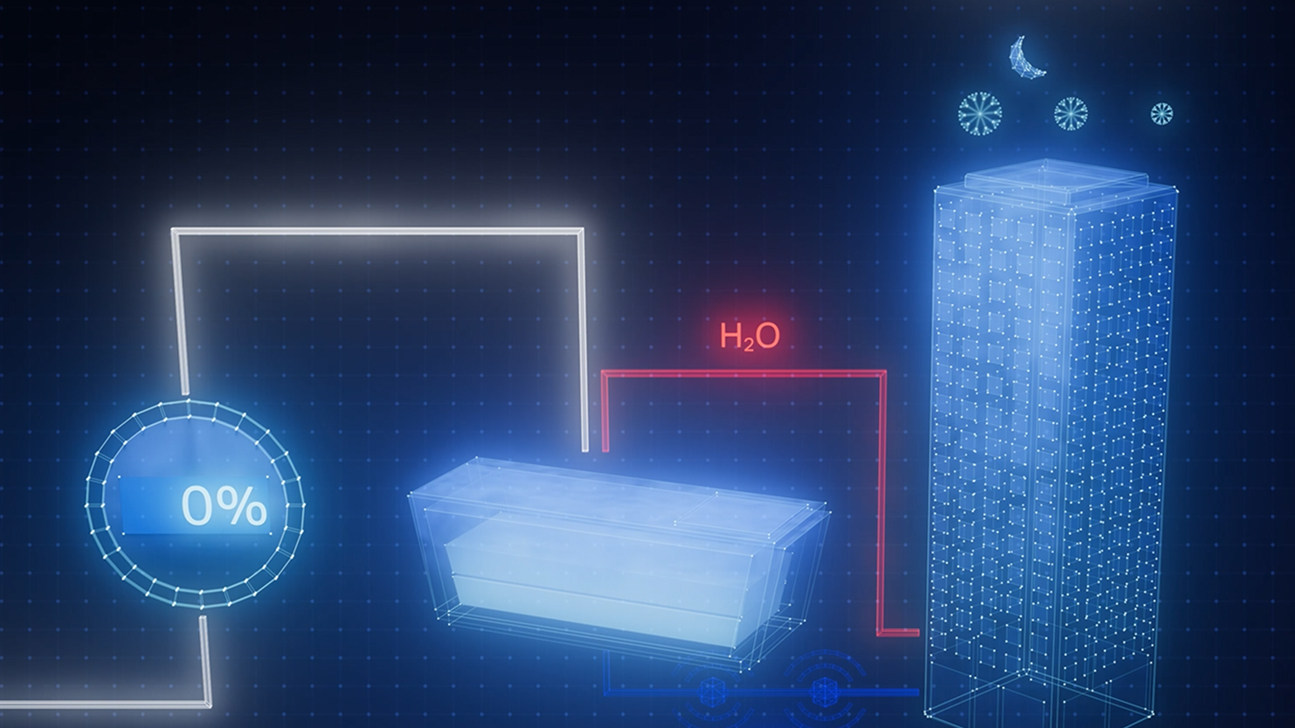

Cooling more people with fewer emissions: intelligent, efficient cooling with AI and ice batteries

MBZUAI's Martin Takáč is leading research to develop an AI-driven energy management system that optimizes the use.....

- energy ,

- cooling ,

- solar ,

- ADIPEC ,

- sustainability ,

- innovation ,

- research ,

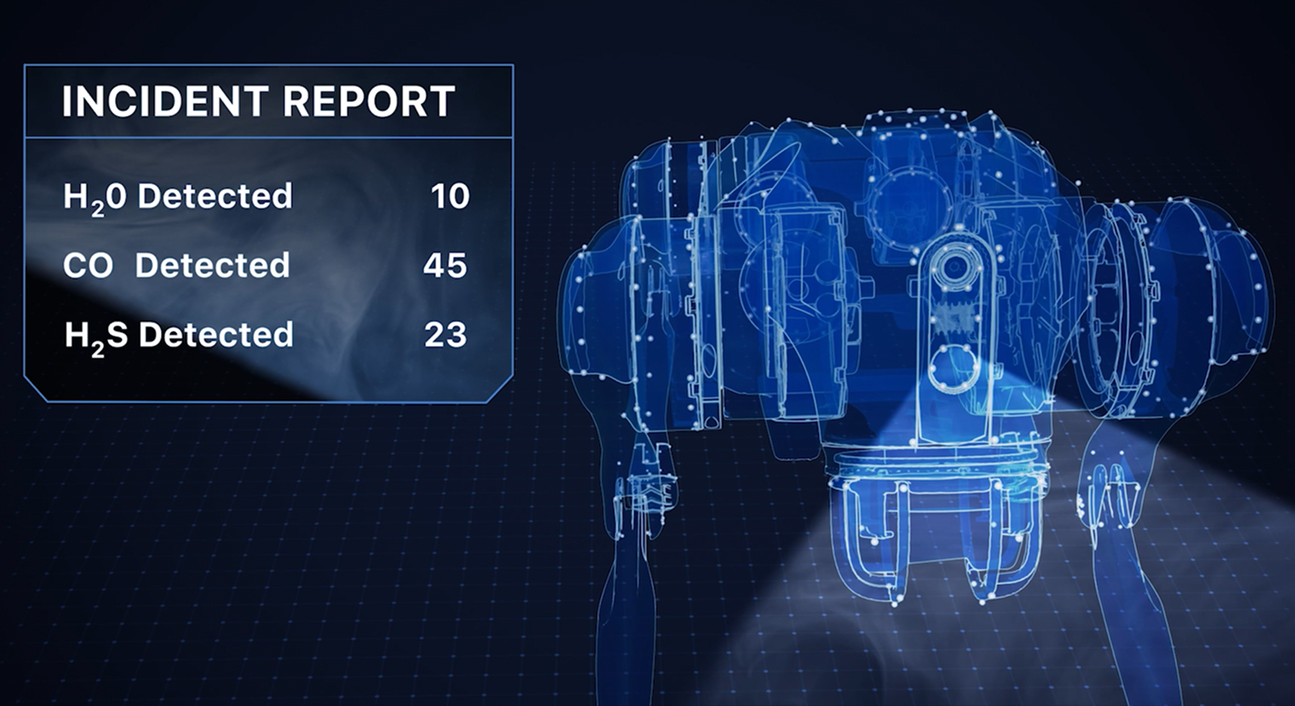

Faster, safer and smarter inspection: AI-powered robotics for industrial safety

MBZUAI's autonomous robotic system, LAIKA, is designed to enter and analyze complex industrial environments – reducing the.....

- research ,

- autonomous ,

- case study ,

- innovation ,

- infrastructure ,

- energy ,

- industry ,

- robotics ,