Teaching robots to spot danger at home: A new approach to be presented at NAACL

Thursday, April 17, 2025

In the future, robots will likely become integrated into our daily lives. We will want them to follow our commands and do what we tell them to do, of course. But in certain situations, we will want them to take initiative and act without explicit instructions. This is particularly true when it comes to maintaining the safety of our homes and workplaces.

Anyone who has taken care of a toddler knows that kids have an innate knack for finding dangerous objects in any environment. It could be an unprotected electrical socket, an unattended bottle of medication, or a pair of scissors left on the table. A robot that can identify dangerous situations and make them safer would be extremely helpful to people.

While household robotics technology has made great progress over the past few years, today’s systems “lack the ability to automatically and proactively detect tasks they should complete,” explains Xiuying Chen, assistant professor of Natural Language Processing at MBZUAI.

Chen is interested in the development of trustworthy AI and ways in which foundation models can be used for multistep reasoning and real-world applications. She and colleagues from MBZUAI and other institutions recently developed a new framework called AnomalyGen that uses foundation models to help household robots anticipate dangerous scenarios.

The researchers will present AnomalyGen at the upcoming Annual Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics (NAACL), which will be held in Albuquerque, New Mexico, at the end of April. Zirui Song and Zhenhao Chen of MBZUAI contributed to the research.

How AnomalyGen Works

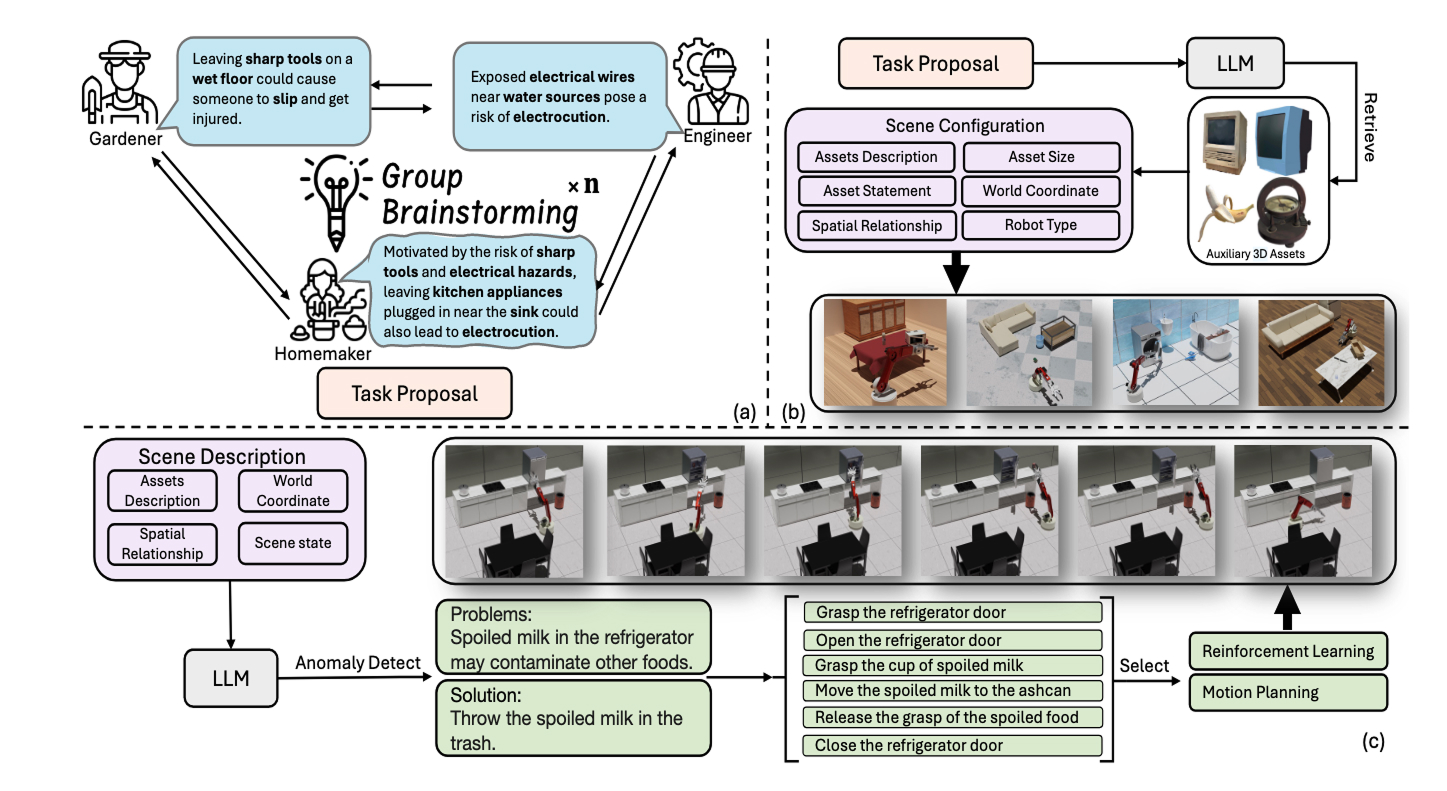

AnomalyGen is composed of three modules. In the first, agents powered by a foundation model work collaboratively to brainstorm potentially hazardous scenarios in the home and generate text descriptions of them. In the second module, a model reviews these text descriptions, retrieves objects related to them from a 3D object dataset, and recreates the scenarios in a simulated environment. In the third module, another system analyzes the 3D scenarios, identifies hazards, and uses reasoning techniques to develop methods that a robot in the simulated environment can use to reduce the potential for harm.

AnomalyGen, developed by researchers at MBZUAI and other institutions, uses foundation models to help robots detect and react to potentially dangerous situations

Collaborative brainstorming with LLMs

Today, if a user prompts an LLM to come up with a list of hazardous scenarios that could be encountered in the home, the LLM will likely create similar, repetitive situations, Chen and her coauthors write in their study. To overcome this, the team used agents to mimic the kind of collaborative problem solving that might be found in a brainstorming session among people.

The researchers used prompt engineering to guide the agents to adopt different personas, with the goal of having them bring diverse perspectives to the brainstorming session. Personas included gardener, home security officer, and interior designer. Each agent also focused on a specific object, such as a chair, dishwasher, knife, lamp, laptop, lighter, or pair of scissors.

The agents’ proposals were iterative, building on others’ outputs. For example, the “gardener” and “engineer” devised a scenario in which sharp tools were left unattended on a wet floor and exposed to electrical wires. Upon review, the “homemaker” added its own unique perspective, suggesting that leaving kitchen appliances plugged in near the sink could also lead to electrocution.
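The iterative, persona-driven brainstorming described above can be illustrated with a minimal sketch. It is not the researchers' implementation: the prompt wording, the function names `build_prompt` and `brainstorm`, and the `stub_llm` stand-in (which replaces a real model call so the example runs offline) are all assumptions made for illustration.

```python
from typing import Callable, List, Tuple


def build_prompt(persona: str, focus_object: str, prior: List[str]) -> str:
    """Compose a role-play prompt that shows the agent earlier proposals."""
    history = "\n".join(f"- {p}" for p in prior) or "- (none yet)"
    return (
        f"You are a {persona}. Focus on the object: {focus_object}.\n"
        f"Hazard scenarios proposed so far:\n{history}\n"
        "Propose one new household hazard that differs from those above."
    )


def brainstorm(
    agents: List[Tuple[str, str]],
    llm: Callable[[str], str],
    rounds: int = 1,
) -> List[str]:
    """Let each (persona, object) agent add to a shared list of proposals."""
    proposals: List[str] = []
    for _ in range(rounds):
        for persona, focus_object in agents:
            reply = llm(build_prompt(persona, focus_object, proposals)).strip()
            # Skip empty replies and exact repeats of earlier proposals.
            if reply and reply not in proposals:
                proposals.append(reply)
    return proposals


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call: echoes persona and object."""
    first_line = prompt.splitlines()[0]
    persona = first_line.split("You are a ")[1].split(".")[0]
    focus_object = first_line.split("object: ")[1].rstrip(".")
    return f"[{persona}] hazard involving: {focus_object}"


agents = [
    ("gardener", "scissors"),
    ("home security officer", "lighter"),
    ("interior designer", "lamp"),
]
scenarios = brainstorm(agents, stub_llm)
```

Passing the running list of proposals into every prompt is what makes the session iterative: each agent sees what the others have already suggested and is asked for something different. Swapping `stub_llm` for a real model client would be the obvious next step, though the details of that call depend on the provider.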

While AnomalyGen could theoretically generate an unlimited number of tasks, the researchers focused on 111 that were grouped into three categories: household hazards, hygiene management, and child safety.

Chen says that role-playing is a hot topic in LLM research today, and it proved to be an effective way to get agents to provide different perspectives in their outputs. In the future, she says, one way to make the outputs of an LLM even more personalized would be to train agents using the daily dialogue of real people who work in these roles.

From text to simulation

After the text descriptions of scenarios were generated during the brainstorming session, the researchers had a foundation model retrieve objects from a 3D object database called Objaverse and arrange the objects in a 3D virtual environment. The researchers used other systems and humans to review the arrangement of the objects to make sure they were realistic.

In the last step, another foundation model analyzed the virtual scenes and recommended approaches that a household robot could use to make them safer. It also used reasoning techniques to break down the steps into subtasks and recommended the best learning method for the robot.
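To make these two steps concrete, here is a toy sketch of retrieving objects for a scenario description and then breaking a mitigation into subtasks. Everything in it is an assumption for illustration: the in-memory `CATALOG` and its asset IDs stand in for a real 3D object database, retrieval is simple tag matching, the plan comes from a hand-written template where the article describes a foundation model, and the learning-method labels are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Toy stand-in for a 3D asset catalog; IDs and tags are made up.
CATALOG: Dict[str, List[str]] = {
    "asset-001": ["knife", "kitchen"],
    "asset-002": ["table", "furniture"],
    "asset-003": ["lamp", "lighting"],
}


def retrieve_assets(description: str) -> List[str]:
    """Return IDs of catalog assets with a tag that appears in the text."""
    cleaned = description.lower().replace(",", " ").replace(".", " ")
    words = set(cleaned.split())
    return [aid for aid, tags in CATALOG.items() if words & set(tags)]


@dataclass
class Subtask:
    description: str
    learning_method: str  # hypothetical label, not taken from the paper


@dataclass
class MitigationPlan:
    hazard: str
    subtasks: List[Subtask] = field(default_factory=list)


def plan_for_unattended_object(obj: str, safe_place: str) -> MitigationPlan:
    """Hand-written template; a foundation model would generate this."""
    return MitigationPlan(
        hazard=f"unattended {obj}",
        subtasks=[
            Subtask(f"move to the {obj}", "motion_planning"),
            Subtask(f"grasp the {obj}", "reinforcement_learning"),
            Subtask(f"place the {obj} in the {safe_place}", "motion_planning"),
        ],
    )


description = "A knife is left near the edge of the kitchen table."
assets = retrieve_assets(description)
plan = plan_for_unattended_object("knife", "drawer")
```

Keeping each subtask paired with its own learning method mirrors the idea that different steps of one mitigation may call for different techniques.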

A key contribution of AnomalyGen relates to efforts by Chen and her colleagues to bridge what is known as a “domain gap” between natural language descriptions and 3D simulated environments. Chen notes that while it’s difficult to develop systems that can work across the divides that separate domains, “our simulations are improving, and this gap is expected to become smaller in the future.”

Results and next steps

The researchers found that the system resolved the more than 100 hazardous scenarios generated by AnomalyGen 83% of the time. The main reason for failure was similar to why a robot might have trouble in a real home: it was often confused by “everyday clutter” in the virtual environments. Chen and her colleagues note that results will likely improve in the future as researchers develop better learning algorithms.

While this current work was conducted in a simulated environment, Chen hopes to collaborate with robotics researchers at MBZUAI to modify the system so that it can be used with real robots. Moving into the real world will allow the researchers to collect data from the robots and fine-tune the foundation models with this real-world data.

If robots really are to play a role in our daily lives, scientists and developers will need to build systems that are more useful and easier for people to interact with. A key aspect is that these systems will anticipate users’ daily behavior and do what is expected of them, Chen explains.

When this happens, the benefits may be enormous. “I think robots will really change our daily lives, making them more convenient, and that’s what motivates me,” Chen says.
