Research Talks: Bridging neuroscience and AI

Thursday, January 30, 2025

A significant challenge for AI scientists today is to effectively replicate a human-like memory in machines. Memory is a fundamental aspect of human cognition, allowing us to store, organize and retrieve information, but emulating the brain’s context-rich and multilayered approach to memory storage and retrieval in AI has proved difficult.

This is the research focus of Surya Narayanan Hari, a graduate student at the California Institute of Technology (Caltech), who shared his progress with the MBZUAI community at one of the University’s recent Research Talks at its Masdar City campus.

“Computer science is in need of new ideas, and I think biology is ripe with parallels to computing,” said Hari. “For example, there are biological circuits and biological processes that can inform the way we build models. It’s very interesting to build mechanical analogs using biological components, and intelligence is one area we can do this.

“My principal investigator at Caltech, Matt Thomson, and I discussed the topic of memory retrieval back in 2022, and we asked whether it was necessary to have a large monolithic model to answer all questions. We realised that the way we think about models today is too monolithic, and that the ways to break this down in computer science were too mathematical to use immediately. So we looked for answers in biology, and found our inspiration.”

Understanding human and AI memory

This inspiration came in the form of the thalamus — the part of the human brain that filters sensory and motor signals and sends them to the appropriate areas of the cortex for processing and response.

“Caltech is a very tight-knit community, so we were able to interface directly with human intelligence experts all the time, and that helped us learn more about the thalamus,” said Hari.

“With the thalamus, biology gave us the inspiration to build routed monolithic models and routed architectures. The thalamus finds the right response to a stimulus. There’s a store in the thalamus that is trained or has learned to do a specific thing given a certain stimulus — it will trigger a cascade of responses, interfacing with different parts of the body, that add up to a specific action tailored to that stimulus.

“In computing terms, if you ask a model to solve a problem and it is allowed to query other models, then it can find the right model to solve the problem.”
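The routing idea Hari describes can be illustrated with a toy sketch. This is not the method from his research. It is a minimal illustration under stated assumptions: the "specialist models" are plain Python functions, the keyword sets are invented for the example, and the router simply picks the specialist whose keywords overlap most with the query. All names here (`SPECIALISTS`, `route`, `answer`) are hypothetical.

```python
from typing import Callable, Dict, Optional, Set, Tuple


# Hypothetical specialists: plain functions standing in for real models.
def arithmetic_model(query: str) -> str:
    return "arithmetic specialist handles: " + query


def spelling_model(query: str) -> str:
    return "spelling specialist handles: " + query


# Assumed routing table: keywords that hint at each specialist.
SPECIALISTS: Dict[str, Tuple[Set[str], Callable[[str], str]]] = {
    "arithmetic": ({"sum", "add", "multiply", "plus"}, arithmetic_model),
    "spelling": ({"spell", "spelling", "letters"}, spelling_model),
}


def route(query: str) -> Optional[str]:
    """Return the name of the specialist with the most keyword overlap, or None."""
    words = set(query.lower().split())
    best_name, best_score = None, 0
    for name, (keywords, _model) in SPECIALISTS.items():
        score = len(words & keywords)
        if score > best_score:
            best_name, best_score = name, score
    return best_name


def answer(query: str) -> str:
    """Send the query to the routed specialist, if one matches."""
    name = route(query)
    if name is None:
        return "no specialist found for: " + query
    _keywords, model = SPECIALISTS[name]
    return model(query)
```

In a real system the keyword match would likely be replaced by a learned router, but the shape is the same: a small component decides which model should handle the stimulus, and only that model does the work.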

Hari explained that in the human brain, memory retrieval occurs on multiple levels. First is the ‘object level’, which involves recalling specific details like the spelling of words, the association between the names of colors and the colors themselves, arithmetic, the sound of different keys, and so on.

Second is the ‘embedding level’, where memories involve more abstract or complex associations, such as remembering particular smells. And third is the ‘circuit level’, which deals with large-scale processes such as performing algorithmic tasks or generating appropriate motor responses to stimuli.

Compare that with current AI systems, where memory retrieval primarily operates through architectures such as retrieval-augmented generation (RAG). These systems fine-tune models to retrieve relevant pieces of information by using ‘embedding similarity’, which essentially matches data points that are alike. AI, however, rarely attempts to explicitly structure retrieval at different levels, such as by parametrising object identity or spatial proximity, in the way the human brain does. That is what Hari’s work is attempting to change.
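To make ‘embedding similarity’ concrete, here is a minimal sketch of how similarity-based retrieval is commonly done. It assumes each stored item has already been turned into a vector; the three-dimensional vectors and item names below are made up for illustration, and real systems use learned embeddings with hundreds or thousands of dimensions. The function names are hypothetical.

```python
import math
from typing import Dict, List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Invented toy embeddings; a real system would compute these with a model.
DOCUMENTS: Dict[str, List[float]] = {
    "thalamus": [0.9, 0.1, 0.0],
    "hippocampus": [0.7, 0.3, 0.1],
    "transformer": [0.0, 0.2, 0.9],
}


def retrieve(query_vec: List[float], k: int = 1) -> List[str]:
    """Return the names of the k stored items most similar to the query vector."""
    ranked = sorted(
        DOCUMENTS,
        key=lambda name: cosine_similarity(query_vec, DOCUMENTS[name]),
        reverse=True,
    )
    return ranked[:k]
```

Note that this kind of retrieval is flat: every item is compared on one score, with no separate notion of object identity or spatial proximity.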

A second approach prioritises distributed memory to improve image retrieval. By analysing an image’s components for semantic similarity and spatial proximity, the system can retrieve images in a way that mimics how humans understand visual relationships.

This allows small models with access to curated structured databases that are trained on retrieval to outperform large general-purpose models.

“To me, it’s similar to the question of whether a single human with access to a huge library is more powerful than many humans with a single book,” said Hari. “Memories are stored all over the brain and there’s a single ledger in the hippocampus that tells you where to retrieve relevant memories or pieces of information from. Likewise, I want to see if we can create a distributed memory analog that would mean a smaller model can achieve the same level of performance as larger models.”

The importance of biology-AI research

Hari was speaking at MBZUAI by invitation of Eduardo da Veiga Beltrame, Assistant Professor of Computational Biology, who believes Hari’s work to bridge AI and biology is important to the future of AI innovation.

“I know Surya as a colleague from my Ph.D. at Caltech. Soon after I finished my Ph.D., Surya joined the lab of Matt Thomson, where I rotated and have many friends that worked in his lab,” said Beltrame.

“Matt’s lab is a hub for interdisciplinary work with biological themes and motivations, and Surya’s trajectory and research is a clear example of biological thinking and how that thinking gets translated into machine learning methods and applications.

“A lot of his work has been motivated to solve biological problems using machine learning methods, but he is now working on the converse: solving problems in machine learning using biological inspiration. This kind of thinking, which is only really possible when you have deep familiarity with biology, problems in biology, and how researchers in biology think, is something I want to foster at the newly established department of computational biology, and in our soon-to-be-launched Master’s and Ph.D. in computational biology.”

Hari and Thomson have an additional link to MBZUAI through their collaboration with Bahey Tharwat, a master’s student at the University. The trio, plus co-authors Guruprasad Raghavan, Dhruvil Satani and Rex Liu, worked together on the paper “Engineering flexible machine learning systems by traversing functionally invariant paths”, published in the journal Nature Machine Intelligence.

Hari added that the relationship between biology and AI will only grow in the years ahead, and complimented Beltrame and MBZUAI’s advances in this domain.

“I want to highlight Eduardo’s work in this area, and in education. I think in the future a lot of students are going to ask hard questions of biology through language models, and it will be increasingly important for us to know what the truth is, and how biology intersects with AI.

“AI and biology are both fuzzy problems right now, and MBZUAI’s efforts in computational biology are helping us to understand the benefits of each of them to the other one. This is an important way of getting a better understanding of ground truth and, as a result, a better and more informed next generation of students and research.”

Related

MBZUAI marks five years of pioneering AI excellence with anniversary ceremony and weeklong celebrations

The celebrations were held under the theme “Pioneering Tomorrow: AI, Science and Humanity,” and featured events, lectures,.....

- celebration ,

- five year anniversary ,

- ceremony ,

- event ,

- board of trustees ,

- campus ,

- students ,

- faculty ,

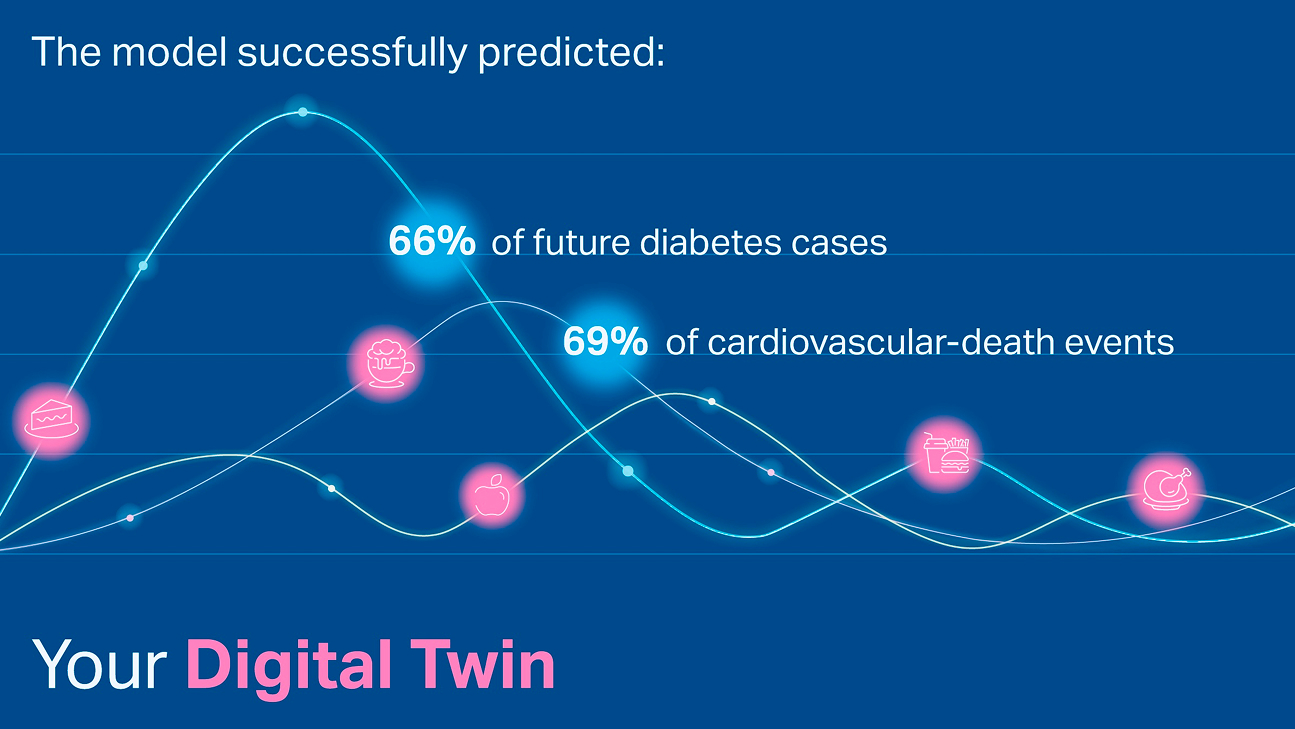

AI foundation model GluFormer outperforms clinical standards in forecasting diabetes and cardiovascular risk

Nature-published paper with MBZUAI researchers demonstrates how AI can transform glucose data into powerful predictors of long-term.....

- digital public health ,

- cardiovascular ,

- diabetes ,

- HPP ,

- health ,

- foundation models ,

- nature ,

MBZUAI's Launch Lab equips alumni and students with practical startup tools

The six-week pilot program brought alumni and students together to turn early startup ideas into tangible ventures.

- entrepreneurship ,

- alumni ,

- startups ,

- alumni relations ,

- launch lab ,