MBZUAI students win award for study presented at Asian Conference on Computer Vision

Monday, January 13, 2025

Students from MBZUAI have won an award as runners up for the best student paper at the Asian Conference on Computer Vision (ACCV), which was held in December in Hanoi. The researchers developed a new method called ObjectCompose that allows users to easily generate object-to-background variations of images that can be used to validate the performance of neural networks designed for computer vision applications.

The team’s approach provides developers with a new method for testing the performance of artificial intelligence systems and can complement current benchmark datasets.

The project was led by Hashmat Shadab Malik, a doctoral student in computer vision at MBZUAI, and Muhammad Huzaifa, a master’s student in machine learning at MBZUAI.

Challenges in training

When training computer vision systems, developers expose them to many different images with the hope that they learn to make sense of the defining features of objects in images. In the classic example, a system that is being developed to classify images of cats and dogs will be exposed to many different photos of both animals. Through training, the system will learn the visual characteristics that separate images of cats from images of dogs. That’s the goal, at least.

One of the challenges of training neural networks, however, is that these systems can often make the correct prediction about an image through a short cut. They may learn that certain objects that appear in image backgrounds are more associated with dogs than they are with cats, for example. As a result, these systems might not perform as expected when deployed.

After training, developers test computer vision systems on entirely different datasets, known as validation datasets. This process provides a sense of how good systems are at the task they were trained to do, Huzaifa explains. That said, “validation sets don’t capture all the diversity that is present in the real world, and they will only give you an approximation of how good a model is,” he says.

Validating computer vision systems

To add more variability to validation datasets, developers have come up with techniques to introduce perturbations to images, typically to the image background. These changes make it more difficult for a system to classify an image or carry out other tasks. These changes can also alert developers to situations where models may be taking a short cut and are paying attention to the image background instead of the object of interest.

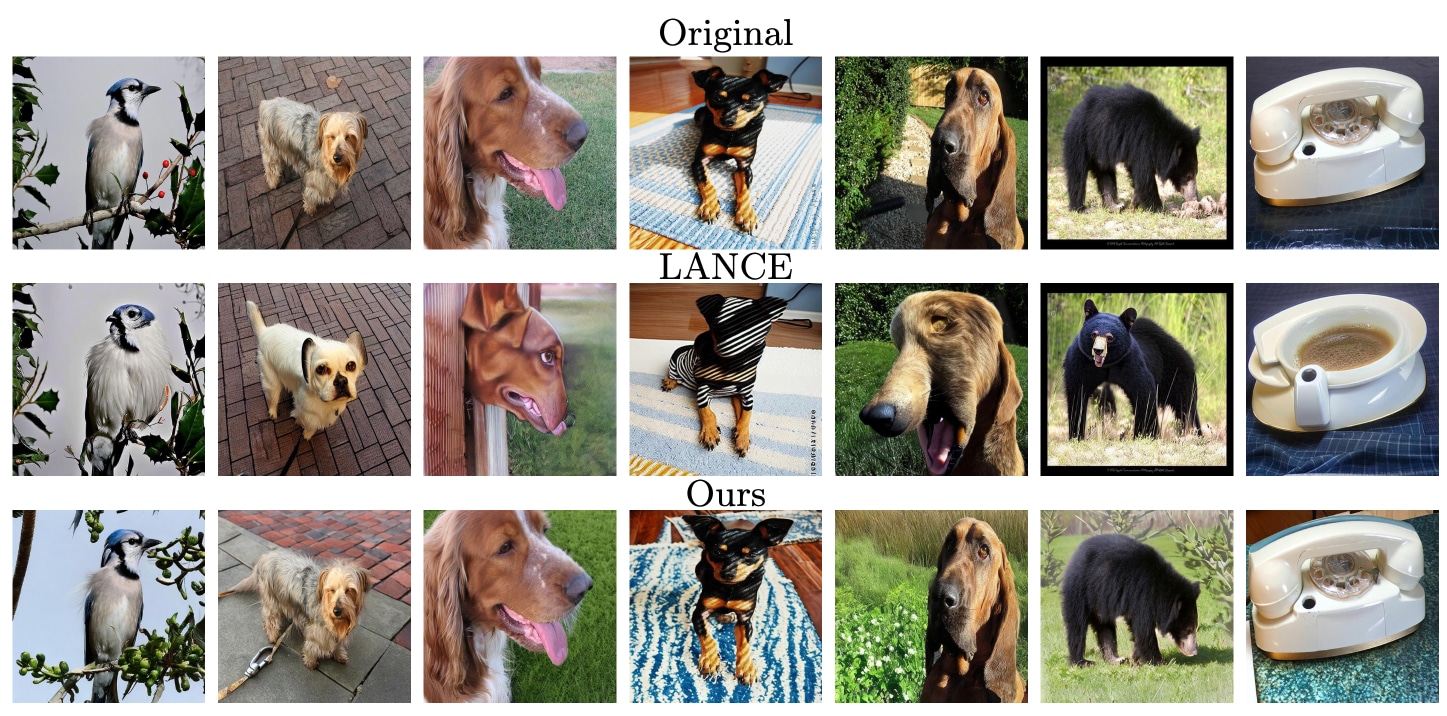

Adding doctored images to validation datasets introduces more variability to the dataset and gives developers a better idea of how a system will perform once it is deployed in the real world. Current approaches, however, tend to make changes that distort the main object in the image, changing the image’s semantics, or meaning.

ObjectCompose has been designed to solve this problem by generating diverse backgrounds for images while keeping the object of interest the same. It does this with only a simple text prompt from the user.

To introduce these changes to image backgrounds, Huzaifa and his co-authors developed an automated process that uses a technology called a segment anything model, or SAM, which was developed by Meta and can identify objects and backgrounds in images. SAM allows ObjectCompose to separate the object of interest from the background and apply changes only to the background, retaining the semantics of the image.

ObjectCompose (Ours) is an automated system that uses text prompts to generate background variations in images while not affecting the object of interest. Other methods, such as LANCE, distort the background and the object of interest, changing the image semantics.

Testing on ObjectCompose

Huzaifa and his co-authors were interested to see how computer vision models performed on datasets that were edited with ObjectCompose. They took two standard computer vision datasets, ImageNet and COCO, and ran a subset of images from these datasets through ObjectCompose. These modified datasets featured images that had changes to the background color and texture. ObjectCompose also created what are known as adversarial versions, which are intentionally difficult for systems to process.

The researchers tested several computer vision systems on these modified datasets in different tasks, including classification, object detection and captioning. They found that compared to the baseline method, changes to images made by ObjectCompose led to a drop in performance of 13.64% by computer vision systems in classification tasks. Adversarial changes led to an even larger drop of nearly 70%. These numbers suggest that the benchmark dataset crafted from ObjectCompose is significantly more difficult compared to other datasets and that there is room to improve vision models.

Huzaifa and his colleagues also found that changes made by ObjectCompose had a greater impact on classification models than they did on models designed for object detection. “It’s possible that the reason why object detection models are more robust to the changes is because the way in which they are trained,” Huzaifa says. “The models are explicitly told that they have to detect an object in an image no matter what the background is.”

Building better systems

Huzaifa explains how in the past several years, developers have made huge advancements with large language models and today these systems perform extremely well for many different tasks. Vision models, however, still need to be significantly improved and it’s not clear if current industry benchmark datasets provide an accurate depiction of their performance. “It’s very difficult to say how robust these models are,” Huzaifa says. “We need to continue to improve their generalization abilities.”

Huzaifa intends to build on insights gained working on ObjectCompose by developing new methods that can introduce additional changes to images, providing even better evaluations of computer vision models. The hope is that ObjectCompose — and whatever Huzaifa and his colleagues build next — will play a role in the development of the next generation of vision models, including those that are used in multimodal large language models.

- student achievements ,

- research ,

- computer vision ,

- students ,

- award ,

- ACCV ,

Related

MBZUAI and Minerva Humanoids announce strategic research partnership to advance humanoid robotics for applications in the energy sector

The partnership will facilitate the development of next-generation humanoid robotics tailored for safety-critical industrial operations.

Read MoreAI and the silver screen: how cinema has imagined intelligent machines

Movies have given audiences countless visions of how artificial intelligence might affect our lives. Here are some.....

- cinema ,

- art ,

- fiction ,

- science fiction ,

- artificial intelligence ,

- AI ,

Youngest MBZUAI student sets sights on AI superintelligence at just 17

Brandon Adebayo joined the University’s inaugural undergraduate cohort, driven by a passion for reasoning, research, and the.....

- engineering ,

- student ,

- Undergraduate ,