Using child’s play for machine learning

Friday, June 24, 2022

Metaphorically, the toddler brain is similar to a sponge. It is ever evolving — both recalling things from the past, and developing new connections to the present. This capacity is so great, in fact, that the founder of the Montessori learning system, Dr. Maria Montessori, termed the first six years of life the period of the “Absorbent Mind” due to children’s extraordinary capacity to soak up and recall vast amounts of new information. Not surprisingly then, for the machine learning community, one long-standing goal has been to mimic the super-human feats of learning observed in the developing brains of toddlers

Teaching a machine to recognize objects, persons and places in images or videos creates many more challenges and needs specific guidance. It needs thousands of sets of data to be able to recognize, for example, car makes and models in traffic cameras. When the models are deployed, they also find it challenging to discover newly emerging object types e.g., new car models. To learn anything new or keep up-to-date with the latest Bugatti or Ferrari, it needs new sets of examples. With each update, the risk is that it may forget about the old 2008 Nissan Sunny.

But what if it didn’t? What if it could start to decipher and recognize when it doesn’t know a valid object and predict a separate category for that object instead of drawing a blank. You can show a child a new object and they’ll immediately know it is a toy even if they don’t know all the practices and functions of that toy; they know not to ignore it and recognize it as something new. Will artificially intelligent machines be able to mimic this human learning process of curiosity and discovery? That is the goal right now for Dr. Salman Khan, an associate professor of computer vision at MBZUAI.

Khan’s research is focused on creating systems capable of continuous, lifelong learning, just like humans. He has been actively working on learning from limited data (zero and few-shot learning), adversarial robustness of deep neural networks and continual life-long learning systems for computer vision problems. The above-mentioned tasks can help us realize intelligent autonomous systems that can better understand the real-world for improved recognition, detection, segmentation, and detailed scene comprehension.

Along with fellow MBZUAI professors Dr. Fahad Khan (not related) and Dr. Rao Anwer as well as partners such as Stony Brook University, Google Research, University of Central Florida, University of California, Merced and the Inception Institute of Artificial Intelligence (IIAI), Khan has completed several recent papers, working at the crossroads of computer vision, machine learning, deep learning, and image processing.

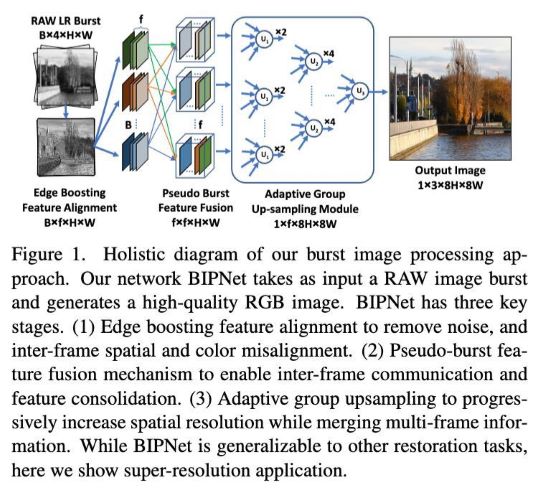

Together, they presented their research at the 34th annual Conference on Computer Vision and Pattern Recognition (CVPR 2022) in New Orleans, Louisiana. Khan and his co-authors’ paper on “Burst Image Restoration and Enhancement” was among the 33 Best Paper Award Finalist papers at CVPR 2022. The goal is to merge (degraded) burst images from smartphones to generate high-quality output (see diagram).

Together, they presented their research at the 34th annual Conference on Computer Vision and Pattern Recognition (CVPR 2022) in New Orleans, Louisiana. Khan and his co-authors’ paper on “Burst Image Restoration and Enhancement” was among the 33 Best Paper Award Finalist papers at CVPR 2022. The goal is to merge (degraded) burst images from smartphones to generate high-quality output (see diagram).

Khan is a CVPR 2022 area chair and assisted with reviewing papers. He is also an area chair at the top machine learning conference, the Thirty-sixth Conference on Neural Information Processing Systems (NeurIPS 2022), which will also be held in New Orleans in late-November 2022.

Creating an open-ended system

Khan delivered three oral presentations at CVPR 2022 as well as three posters, with much of the research based on themes of vision transformers, incremental lifelong learning, and open-world learning. At CVPR 2021, MBZUAI researchers introduced the open-world object detection problem for the first time. This year’s paper “OW-DETR: Open-world Detection Transformer” explores this direction further and introduces vision transformer models for flexibly learning new object types.

“Transformers are a new architecture family, which can process different data modalities,” Khan said during our interview with him at CVPR 2022. “Whether you have text, speech, images, or videos, they can process different sets of information within a single unified architecture using the same basic building blocks of self-attention. We use this framework — the open-world detection framework — to show for the first time how it can be used to develop an open-ended system, which is continuously discovering new categories, and then incrementally extending its vocabulary by learning about them.

“As humans we continually grow our knowledge and adapt to dynamic situations. And the ability to adapt to new environments and learn new skills will be an essential building block towards the creation of autonomous agents.

[wps_pull-out-quote-right content=”Your model must continuously adjust according to newly emerging demands, fast evolving trends and diverse concepts and paradigms introduced to it…” surename=”Salman Khan” source=”MBZUAI Associate Professor of Computer Vision”]“In machine learning, right now, typically the models are trained in a static manner — all the data is fed into a model for training and the learned weights remain fixed. One example is the popular ImageNet dataset with 1000 object classes which would be used for training in a single training episode and the representations are learned for all 1000 classes such as different dog breeds and fruit varieties. However, what if new object classes are of interest in the future? If a model is naively trained on new data, it will catastrophically forget the past object classes.”

The high-level goal for Khan and his colleagues is to have a scalable model that can continually be adapted and not have to retrain from scratch each time more object classes are added. Such models must avoid forgetting past knowledge and reduce intransigence in learning new concepts. He links this to human education and learning where the adaptation and mental progress never stops, and the new information does not interfere with the past learning.

“We want to introduce new concepts without forgetting the previous knowledge,” Khan said. “It needs to be adaptable. Your model must continuously adjust according to newly emerging demands, fast evolving trends and diverse concepts and paradigms introduced to it. It should be able to keep using the useful content from the past and must be able to flexibly learn about new stuff. In other words, the model should not be rigid enough to stop learning and should also not be too plastic – meaning that it forgets everything from the past.

“Scholars stipulate the elasticity-plasticity dilemma in artificial neural networks. Our area of concern is to stretch the stable model – strike the right balance between these two extremes, so that you’re retaining useful knowledge from the past and then adapting to interesting new phenomena that is appearing. The machine is reusing previous contents to help understand new things with less data.”

There are many adaptations for such systems in the real world. It could be used for identity recognition systems for immigration through biometrics, shopper analysis at shopping centers, smart cities and social applications, and data and trends analysis tools.

CVPR 2022 papers

In total, MBZUAI faculty and students published 31 papers, including six oral presentations at CVPR 2022. Seven MBZUAI faculty appear on more than one paper and in publishing their work, MBZUAI faculty connect the university to 16 countries across five continents with China, the U.S., Australia, and Korea as the top four countries.

- K J Joseph, Salman Khan, Fahad Shahbaz Khan, Rao Muhammad Anwer, Vineeth N Balasubramanian; “Energy-based Latent Aligner for Incremental Learning” (Poster), Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 7452-7461

- Anirudh Thatipelli, Sanath Narayan, Salman Khan, Rao Muhammad Anwer, Fahad Shahbaz Khan, Bernard Ghanem; “Spatio-temporal Relation Modeling for Few-shot Action Recognition” (Poster), Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 19958-19967

- Akshay Dudhane, Syed Waqas Zamir, Salman Khan, Fahad Shahbaz Khan, Ming-Hsuan Yang; “Burst Image Restoration and Enhancement” (Oral, Best Paper Award Finalist), Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 5759-5768

- Akshita Gupta, Sanath Narayan, K J Joseph, Salman Khan, Fahad Shahbaz Khan, Mubarak Shah; “OW-DETR: Open-world Detection Transformer” (Poster), Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 9235-9244

- Kanchana Ranasinghe, Muzammal Naseer, Salman Khan, Fahad Shahbaz Khan, Michael S. Ryoo; “Self-supervised Video Transformers” (Oral), Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 2874-2884

CVPR is organized by the Computer Vision Foundation (CVF) and the Institute of Electrical and Electronics Engineers (IEEE). Conference proceedings will be publicly available via the CVF website, with final versions posted to IEEE Xplore after the conference.

Related

AI and the silver screen: how cinema has imagined intelligent machines

Movies have given audiences countless visions of how artificial intelligence might affect our lives. Here are some.....

- cinema ,

- AI ,

- artificial intelligence ,

- art ,

- fiction ,

- science fiction ,

Mind meld: agentic communication through thoughts instead of words

A NeurIPS 2025 study by MBZUAI shows that tapping into agents’ internal structures dramatically improves multi-agent decision-making.

- agents ,

- neurips ,

- machine learning ,

Balancing the future of AI: MBZUAI hosts AI for the Global South workshop

AI4GS brings together diverse voices from across continents to define the challenges that will guide inclusive AI.....

- representation ,

- equitable ,

- global south ,

- AI4GS ,

- event ,

- workshop ,

- languages ,

- inclusion ,

- large language models ,

- llms ,

- accessibility ,