Frontiers of federation at the AI Quorum

Tuesday, December 19, 2023

Leading scientists from across the world met earlier this month in Abu Dhabi for MBZUAI’s Second Workshop on Collaborative Learning, as part of the AI Quorum. The three-day session served as a forum where researchers discussed recent developments in collaborative and federated learning with a focus on sustainable development, as defined by the United Nations’ Sustainable Development Goals.

Presentations considered how collaborative learning principles can be applied to medicine and biology, ecological conservation, and humanitarian aid, among other areas.

Collaborative and federated learning are related concepts that have been developed by scientists to train machine learning algorithms with data that are provided by multiple devices or users. People who work in the field devise ways to provide models with more and better data, while considering constraints like privacy and security.

An illustrative example of federated learning, mentioned at the workshop by Peter Kairouz, research scientist at Google, is Gboard, a virtual mobile keyboard app developed by Google.

When users type on Gboard, a machine learning model on their mobile device learns from their typing habits, helping to predict the next word that will be written or to correct frequent misspellings. Instead of sending the user’s data back to Google’s servers, Gboard sends only information about how the model on that device has changed based on the information it has been exposed to. Google’s servers receive these updates from many users and aggregate them to improve the base model, which is then sent back to users’ devices in an improved form. Every user benefits from insights provided by others, while each user’s data remains private.
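The update-and-aggregate loop described above can be sketched in a few lines of Python. This is a toy illustration of federated averaging under simplifying assumptions, not Gboard’s actual implementation: the “model” is just a list of numbers, the local objective simply pulls the weights toward the average of the device’s own examples, and the names `local_update`, `aggregate`, and `federated_round` are hypothetical.

```python
from typing import List, Tuple

Weights = List[float]


def local_update(global_weights: Weights,
                 local_data: List[Weights],
                 lr: float = 0.5) -> Tuple[Weights, int]:
    """Runs on a device: return only the weight change and the example count.

    Toy objective (an assumption for illustration): take one step that moves
    the weights toward the mean of the device's local examples.
    """
    n = len(local_data)
    dim = len(global_weights)
    local_mean = [sum(x[i] for x in local_data) / n for i in range(dim)]
    delta = [lr * (local_mean[i] - global_weights[i]) for i in range(dim)]
    return delta, n  # the raw examples never leave this function


def aggregate(global_weights: Weights,
              updates: List[Tuple[Weights, int]]) -> Weights:
    """Runs on the server: average the deltas, weighted by example count."""
    total = sum(n for _, n in updates)
    dim = len(global_weights)
    avg_delta = [sum(delta[i] * n for delta, n in updates) / total
                 for i in range(dim)]
    return [global_weights[i] + avg_delta[i] for i in range(dim)]


def federated_round(global_weights: Weights,
                    clients: List[List[Weights]]) -> Weights:
    """One round: every client computes an update, the server combines them."""
    updates = [local_update(global_weights, data) for data in clients]
    return aggregate(global_weights, updates)


if __name__ == "__main__":
    # Two simulated devices with different amounts of local data.
    clients = [[[1.0, 1.0], [3.0, 3.0]], [[5.0, 5.0]]]
    print(federated_round([0.0, 0.0], clients))
```

The server only ever sees the per-device deltas and example counts. Production systems typically layer further protections on top of this basic loop, which are omitted here.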

There are many other potential applications of collaborative and federated learning principles beyond smart keyboards, particularly in domains where privacy and security are essential, like healthcare and the life sciences.

LLM v. LBM

MBZUAI President and University Professor Eric Xing discussed how analysis of huge amounts of life sciences data could give researchers a deeper and more complete understanding of biology, much as transformer-based large language models have revolutionized natural language processing.

And just as with language, there is a tremendous amount of biological data waiting to be analyzed by what Xing calls large biology models.

“The modern study of biology has been marked by the continuous development of new technologies that produce more and more detailed information,” Xing said. For example, recent decades have seen the rise of DNA sequencing technologies that provide insight into the underlying genetic code of biological systems and advanced technologies that can help scientists understand how gene expression varies across different cell types. The volume and specificity of data is increasing over time, Xing noted.

Yet with these major technological advances, the study of the life sciences remains siloed in disciplinary divisions such as molecular biology, cell biology and medicine, Xing said. “When we have the ability to put all this data from across disciplines together in a model, it gives us an opportunity to move away from a reductionist approach and towards a more data-driven approach.”

Xing envisions this approach as repeatable and verifiable. While it would not necessarily explain fundamental biological phenomena, it would result in what he calls “actionable empirical understanding.”

“We have an opportunity to welcome a new paradigm for the holistic life sciences where all this data gets gathered and integrated and can be used to solve not just one task but a spectrum of tasks,” he said.

Watch Professor Xing’s full talk:

https://www.youtube.com/watch?v=wHKal_anIw0

Cooperative contributions

Collaborative learning fundamentally requires an element of cooperation. But not every participant who contributes data in a collaborative learning setting will provide data of equal value, nor will each participant derive the same benefit from using the system.

Michael I. Jordan, Pehong Chen Distinguished Professor at the University of California, Berkeley, discussed the challenge of managing information asymmetries in a decentralized system and how people can be incentivized to contribute to a collaborative system.

Watch Professor Jordan’s full talk:

https://www.youtube.com/watch?v=xlRn_KXiM5o

Jordan’s research draws on economics, using concepts such as contract theory. A real-world example of an asymmetrical data environment is a clinical trial, where a regulator like the U.S. Food and Drug Administration (FDA) wants to have drugs approved but has limited information about the safety or effectiveness of the medicine. What’s more, the incentives of the FDA and a drug developer are not aligned: the reward to a developer for getting a drug approved is huge, even if the drug doesn’t work as well as it should, while the reputational risk of a harmful drug entering the market is huge for the FDA.

Jordan and his colleagues propose an approach called statistical contracts, which rely on machine learning to provide the most appropriate compensation to drug developers while aligning incentives between the parties.

“We’ve been working on this in several papers where we create incentives to provide data where agents cooperate with each other and a principal to build a better statistical model than anyone could unilaterally,” Jordan said.

Samuel Horváth, assistant professor of machine learning at MBZUAI, discussed the issue of data heterogeneity in federated learning and proposed a concept called overpersonalization, which helps to overcome challenges that arise from differences in the data provided by participants in a federated arrangement. In a recent study, his approach was shown to improve the robustness of a federated learning system.

Watch Dr. Horváth’s full talk:

https://www.youtube.com/watch?v=wcdRps-y3CA

Price of privacy

Praneeth Vepakomma, a doctoral student at the Massachusetts Institute of Technology and MBZUAI, spoke about how privacy can be maintained in collaborative learning systems by privatizing embeddings, the representations into which data are translated so that machines can easily process and compare them.

One question that Vepakomma raised related to the value and cost of privatizing data and how that capability could be built into the design of a system.

“What does it mean for a record to be easily privatized or difficult to be privatized and how would you value this and disburse payments accordingly?”

These are important questions as scientists consider how the huge amounts of data generated every second can be shared, crunched and monetized while preserving the privacy of the people whom the data describe.

All the talks from day 1 and day 2 are available to view on our YouTube channel.

Related

Intelligent, sovereign, explainable energy decisions: powered by open-source AI reasoning

As energy pressures mount, MBZUAI’s K2 Think platform offers a potential breakthrough in decision-making clarity.

- case study ,

- ADIPEC ,

- K2 Think ,

- IFM ,

- reasoning ,

- llm ,

- energy ,

- innovation ,

- research ,

Cooling more people with fewer emissions: intelligent, efficient cooling with AI and ice batteries

MBZUAI's Martin Takáč is leading research to develop an AI-driven energy management system that optimizes the use.....

- energy ,

- cooling ,

- solar ,

- ADIPEC ,

- sustainability ,

- innovation ,

- research ,

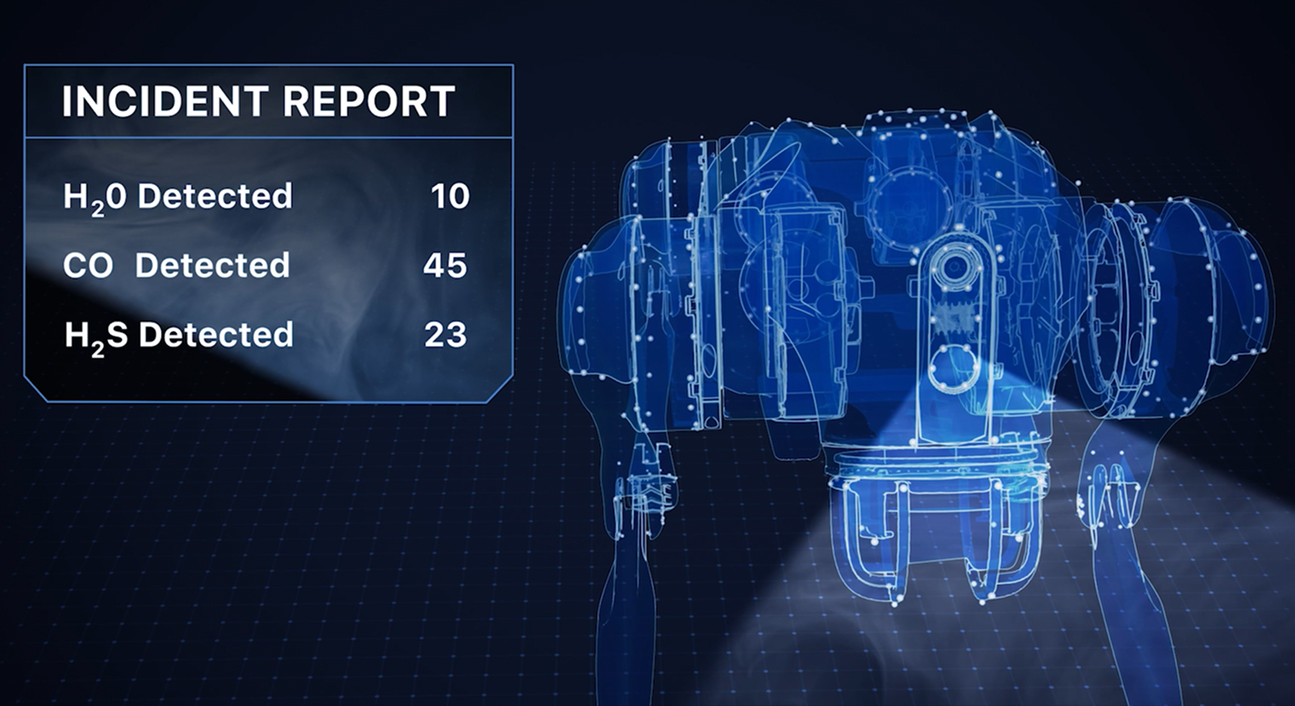

Faster, safer and smarter inspection: AI-powered robotics for industrial safety

MBZUAI's autonomous robotic system, LAIKA, is designed to enter and analyze complex industrial environments – reducing the.....

- research ,

- autonomous ,

- case study ,

- innovation ,

- infrastructure ,

- energy ,

- industry ,

- robotics ,