A prescription for privacy

Monday, December 18, 2023

Machine learning applications are being used in healthcare today for a variety of tasks, including helping physicians interpret medical images — like photographs of skin, or complex images of internal organs — to identify signs of disease. In the field of computer vision, this process is known as image classification.

When it comes to training image classification models, the general rule of thumb is that the more data they are exposed to, the better they will be. But a potential bottleneck in healthcare is a lack of data that can be used for training.

Data scarcity in the clinic can be due either to privacy restrictions or simply because a hospital doesn’t have enough relevant info in their own archives to adequately train a model. The problem is compounded by the fact that the current state-of-the-art technology used for image classification — known as vision transformers — must be instructed with huge amounts of data to achieve their impressive results.

“The typical procedure in many industries is centralized training, where you pool all the data into one location and have the model train on that large pool of data,” said Karthik Nandakumar, associate professor of computer vision at MBZUAI. “But data privacy regulations like GDPR in Europe say that a hospital must keep patient data locally, and this is one of the main constraints of training image classification applications in healthcare.”

Nandakumar and his colleagues are developing new techniques to overcome this challenge. Their approach, called federated split learning of vision transformer with block sampling (FeSViBS), focuses on ways to train an image classification application while maintaining patient privacy.

Nandakumar presented the study at the International Conference on Medical Image Computing and Computer Assisted Intervention, which was held in October in Vancouver.

The best of both models

Nandakumar and his team’s strategy allows for a form of collaborative learning to train image classification models while also maintaining privacy and security. It builds on concepts known as federated learning and split learning, adapting elements of both.

With federated learning, a central machine learning model is housed on a central server and is also distributed to local devices. The model is trained on the local devices and does not share the data it analyzes back to the central server. This helps to maintain security and privacy. When the model learns new insights from the data it analyzes, however, it phones home and provides those learnings to the central server. The approach has its weaknesses though. Attacks known as gradient inversions have exposed vulnerabilities in federated learning algorithms, raising concerns about their ability to protect privacy.

With split learning, the model is divided, or split, into multiple parts, and each part is processed by different devices in a sequential manner. These devices collaboratively process and transform the data through a series of layers, with each device having access to a portion of the model’s layers. The final model’s output is obtained by aggregating the outputs from all nodes of the system.

Nandakumar and his colleagues build on these approaches.

“In our paper, we split the machine learning model into three parts. The first part of the model, called the head, does some initial processing of the data and stays on the client side,” Nandakumar explained. “The body stays on the server side, which does some fine-grained processing. And finally, the decision about the data is made back on the client side.” Shifting from client, to server, back to client, is known as a U-shaped configuration.

The researchers also employed a technique called block sampling to improve classification of images. When an image is processed by a vision transformer neural network, it passes through many different blocks of the network that analyze features of the image. At the start, the information that is processed may be general, such as the edges of objects. As the image is processed by additional blocks, analysis becomes more detailed. “Because of the sequential nature of the neural network, each block is learning a different feature of the image,” Nandakumar said.

In most cases, data from intermediate layers of the neural network is discarded, and the image classifier would make a decision about what an image is when it is processed in the final layer, Nandakumar said. With block sampling, however, data is randomly sampled from the different layers of the neural network, including the intermediate layers.

“What we are suggesting in this paper is that intermediate layers have some valuable information and if we can tap into some of these intermediate features, we may be able to improve our final classification.”

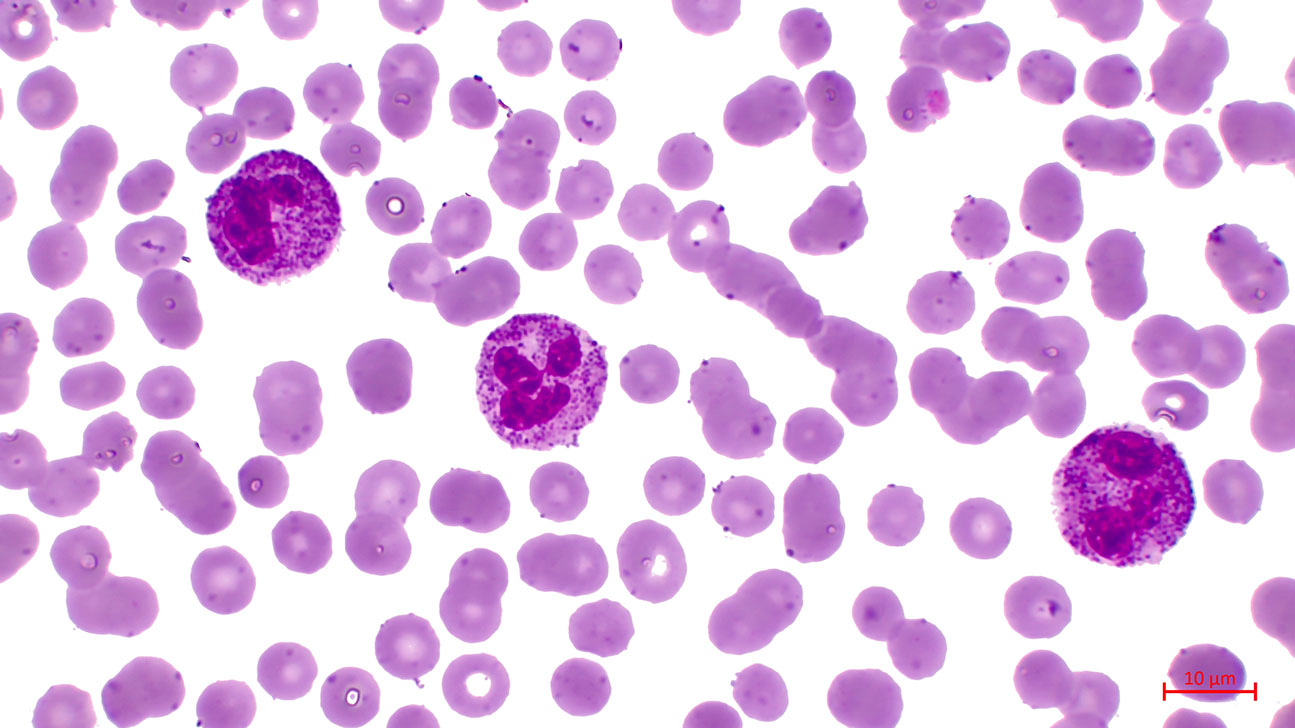

The researchers evaluated the performance of FeSViBS on three medical imaging data sets, which included images of skin cancers and blood cells. Their application performed consistently across all of them.

Faris Almalik and Naif Alkhunaizi, research assistants at MBZUAI, led the study. Ibrahim Almakky, a research associate at MBZUAI, contributed to it.

In future experiments, Nandakumar and the team intend to investigate the security of their approach, use it to analyze different kinds of medical images and for different tasks.

Related

MBZUAI and Minerva Humanoids announce strategic research partnership to advance humanoid robotics for applications in the energy sector

The partnership will facilitate the development of next-generation humanoid robotics tailored for safety-critical industrial operations.

Read MoreAI and the silver screen: how cinema has imagined intelligent machines

Movies have given audiences countless visions of how artificial intelligence might affect our lives. Here are some.....

- AI ,

- cinema ,

- art ,

- fiction ,

- science fiction ,

- artificial intelligence ,

Special delivery: a new, realistic measure of vehicle routing algorithms

A new benchmark by researchers at MBZUAI simulates the unpredictable nature of delivery in cities, helping logistics.....

- delivery ,

- logistics ,

- machine learning ,

- research ,

- computer vision ,

- conference ,

- neurips ,

- benchmark ,