Old images to anticipate the future

Monday, October 30, 2023

Video is a rich source of information for many industries. Some common uses of video include security and traffic cameras. There are also applications in the life sciences to analyze experiments in the lab and in healthcare to monitor the wellness and safety of patients. But while video often contains valuable information, analyzing it is a time-intensive activity for a person.

One way of making video more usable to people is an artificial intelligence method called video question answering, which combines insights from the fields of computer vision and natural language processing. The goal of video question answering, as its name suggests, is to generate answers to questions that people pose about the content of videos.

You can imagine a cardiologist who wants to search through an archive of videos of his patients’ heart exams to find examples of a certain anomaly. It’s possible that the physician’s archive has been tediously catalogued by hand by an assistant over the years and with a simple search he easily finds what he’s looking for. But this scenario is unlikely. Even if the archive is organized, the classification system that the archive uses may not include the term the doctor is looking for. Without a way to quickly search through hours or perhaps even days of footage, he is not going to be able to review each video one by one.

While this is a conceptually simple use of video question answering, in that it only identifies the content of a video, scientists at MBZUAI are exploring applications that integrate computer reasoning, with the goal of determining the causes of events captured on video.

From image to video

Current approaches to training video question answering applications are expensive and require huge amounts of computing power, explained Guangyi Chen, a postdoctoral research fellow at MBZUAI. In a recent study, Chen and colleagues from MBZUAI and other universities have proposed how insights from analyzing still images can be translated into the realm of video while using fewer resources, potentially providing a more efficient way of approaching video question answering.

“Our motivation for this project is that we want to use models that have been pre-trained on image data and use them to understand concepts in video,” Chen said.

The research was presented at the International Conference on Computer Vision held October 2 to 6 in Paris. The other authors of the research are Kun Zhang, associate professor of machine learning and director of the Center for Integrative Artificial Intelligence (CIAI) at MBZUAI; Xiao Liu of Eindhoven University of Technology; Guangrun Wang and Philip H.S. Torr of the University of Oxford; Xiao-Ping Zhang of Shenzhen International Graduate School, Tsinghua University and Toronto Metropolitan University; and Yansong Tang of Shenzhen International Graduate School, Tsinghua University.

Using techniques that were originally developed to make sense of images and translating them to video is efficient but creates another problem: images are frozen in time while videos have a temporal dimension. Effective video question answering must match the meaning of language to the meaning of a moving image, all within the dimension of time. Training tools that have been designed for still images can’t account for the temporal, dynamic nature of video, a mismatch that is described as a “domain gap.”

To address this challenge, Chen and his team developed what they call a temporal adaptor, or Tem-adapter, which is a program that interprets the relationship between language and image and learns how these relations change over time. Tem-adapter employs a technique called language-guided autoregression that helps it anticipate what will come next in time. Autoregression is a concept that is used in large language models like ChatGPT. With autoregression, a model analyzes a sequence of words and, based on that sequence, anticipates what the most likely next word will be. It then goes through this process again with the new word added to the sequence and anticipates the next most likely word. Tem-adapter uses a similar concept.
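The word-by-word loop described above can be illustrated with a toy sketch in Python. This is not Tem-adapter or the researchers' code, and it is far simpler than a large language model: as a stated simplification, it predicts each next word only from the single previous word, using counts from a tiny made-up corpus. The function names `fit_bigram_counts` and `generate`, and the corpus itself, are hypothetical and chosen purely for illustration.

```python
from collections import Counter, defaultdict


def fit_bigram_counts(tokens):
    """Count how often each word follows each other word (toy 'training')."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, prompt, steps):
    """Autoregressive loop: predict a word, append it, then predict again."""
    sequence = list(prompt)
    for _ in range(steps):
        # Simplification: condition only on the most recent word.
        followers = counts.get(sequence[-1])
        if not followers:
            break  # no known continuation for the last word
        next_word = followers.most_common(1)[0][0]
        sequence.append(next_word)  # the new word becomes part of the input
    return sequence


# Made-up corpus, assumed for illustration only.
corpus = "the light turns red the car slows the car slows the car stops".split()
counts = fit_bigram_counts(corpus)
print(generate(counts, ["the"], 3))
```

The key point is the feedback step inside the loop: each predicted word is appended to the sequence and becomes the basis for the next prediction, which is the same pattern the article describes being carried over to video.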

A goal for Chen and his team is to translate this concept to the visual world, allowing a machine to predict a future state of a video based on what has happened previously in the video.

In the study, the researchers used Tem-adapter to analyze datasets of traffic videos. They wanted to find out if their approach was able to interpret how traffic videos changed over time and if Tem-adapter could provide the correct answer when asked about the causes of accidents caught on camera. When compared to current benchmarks, and even with much lower training costs, the approach developed by Chen and his colleagues was effective and was in fact shown to be the most accurate of all models in determining the cause of accidents.

“This study shows two things. First, that we can use an image model for a video-based task, even with the temporal gap. Second, that image models can empower models for video representation and reasoning,” Chen said.

Looking ahead to what’s next on the research docket, Chen said: “While we found that the understanding and the prediction capabilities of this approach were effective, we would still like to better understand the reasoning aspect and why it makes these interpretations.”


Related

Intelligent, sovereign, explainable energy decisions: powered by open-source AI reasoning

As energy pressures mount, MBZUAI’s K2 Think platform offers a potential breakthrough in decision-making clarity.

- case study ,

- ADIPEC ,

- K2 Think ,

- IFM ,

- reasoning ,

- llm ,

- energy ,

- innovation ,

- research ,

Cooling more people with fewer emissions: intelligent, efficient cooling with AI and ice batteries

MBZUAI's Martin Takáč is leading research to develop an AI-driven energy management system that optimizes the use.....

- energy ,

- cooling ,

- solar ,

- ADIPEC ,

- sustainability ,

- innovation ,

- research ,

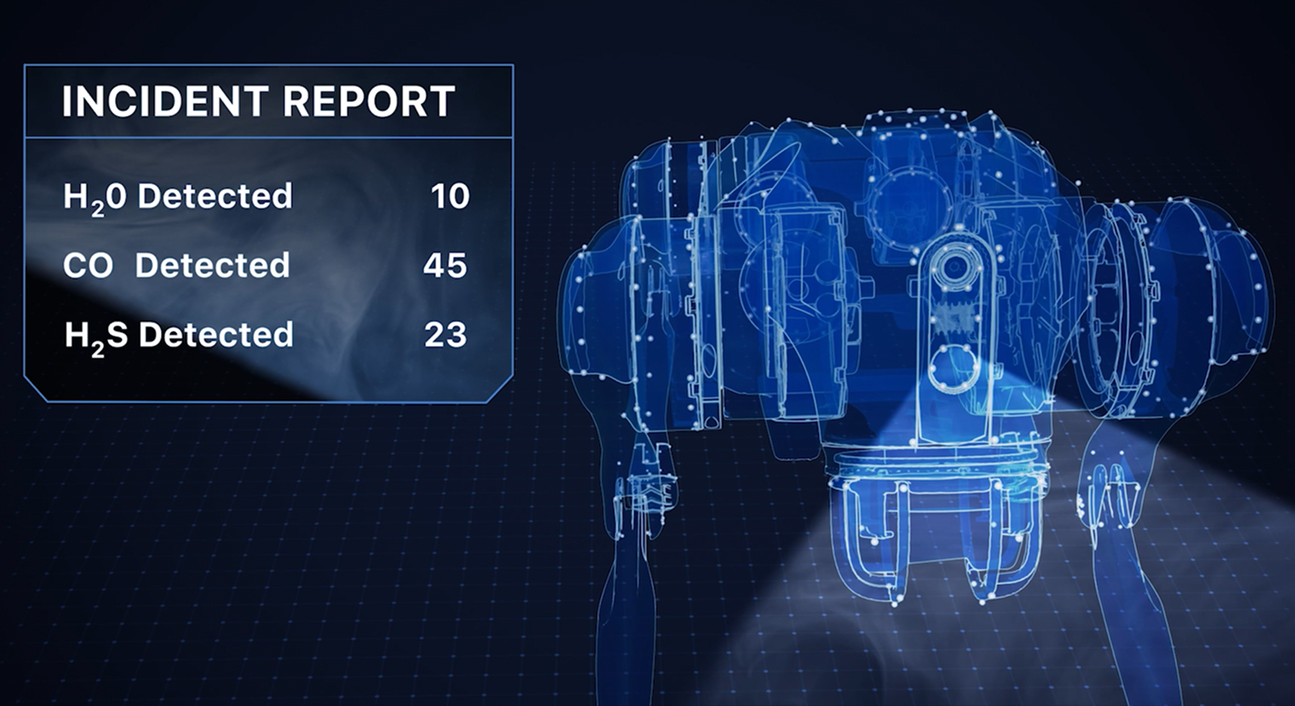

Faster, safer and smarter inspection: AI-powered robotics for industrial safety

MBZUAI's autonomous robotic system, LAIKA, is designed to enter and analyze complex industrial environments – reducing the.....

- research ,

- autonomous ,

- case study ,

- innovation ,

- infrastructure ,

- energy ,

- industry ,

- robotics ,