Mind meld: agentic communication through thoughts instead of words

Tuesday, December 23, 2025

The fact that we can communicate with AI agents in our own language is remarkable. It makes the experience intuitive and understandable. But when agents communicate with each other, human language might not be necessary and can even be a problem. This raises an interesting question: Can AI agents bypass human language altogether and communicate simply by reading each other’s minds?

This is the idea proposed by a team of researchers from MBZUAI, Carnegie Mellon University, and Meta AI that was recently presented at the 39th Annual Conference on Neural Information Processing Systems (NeurIPS 2025) in San Diego, California.

The researchers introduce a new approach to multi-agent collaboration where agents communicate through internal, latent representations that are akin to “thoughts.” They show that when agents communicate through latent thoughts rather than text, they can coordinate more effectively, reach consensus faster, and solve problems more accurately.

According to the researchers, the study is the first to consider how latent thoughts can be used for communication between agents. It also provides a method for recovering these thoughts from agents’ internal states.

The authors of the study are Yujia Zheng, Zhuokai Zhao, Zijian Li, Yaqi Xie, Mingze Gao, Lizhu Zhang, and Kun Zhang.

I know what you’re thinking

Zijian Li, a postdoctoral researcher at MBZUAI and co-author of the study, says that the team’s idea was sparked by observing limitations in the way that agents currently communicate.

When agents collaborate with each other, they typically do so through natural language. Language is powerful, of course, but it is also inefficient — “sequential, ambiguous, and imprecise,” as the researchers describe it. They cite recent studies that show that many failures in multi-agent collaboration are due to vague or confusing messages.

We rely on language because we can’t communicate complex ideas through other means, like telepathy. AI agents aren’t beholden to the same limitations and might be able to exchange ideas in a purer form. Perhaps they could bypass “the linguistic bottleneck that naturally occurs with human-like communication,” Li says.

In the team’s framework, latent thoughts relate to the underlying structure of an agent’s reasoning or decision-making process. Li describes them as high-bandwidth representations of a model’s internal state that are abstracted from any specific linguistic form.

Thoughts instead of words

The researchers call their framework ThoughtComm, and it works by extracting latent thoughts from agents’ internal states before communication happens. The latent thoughts are then selectively shared based on their relevance. Some represent common ground that is useful to more than one agent, while others are private or unique to specific agents.
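
To make the selective-sharing step concrete, here is a minimal Python sketch. It is not ThoughtComm’s implementation: it assumes the mapping from agents to the latent thoughts they depend on is already known (the hypothetical `structure` argument), treats each thought as a single number, and uses made-up thought names and values. Each agent receives only the thoughts relevant to it, labeled shared if at least one other agent also depends on them and private otherwise.

```python
# Minimal sketch, NOT ThoughtComm's implementation. Assumes each agent's
# dependence on latent thoughts is already known (hypothetical `structure`)
# and treats each latent thought as a single number for readability.
from collections import Counter


def route_thoughts(
    structure: dict[str, set[str]], thoughts: dict[str, float]
) -> dict[str, dict[str, dict[str, float]]]:
    """For each agent, collect only the latent thoughts relevant to it,
    split into shared (relevant to 2+ agents) and private (relevant to 1)."""
    # How many agents depend on each latent thought.
    agreement = Counter(t for deps in structure.values() for t in deps)
    messages = {}
    for agent, deps in structure.items():
        relevant = sorted(deps)  # thoughts an agent does not depend on are never sent
        messages[agent] = {
            "shared": {t: thoughts[t] for t in relevant if agreement[t] > 1},
            "private": {t: thoughts[t] for t in relevant if agreement[t] == 1},
        }
    return messages


# Hypothetical values echoing the study's flight example.
thoughts = {"speed": 0.9, "luggage": 0.4, "punctuality": -0.7}
structure = {
    "agent_1": {"speed", "luggage"},
    "agent_2": {"speed", "punctuality"},
}
for agent, msg in route_thoughts(structure, thoughts).items():
    print(agent, msg)
```

Here both agents receive the speed thought as shared, while luggage and punctuality stay private to agent_1 and agent_2 respectively. A real system would route learned vectors rather than named scalars.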

The concept is similar to the way that two people might approach the same problem from different perspectives. Some of their ideas about how to solve the problem will overlap, while others will diverge.

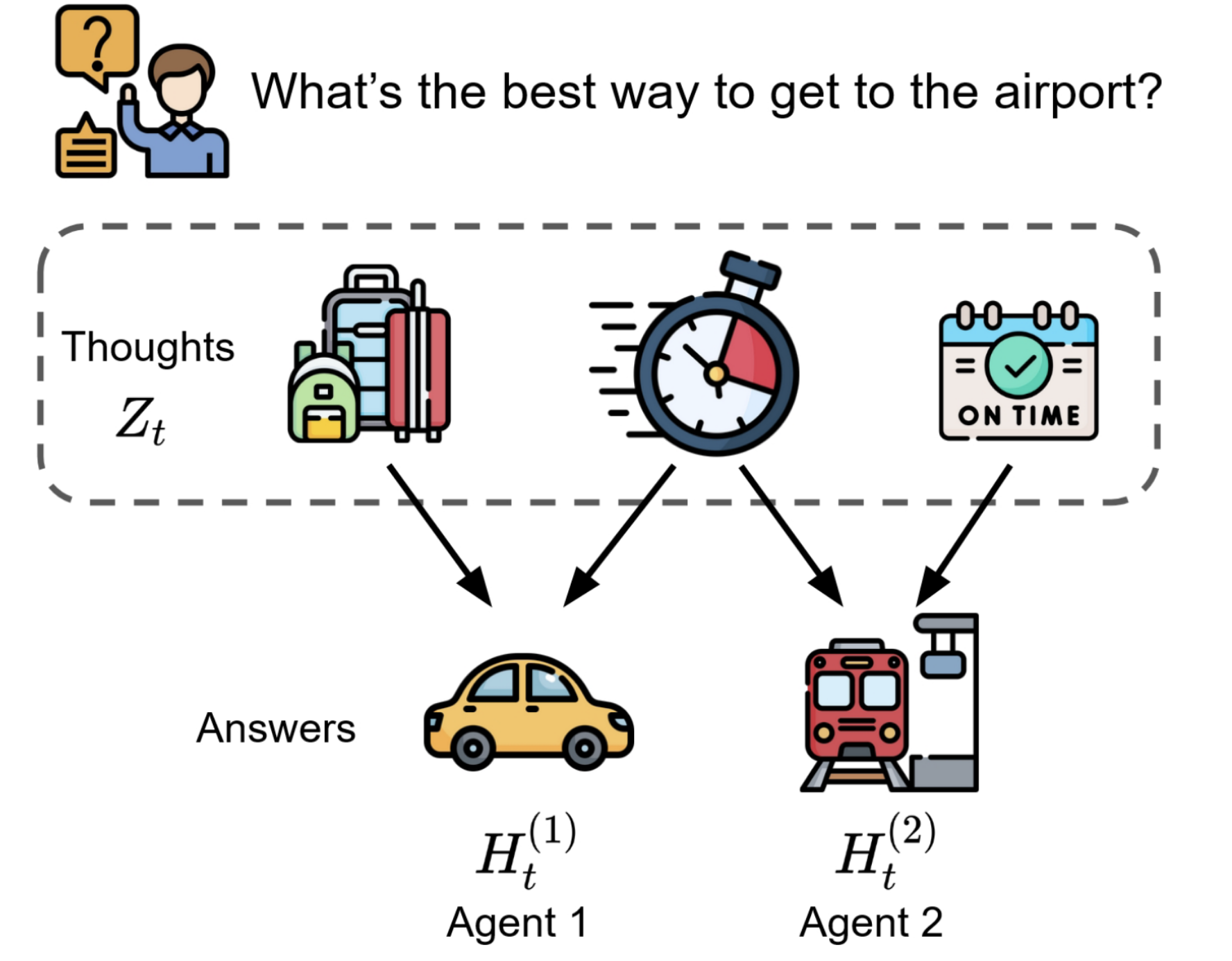

In the study, the researchers illustrate the idea with an example of two agents tasked with determining the best mode of transport to catch a flight. Both agents produce a latent thought related to speed, but one also produces a thought about luggage and the other a thought about punctuality. This difference leads the agents to different choices: the one that prioritizes luggage chooses a taxi, while the other chooses a train.

Each agent answers the same question by selecting a subset of latent thoughts. Agent 1 chooses a car based on the need to carry luggage, while Agent 2 selects a train for schedule punctuality. Both share the thought of speed.

For any two agents, the framework can disentangle which latent thoughts are shared and which are private. By analyzing results across different pairs, the system reconstructs how latent thoughts are distributed across a group of agents.
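
The aggregation from pairs to a whole group can be sketched as follows. This is a toy illustration, not the authors’ algorithm: simple set operations on hypothetical, already-known dependency sets stand in for the learned pairwise step, and the third agent and its “cost” thought are invented to extend the flight example. Each pairwise split is merged into a single map from each thought to the agents that hold it.

```python
# Toy sketch, NOT the authors' algorithm: set operations on hypothetical,
# already-known dependency sets stand in for the learned pairwise step.
from itertools import combinations


def pairwise_split(deps_a: set[str], deps_b: set[str]):
    """Split one pair's thoughts into (shared, private to a, private to b)."""
    return deps_a & deps_b, deps_a - deps_b, deps_b - deps_a


def group_structure(agent_deps: dict[str, set[str]]) -> dict[str, set[str]]:
    """Merge every pairwise split into a map: thought -> agents that hold it."""
    owners: dict[str, set[str]] = {}
    for a, b in combinations(sorted(agent_deps), 2):
        shared, only_a, only_b = pairwise_split(agent_deps[a], agent_deps[b])
        for thought in shared:
            owners.setdefault(thought, set()).update({a, b})
        for thought in only_a:
            owners.setdefault(thought, set()).add(a)
        for thought in only_b:
            owners.setdefault(thought, set()).add(b)
    return owners


# Hypothetical three-agent extension of the flight example.
agent_deps = {
    "agent_1": {"speed", "luggage"},
    "agent_2": {"speed", "punctuality"},
    "agent_3": {"luggage", "cost"},
}
for thought, holders in sorted(group_structure(agent_deps).items()):
    kind = "shared" if len(holders) > 1 else "private"
    print(f"{thought}: {kind}, held by {sorted(holders)}")
```

No single pair reveals the full picture (agents 2 and 3 share nothing, for instance), but merging all pairs shows that speed is shared by agents 1 and 2, luggage by agents 1 and 3, and punctuality and cost are private.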

This is done with a module called a sparsity-regularized autoencoder, which extracts latent thoughts from agents’ hidden states and infers the underlying mapping between agents and their thoughts. The approach allows agents not only to understand what others are thinking but also to reason about which thoughts are shared and which are private.
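
The core idea of a sparsity-regularized autoencoder can be shown in a few lines of plain Python. This is a minimal sketch under stated assumptions, not ThoughtComm’s architecture: it uses a linear encoder and decoder, an L1 penalty on the decoder weights as a simple stand-in for whatever sparsity regularizer the paper actually uses, and a hypothetical layout in which hidden-state dimensions 0-1 belong to one agent and 2-3 to another. The functions `sparse_ae_loss` and `thought_mask` are illustrative names. Because the penalty drives unneeded decoder weights toward zero, the surviving nonzero weights reveal which thoughts each agent’s state depends on.

```python
# Minimal sketch, NOT ThoughtComm's architecture: a linear autoencoder whose
# decoder carries an L1 penalty, standing in for the paper's sparsity regularizer.
# The hidden-state layout (which dims belong to which agent) is hypothetical.


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def sparse_ae_loss(states, enc, dec, lam: float) -> float:
    """Mean squared reconstruction error plus lam * L1 norm of the decoder."""
    recon = 0.0
    for h in states:
        z = matvec(enc, h)  # encode: hidden state -> latent thoughts
        h_hat = matvec(dec, z)  # decode: latent thoughts -> hidden state
        recon += sum((a - b) ** 2 for a, b in zip(h, h_hat))
    # During training, this term pushes unneeded decoder weights toward 0.
    l1 = sum(abs(w) for row in dec for w in row)
    return recon / len(states) + lam * l1


def thought_mask(dec, agent_dims: dict[str, list[int]], tol: float = 1e-6):
    """Read the agent-to-thought mapping off the decoder's sparsity pattern:
    thought j belongs to an agent if any of that agent's dims depends on it."""
    n_thoughts = len(dec[0])
    return {
        agent: {j for j in range(n_thoughts) if any(abs(dec[d][j]) > tol for d in dims)}
        for agent, dims in agent_dims.items()
    }


# Hypothetical setup: dims 0-1 are agent_1's state, dims 2-3 are agent_2's;
# thoughts 0, 1, 2 play the roles of speed, luggage, punctuality.
dec = [
    [1.0, 0.5, 0.0],
    [0.0, 1.0, 0.0],
    [1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0],
]
enc = [  # chosen so that enc @ dec is the identity (a left inverse of dec)
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
states = [[2.0, 2.0, 1.0, 3.0], [0.0, -1.0, 0.5, 2.0]]  # generated as dec @ z
agent_dims = {"agent_1": [0, 1], "agent_2": [2, 3]}

print(sparse_ae_loss(states, enc, dec, lam=0.1))
print(thought_mask(dec, agent_dims))
```

Since the example states were generated by this decoder and the encoder inverts it, the reconstruction term is zero and the loss reduces to the L1 penalty. The mask assigns thoughts {0, 1} to agent_1 and {0, 2} to agent_2, so thought 0 (speed) is shared. A real version would learn `enc` and `dec` by minimizing this loss on actual model hidden states; that training loop is omitted here.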

The idea is that these latent thoughts can be shared between models instead of exchanging text, with the result being more direct and less ambiguous communication.

The researchers formalize this process with a generative model in which agents’ states are produced from latent thoughts by an unknown function. They also prove that the shared and private thoughts recovered this way “reflect the true internal structure of agent reasoning,” as they put it.
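
Schematically, and in our own notation rather than the paper’s, the generative model can be written as follows. The symbols (n agents, k latent thoughts, agent states h, and index sets S_i) are assumptions introduced for illustration. The joint state of all agents is generated from the latent thoughts by an unknown function f, and a thought counts as relevant to agent i if agent i’s state actually depends on it:

$$
H_t = \big(h_t^{(1)}, \dots, h_t^{(n)}\big) = f(Z_t), \qquad Z_t = (z_{t,1}, \dots, z_{t,k})
$$

$$
\mathcal{S}_i = \Big\{\, j : \tfrac{\partial h^{(i)}}{\partial z_j} \not\equiv 0 \,\Big\}, \qquad
\text{shared}(i, i') = \mathcal{S}_i \cap \mathcal{S}_{i'}, \qquad
\text{private}_i(i, i') = \mathcal{S}_i \setminus \mathcal{S}_{i'}
$$

In the flight example, with z_1 = speed, z_2 = luggage, and z_3 = punctuality, this gives S_1 = {1, 2} and S_2 = {1, 3}, so the only shared thought is speed.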

The approach assumes that any two agents that use the same base model will produce some shared and some private thoughts. The similarities are the result of shared model architecture and pretraining data, and the differences are due to factors such as learned preferences that aren’t shared across agents, Li says.

Testing ThoughtComm

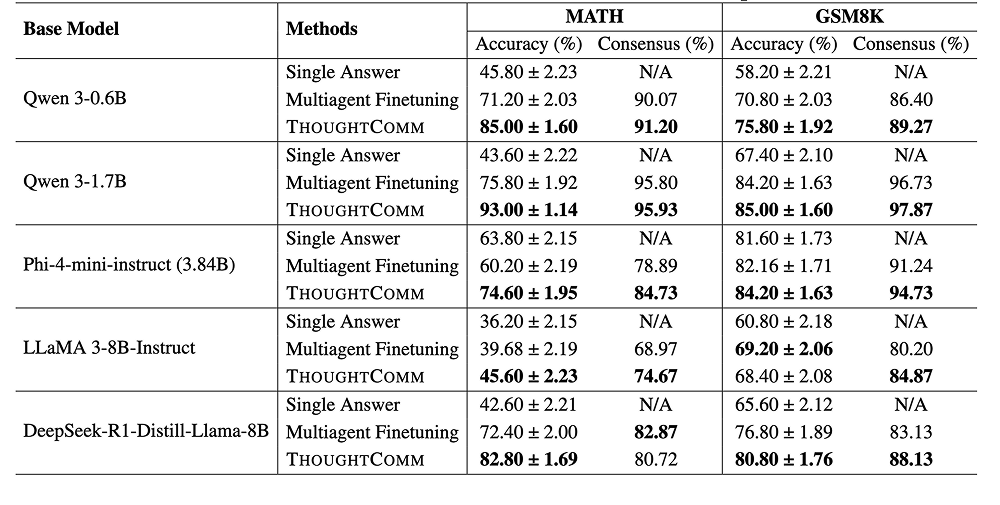

The researchers tested several language models on two math benchmarks (MATH and GSM8K) under three collaboration methods: single answer, multiagent finetuning, and ThoughtComm. They found that ThoughtComm performed better than the other methods on both datasets.

Across the models, ThoughtComm achieved a relative improvement of 67.23% over single answer and 19.06% over multiagent finetuning, the current state of the art. With Qwen3-1.7B, ThoughtComm reached an accuracy of 93% on MATH, an absolute gain of 17.2 percentage points over multiagent finetuning.
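
Absolute and relative improvements are easy to confuse. The 67.23% and 19.06% figures are relative improvements across several models and cannot be recomputed from the numbers given here, but the Qwen3-1.7B result can be worked through. Assuming the reported gain of 17.2 is in percentage points, the short sketch below derives the implied multiagent-finetuning baseline and the corresponding relative improvement; the helper names are illustrative.

```python
def baseline_from_absolute_gain(new_acc: float, gain_pp: float) -> float:
    """Baseline accuracy implied by a new accuracy and an absolute gain (points)."""
    return new_acc - gain_pp


def relative_improvement(new_acc: float, baseline_acc: float) -> float:
    """Relative improvement in percent: (new - baseline) / baseline * 100."""
    return (new_acc - baseline_acc) / baseline_acc * 100


# Qwen3-1.7B on MATH, as reported: 93% accuracy, +17.2 points.
new = 93.0
base = baseline_from_absolute_gain(new, 17.2)
print(f"implied baseline: {base:.1f}%")
print(f"relative improvement: {relative_improvement(new, base):.1f}%")
```

Under that reading, multiagent finetuning scored about 75.8% on MATH with this model, and ThoughtComm’s 17.2-point gain corresponds to a relative improvement of roughly 22.7%.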

The researchers also found that when it came to consensus, ThoughtComm outperformed all baselines, “indicating superior inter-agent alignment enabled by efficient mind-to-mind communication,” as they describe it.

While the improvements highlight the potential of the approach when it comes to multiagent collaboration, Li says that there are areas where the method didn’t outperform traditional language-based communication.

For example, ThoughtComm wasn’t as good as traditional methods in scenarios that required creative and open-ended problem solving. “The latent representations may not be as rich or interpretable as natural language in these situations, especially when models need to engage in nuanced dialogue or reasoning that is difficult to capture in purely latent form,” Li says.

Beyond words

One of the key strengths of the framework is that it isn’t strictly tied to text: it could be applied to images, audio, or even sensor data. This would allow agents to coordinate on tasks that require richer sensory information, such as robotic manipulation, object recognition, or natural scene interpretation.

Looking ahead, the researchers envision scaling their approach to enable communication across many agents. If the future is to be populated by billions (trillions?) of AI agents working on countless tasks, these systems will likely need a way of communicating that is more efficient than human language.

“By moving beyond text and directly sharing internal representations, we can pave the way for more advanced, adaptable, and intelligent multi-agent systems,” Li says.

