Highlighting LLM safety: How the Libra-Leaderboard is making AI more responsible

Tuesday, February 18, 2025

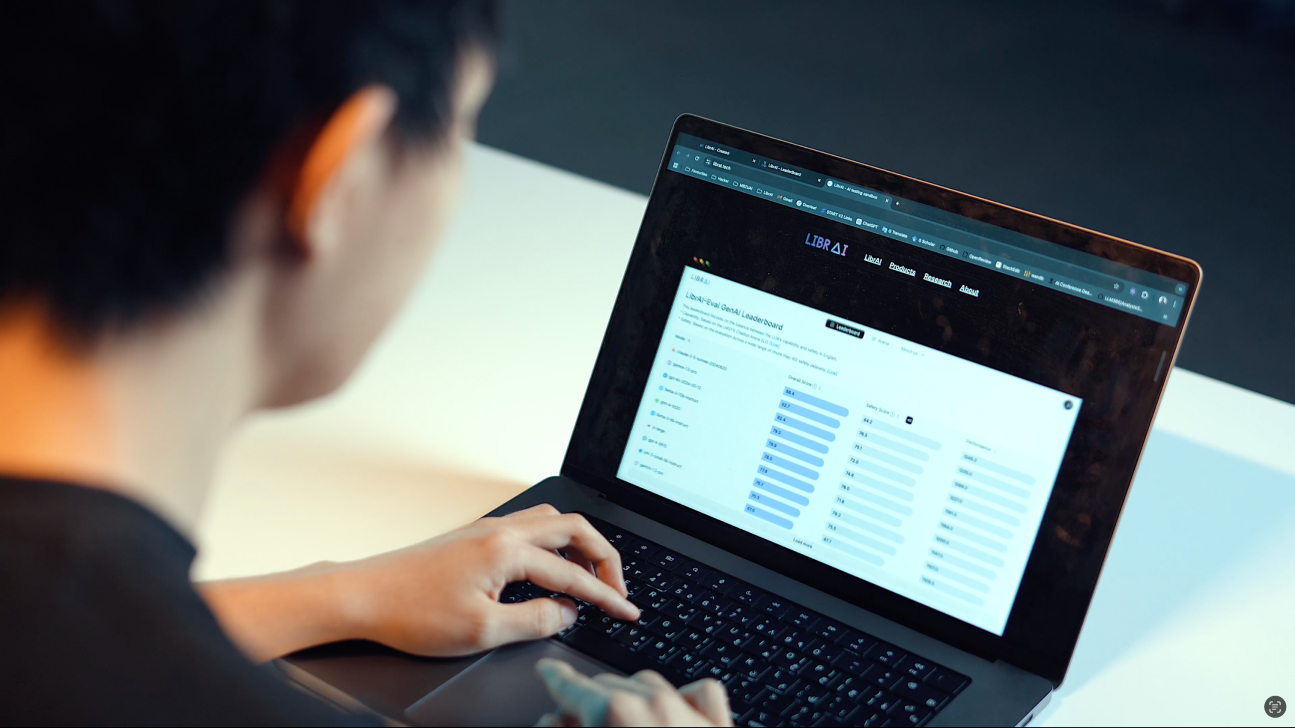

MBZUAI-based safety monitoring start-up LibrAI has taken another step towards ensuring safe, responsible and ethical AI with the launch of its Libra-Leaderboard: an evaluation framework for large language models (LLMs) that seeks to close the gap between capability and safety.

Founded in 2023 with support from the MBZUAI Incubation and Entrepreneurship Center (MIEC), LibrAI offers a platform that lets developers test their AI models in a secure environment to evaluate and improve their safety, supporting more responsible development and deployment of AI.

The new leaderboard offers a comprehensive assessment of 26 mainstream LLMs, including Claude, ChatGPT and Gemini, using 57 datasets, most of them from 2023 onwards. It assigns each model scores based on its capability and safety, with the aim of guiding future AI development.

“Most people are focused on AI’s capabilities: can it communicate in many languages, can it reason, pass a math exam and so on. Our motivation was driven by the need to ensure AI can develop safely; as it’s developed quickly, we don’t lose sight of safety,” says Haonan Li, postdoctoral research fellow at MBZUAI and chief technology officer at LibrAI.

The LibrAI team designed the leaderboard’s scoring system to reward balance. It evaluates categories such as bias, misinformation and oversensitivity to benign prompts, providing a holistic view of a model’s reliability. Each model then receives a ‘capability score’, a ‘safety score’ and an ‘overall score’.

“The overall score is not simply the average of the capability score and safety score,” explains Li. “It penalizes discrepancies between capability and safety scores, ensuring that a higher overall score reflects models where capability and safety are closely aligned. In our view, reducing the gap between capability and safety is what models should be trying to achieve.”
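The article does not spell out the exact formula LibrAI uses, so the following is only an illustrative sketch of how an overall score can penalize a gap between capability and safety. It assumes both scores are normalized to the range 0 to 1 and measures how far a model sits from the ideal point where both equal 1. The function names `balanced_overall_score` and `simple_average` are hypothetical and are not taken from LibrAI.

```python
import math


def simple_average(capability: float, safety: float) -> float:
    """Plain mean of the two scores; it ignores any gap between them."""
    return (capability + safety) / 2


def balanced_overall_score(capability: float, safety: float) -> float:
    """Hypothetical balance-aware overall score (illustration only, not LibrAI's formula).

    Assumes both inputs are normalized to [0, 1]. The score is 1 minus the
    Euclidean distance from the ideal point (1, 1), scaled so that (0, 0)
    maps to 0 and (1, 1) maps to 1.
    """
    for name, value in (("capability", capability), ("safety", safety)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    # For a fixed average, this distance is smallest when the two scores are
    # equal, so any imbalance between them lowers the resulting score.
    distance = math.hypot(1.0 - capability, 1.0 - safety)
    return 1.0 - distance / math.sqrt(2)


if __name__ == "__main__":
    # Two hypothetical models with the same simple average of 0.7.
    for cap, safe in [(0.7, 0.7), (0.9, 0.5)]:
        print(
            f"capability={cap}, safety={safe}: "
            f"average={simple_average(cap, safe):.2f}, "
            f"balanced={balanced_overall_score(cap, safe):.2f}"
        )
```

In this sketch, both hypothetical models average 0.70, but the balanced one keeps an overall score of about 0.70 while the lopsided one drops to about 0.64, which mirrors the idea Li describes of rewarding models whose capability and safety are closely aligned.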

Education and evaluation

LibrAI also launched the Interactive Safety Arena to complement the Libra-Leaderboard. The Arena is a platform designed to engage the public and educate them on AI safety. It allows users to test AI models with adversarial prompts, receive tutoring, and provide feedback, raising awareness about potential risks in LLMs.

“The Arena provides an interactive, tutorial-like experience designed to educate people about AI safety,” says Li. “For example, one can enter some risky prompts and send them to two anonymous models. The Arena generates a comparison of the two models’ outputs. The user can choose which model is safer and more helpful. The scoring of the models is reflected in the leaderboard.”

Looking ahead, the LibrAI team plans to update the leaderboard regularly with new datasets and evaluation criteria to address emerging vulnerabilities in AI systems, and to gamify aspects of the Arena to engage more people.

Beyond these tools, the team is working on a flagship AI evaluation product that it hopes will set a new standard in AI safety.

“We are creating an evaluator platform that helps organizations pre-emptively assess AI systems for alignment, reliability and ethical compliance. We believe it will be pivotal in equipping industries with the tools they need to safely and responsibly harness AI potential.”
