Teaching language models about Arab culture through cross-cultural transfer

Monday, December 29, 2025

Even though Arabic is the native language of more than 400 million people, language models have been shown to struggle on benchmarks that measure Arab cultural understanding.

The problem is complicated by the fact that the Arab world is itself quite diverse. While there are cultural similarities across the 22 countries that count Arabic as an official language, there are also important differences among them related to food, family life, and daily habits. These nuances are often collapsed in language models, as they tend to have a deeper understanding of the cultures of larger countries, like Egypt, than of smaller ones, like the UAE.

Researchers at MBZUAI have explored a new way to remedy these limitations by testing whether cultural knowledge about one country can be used to improve a model's knowledge of another, a process they describe as cross-cultural commonsense transfer.

Using two alignment methods, in-context learning and demonstration-based reinforcement (DITTO), the researchers improved performance on questions about other countries by 34% in one case and by an average of 10% across multilingual models. What’s also impressive is that they did this by providing models with only a dozen demonstrations.

The team’s findings were recently presented at the 2025 Conference on Empirical Methods in Natural Language Processing (EMNLP 2025) in Suzhou, China. The authors of the study are Saeed Almheiri, Rania Hossam, Mena Attia, Chenxi Wang, Preslav Nakov, Timothy Baldwin, and Fajri Koto.

Exploring cross-cultural transfer

At the heart of the team’s approach is a concept known as transfer learning, where knowledge gained by training on one dataset can be used to improve performance on a different one. “We were trying to do something similar, but specifically related to culture,” explains Saeed Almheiri, a doctoral student in Natural Language Processing at MBZUAI and co-author of the study.

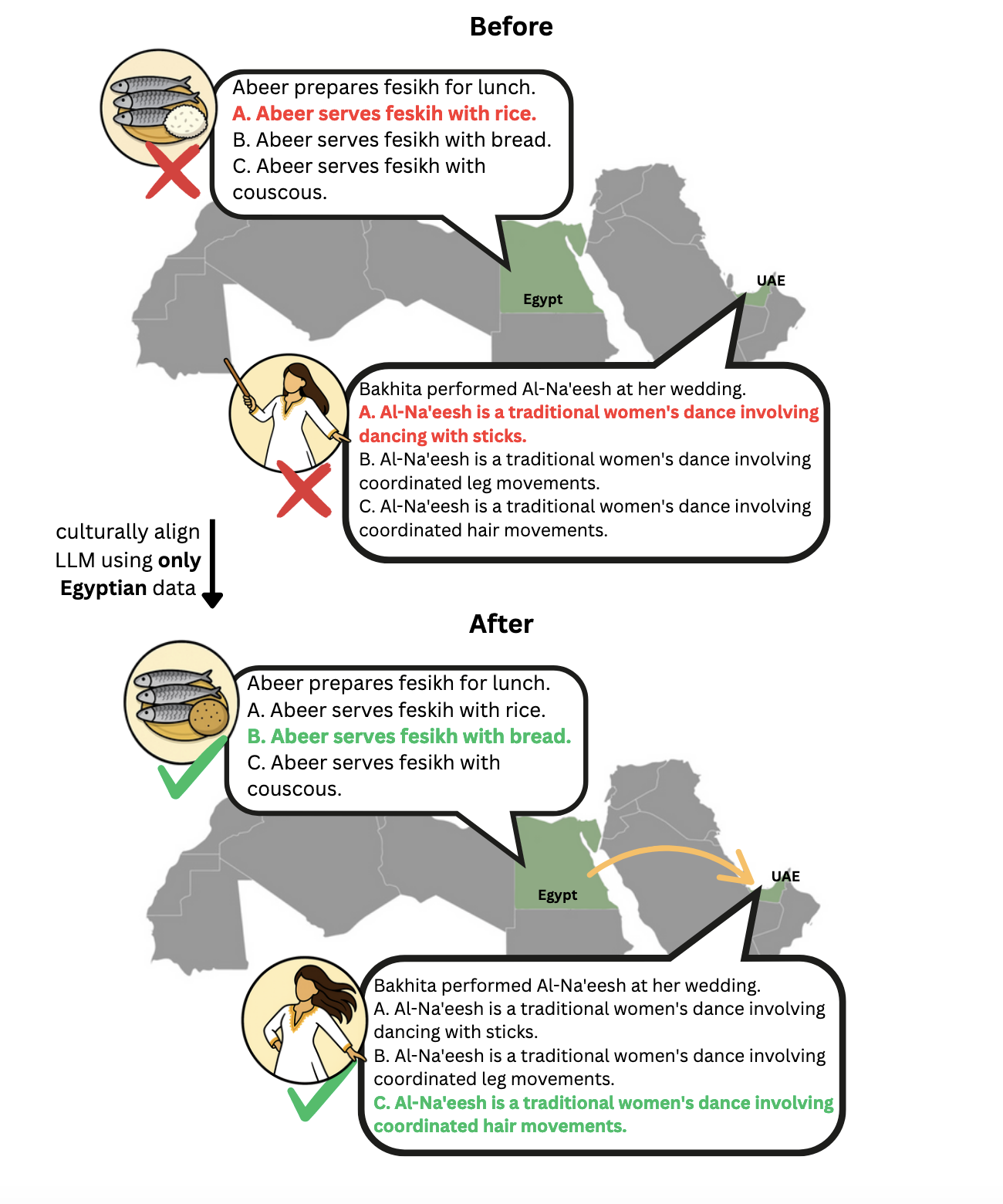

Researchers from MBZUAI explored how demonstrations about one culture could be used to improve a language model’s ability to answer questions about another culture. This example shows how training on data about Egypt improved performance on questions about the UAE.

Almheiri has a personal interest in the way language models represent culture. “I’ve seen models try to mimic the Emirati dialect and I’ve found them to be quite incorrect, so I feel that it’s my responsibility to engage in this kind of cultural NLP work.”

Rania Hossam, a master’s student in Natural Language Processing and co-author of the study, says that while there are similarities between the regions of the Arabic-speaking world, it’s important to develop models that are aligned to the cultural distinctions of each country.

Almheiri and Hossam’s work builds on another project by MBZUAI researchers, a cultural commonsense reasoning benchmark dataset called ArabCulture. It covers 13 Arabic-speaking countries and 12 domains of daily life, such as family, food, habits, and games.

The researchers selected 24 demonstration examples from the ArabCulture dataset for each country, comprising two sets of 12 examples: one representing the single domain of food and the other covering each domain of daily life. They used each of these demonstration sets to align two multilingual language models and two Arabic language models using in-context learning and DITTO. They then evaluated each configuration on test sets from different countries or domains. The goal was to see if aligning a language model to the culture of one Arab country or domain would enhance the model’s performance on questions about other countries or domains.
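The in-context learning half of this setup can be sketched roughly as follows. This is a minimal illustration, not the authors' code: the function names, the prompt template, and the dictionary fields are all assumptions, and the actual prompts used in the study may look quite different. The idea shown is that solved demonstrations from a source country are placed ahead of an unsolved question from a target country, and that every ordered (source, target) pair of distinct countries forms one transfer experiment.

```python
from itertools import permutations


def format_item(question, options, answer=None):
    """Render one multiple-choice item; leave the answer blank for the test question."""
    letters = "ABCDEFGH"
    lines = [f"Question: {question}"]
    for letter, option in zip(letters, options):
        lines.append(f"{letter}. {option}")
    lines.append(f"Answer: {answer}" if answer is not None else "Answer:")
    return "\n".join(lines)


def build_icl_prompt(demonstrations, question, options):
    """Place solved source-country demonstrations before the target-country question.

    `demonstrations` is a list of dicts with hypothetical keys
    "question", "options", and "answer".
    """
    blocks = [
        format_item(d["question"], d["options"], d["answer"]) for d in demonstrations
    ]
    blocks.append(format_item(question, options))
    return "\n\n".join(blocks)


def transfer_pairs(countries):
    """All ordered (source, target) pairs with source != target."""
    return list(permutations(countries, 2))


if __name__ == "__main__":
    # Placeholder text only; real demonstrations would come from ArabCulture.
    demos = [
        {
            "question": "<question about the source country>",
            "options": ["<option 1>", "<option 2>", "<option 3>"],
            "answer": "B",
        }
    ]
    prompt = build_icl_prompt(
        demos,
        "<question about the target country>",
        ["<option 1>", "<option 2>", "<option 3>"],
    )
    print(prompt)
    print(transfer_pairs(["Egypt", "UAE", "Syria"]))
```

In the study each demonstration set holds 12 examples; the single placeholder demonstration above only keeps the sketch short.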

Impact of cross-cultural transfer

The researchers found that training on data about one country improved model performance on questions about other countries by an average of 2% to 5% on multiple-choice questions and sentence completion tasks across models and alignment methods.

DITTO was the more effective method for the multilingual models, improving their performance by an average of 20% on multiple-choice questions, while in-context learning worked better on sentence completion, with an average improvement of 6%.

While the results are evidence that cross-cultural transfer occurs, demonstration sets from some countries and domains helped more than others. For example, demonstrations related to Syria resulted in the highest average improvement (5.02%) across all models and methods.
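A per-country figure like this one can be thought of as an average of gains over all other target countries. The sketch below shows that arithmetic under stated assumptions: it treats improvement as the absolute difference in accuracy between an aligned model and its baseline, which the article does not specify, and the function name, country labels, and numbers are purely illustrative and are not results from the study.

```python
def average_gain(baseline_acc, aligned_acc, source):
    """Mean accuracy gain over every target country except the source itself.

    Both arguments map a target country to accuracy (in percent). Treating
    "improvement" as an absolute difference is an assumption of this sketch.
    """
    targets = [country for country in baseline_acc if country != source]
    gains = [aligned_acc[country] - baseline_acc[country] for country in targets]
    return sum(gains) / len(gains)


if __name__ == "__main__":
    # Made-up placeholder accuracies, not figures from the paper.
    baseline = {"source": 50.0, "target_1": 60.0, "target_2": 70.0}
    aligned = {"source": 55.0, "target_1": 62.0, "target_2": 74.0}
    print(average_gain(baseline, aligned, source="source"))
```

Averaging such gains again across models and alignment methods would give one number per source country, which is how a ranking of demonstration sets could be produced.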

The researchers found that there is greater transferability between countries that are culturally similar, but cultural similarity doesn’t necessarily correlate with geographic proximity. When it came to cultural domains, Hossam says that topics that are extremely specific, like idioms or foods, didn’t transfer well.

The researchers also wanted to see if using examples from completely different cultures would influence performance on Arabic cultural commonsense. They aligned models using demonstration sets related to Indonesian and US culture.

Surprisingly, even though these cultures are quite different, training on Indonesian and US data improved performance on multiple-choice questions about Arab culture just as much as training on Arabic samples did.

Overall, they found that models improve through cross-cultural transfer and that the approach can be used to help models develop a broad understanding of Arab culture rather than just memorizing features that are specific to one country.

Almheiri says that the work shows the potential for cross-cultural transfer learning to be used to improve the cultural alignment of language models for low-resource cultures. But there are questions that still need to be answered about why this approach works as well as it does.

“Not all cultures are the same, even though they benefit from each other’s data,” he says. “We should continue to investigate how these models encode information about different cultures and what makes this cross-cultural transfer possible.”
