Towards open and scalable AI-powered waste detection

Wednesday, November 19, 2025

Material recovery facilities around the world handle massive amounts of plastic, cardboard, metals, and other recyclables every day. All this material needs to be sorted before it is recycled. It’s not easy. Conveyor belts that carry materials are crowded, items overlap, and transparent or reflective objects often go undetected by the workers who do the sorting. As a result, valuable recyclables are lost and workers are often exposed to hazardous conditions.

While researchers have developed AI systems that can recognize different kinds of waste, most academic efforts rely on clean, simplified datasets. Models trained on these datasets perform well in controlled settings but not so well when they are deployed in the real world. Another limitation is that training datasets require thousands of hand-labeled images. Generating them is costly, time-consuming, and requires expert knowledge to distinguish between different types of materials.

“AI models often perform well on tidy datasets but break down when faced with the complexity of real recycling facilities,” explains Muhammad Haris Khan, assistant professor of Computer Vision at MBZUAI.

Under the supervision of Khan, Hassan Abid, a recent master’s graduate from MBZUAI, together with Khan Muhammad of Sungkyunkwan University, set out to tackle these challenges. The team’s research evaluates state-of-the-art vision-language detection models on real industrial waste footage, establishes stronger baseline performance through fine-tuning, and introduces a semi-supervised approach that learns from unlabeled data.

They will present their findings at the 36th British Machine Vision Conference (BMVC) in Sheffield, England.

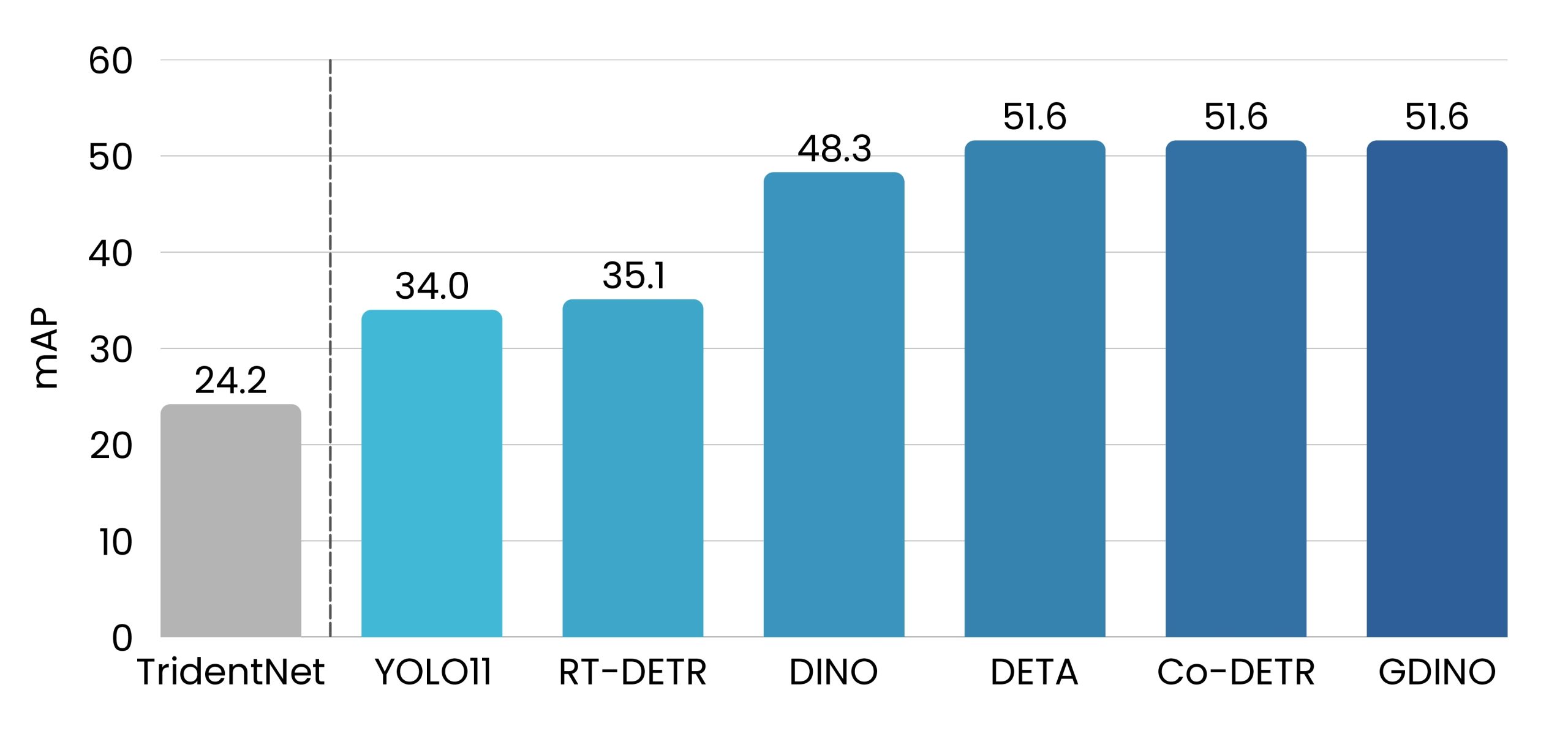

Overall, the results are striking: fine-tuning modern detectors more than doubled performance compared to the strongest prior academic baseline.

Additionally, the team’s semi-supervised pipeline outperformed fully supervised baselines, showing a practical path for open research that rivals proprietary systems while reducing the need for costly manual labeling.

Sorting waste in the wild

Previous research related to this task often relied on convolutional neural networks trained on isolated objects placed on clean backgrounds. To establish stronger baselines, the researchers fine-tuned modern object detection models on real industrial waste imagery. “Our work deliberately focuses on the complexity of industrial reality,” Abid says. “By using actual conveyor belt footage, we challenge models in the same messy environments where recycling decisions really happen.”

The researchers fine-tuned detectors on cluttered industrial streams and combined this approach with a semi-supervised learning pipeline. The result is greater robustness to clutter, better adaptability to rare classes of items, and reduced dependence on manual labeling.

Doubling accuracy with stronger baselines

By fine-tuning advanced models directly on a real industrial waste dataset, the researchers more than doubled performance compared to the strongest prior academic benchmark, boosting mean average precision (mAP), a standard metric for evaluating object detection models, from approximately 24 to over 51.

Performance of object detection models fine-tuned on real industrial waste footage. The older TridentNet baseline reached 24.2 mean average precision (mAP), while modern detectors fine-tuned in this study established much stronger baselines, with the best models achieving 51.6 mAP.
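For readers unfamiliar with the metric, the sketch below shows one common way average precision is computed for a single object class, followed by a quick check of the "more than doubled" claim using the two mAP figures reported above. The article does not say which mAP variant the researchers used, so the all-point interpolation shown here, along with the function names and the toy detections, are illustrative assumptions and not the team's evaluation code.

```python
from typing import Sequence


def average_precision(scores: Sequence[float],
                      is_true_positive: Sequence[bool],
                      num_ground_truth: int) -> float:
    """All-point interpolated AP for one class at one IoU threshold (assumed variant)."""
    if num_ground_truth <= 0:
        raise ValueError("num_ground_truth must be positive")

    # Rank detections from most to least confident.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)

    precisions, recalls = [], []
    tp = fp = 0
    for i in order:
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)

    # Interpolate: precision at each rank becomes the best precision
    # achieved at that rank or any lower-confidence rank.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])

    # Sum the area under the interpolated precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class_ap: Sequence[float]) -> float:
    """mAP is the mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


# Toy example (made-up detections): three predictions, two real objects.
toy_ap = average_precision([0.9, 0.8, 0.7], [True, False, True], 2)

# Figures reported in the article: TridentNet baseline vs. best fine-tuned model.
baseline_map, best_map = 24.2, 51.6
improvement_ratio = best_map / baseline_map  # a little over 2x
```

Benchmark suites often average AP over several overlap thresholds as well as over classes, so the numbers from this sketch are not directly comparable to the reported 24.2 and 51.6.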

Letting AI teach itself

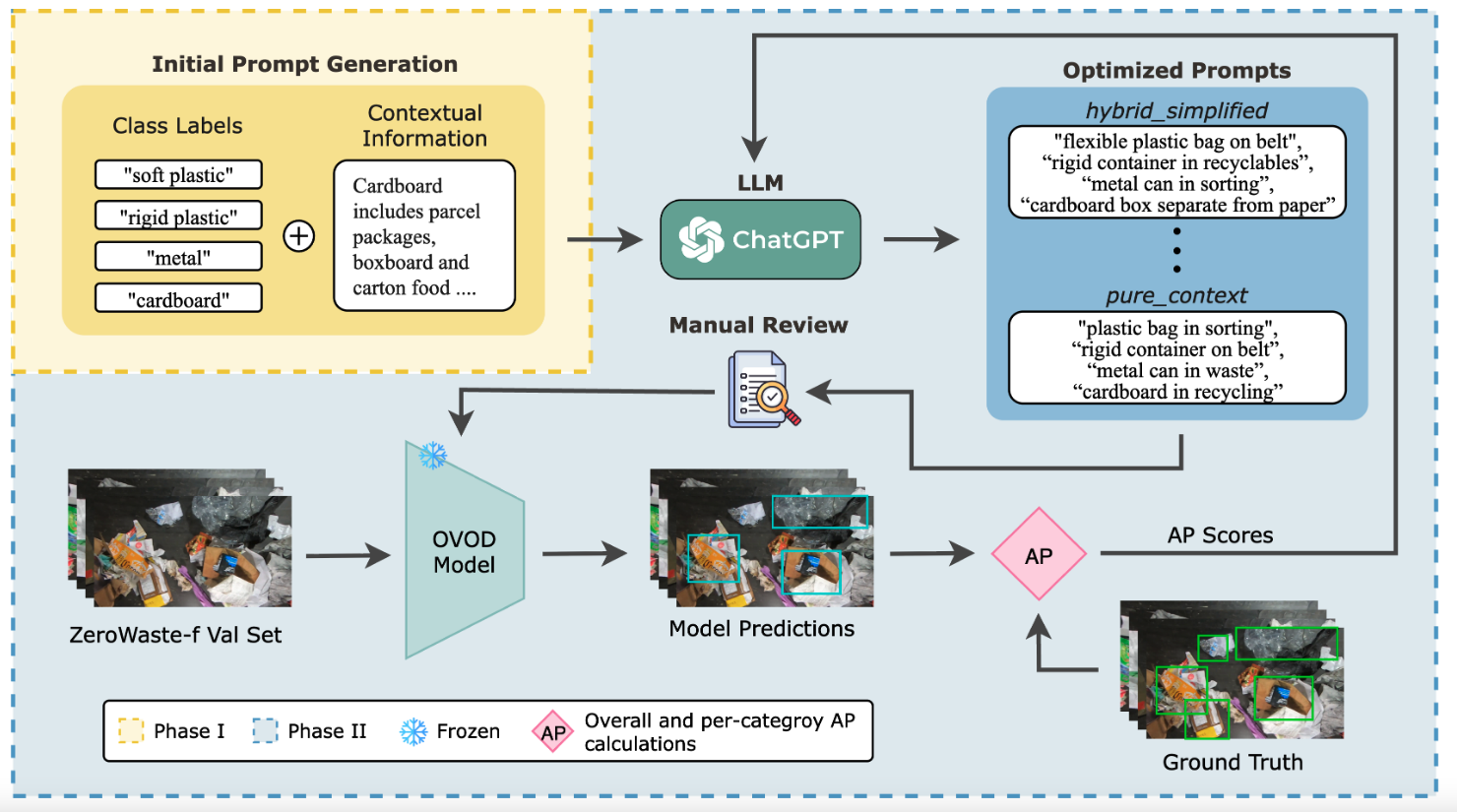

The team’s semi-supervised learning approach tackles one of the biggest challenges in waste detection: the need for large amounts of hand-labeled data. Training AI systems traditionally requires thousands of manually annotated images. Such datasets take weeks to produce and require significant expert effort.

Through the team’s semi-supervised approach, multiple AI models “teach each other.” By generating labels for unlabeled footage and then refining the labels into high-quality pseudo-annotations, the system produced training data at scale without depending entirely on human effort.

Pseudo-labeling pipeline where predictions from several AI models are merged and refined into high-quality annotations, enabling semi-supervised training on real conveyor footage.

Remarkably, models trained with this mix of human-labeled and AI-labeled data consistently outperformed the best fully supervised baselines. This shows that AI can help scale its own training, cutting costs and accelerating progress toward smarter, more accessible recycling systems.
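The article does not spell out how predictions from the different models are merged and refined. As a rough illustration only, the sketch below assumes a simple consensus rule: boxes from different detectors are grouped when they share a label and overlap strongly, and a group becomes a pseudo-annotation only if enough models agree and the average confidence is high. The `Detection` class, the `merge_pseudo_labels` function, and all thresholds are hypothetical and are not taken from the team's released code.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


@dataclass
class Detection:
    box: Box
    label: str
    score: float


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def merge_pseudo_labels(per_model: List[List[Detection]],
                        iou_thr: float = 0.5,   # assumed overlap threshold
                        min_votes: int = 2,     # assumed number of agreeing models
                        min_score: float = 0.5  # assumed confidence cutoff
                        ) -> List[Detection]:
    # Group detections that share a label and overlap the group's first box.
    clusters: List[List[Tuple[int, Detection]]] = []
    for model_idx, detections in enumerate(per_model):
        for det in detections:
            for cluster in clusters:
                ref = cluster[0][1]
                if ref.label == det.label and iou(ref.box, det.box) >= iou_thr:
                    cluster.append((model_idx, det))
                    break
            else:
                clusters.append([(model_idx, det)])

    merged: List[Detection] = []
    for cluster in clusters:
        votes = len({model_idx for model_idx, _ in cluster})
        total = sum(det.score for _, det in cluster)
        if total <= 0:
            continue
        mean_score = total / len(cluster)
        # Keep only groups that several models agree on with high confidence.
        if votes < min_votes or mean_score < min_score:
            continue
        # Refine the box as a confidence-weighted average of the group.
        box = tuple(sum(det.box[k] * det.score for _, det in cluster) / total
                    for k in range(4))
        merged.append(Detection(box, cluster[0][1].label, mean_score))
    return merged


# Toy usage with made-up predictions from two hypothetical detectors.
model_a = [Detection((0, 0, 10, 10), "bottle", 0.9),
           Detection((50, 50, 60, 60), "can", 0.8)]
model_b = [Detection((1, 1, 11, 11), "bottle", 0.7)]
pseudo_labels = merge_pseudo_labels([model_a, model_b])
```

In this toy run only the bottle survives, because both detectors found it, while the can is dropped for lack of a second vote. Requiring agreement trades some recall for cleaner labels, which is one plausible way to keep pseudo-annotations high quality.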

Lowering barriers to the development of AI recycling systems

As part of their contribution, the team released 33,075 high-quality pseudo-annotations from industrial conveyor footage. By making these resources openly available, they hope to accelerate innovation beyond proprietary platforms and enable researchers, startups, and municipalities worldwide to build smarter recycling AI systems.

“Our approach demonstrates that we don’t always need massive amounts of human-labeled data to push the field forward,” Abid says. “With careful design, semi-supervised methods can close the gap with commercial systems while keeping research open and accessible.”

Toward smarter, safer, and greener facilities

The researchers say their study is more than a benchmark exercise: the results point to a clear direction for how AI systems can make recycling smarter and more scalable. With recycling rates worldwide still below 20%, advances like these could help facilities recover more valuable material, reduce landfill waste, and improve worker safety.

Looking ahead, the team sees several areas for improvement that they hope to address in the future. For example, transparent plastics, reflective metals, and rare categories remain difficult to detect reliably, even with the most advanced models. Another challenge is the constantly changing nature of industrial waste, with new types of packaging and materials appearing every day.

The team has released their code and annotations, inviting the global community to build upon their work and push the boundaries of AI for recycling and sustainability.

- machine learning
- research
- ML
- detectors
- BMVC
- waste
- fine-tuning
